In-Memory OLTP has been included in the last three releases of SQL Server, starting with 2014 through 2017, and now runs on Linux, Windows, Azure SQL Database, and Azure Managed Instances. Additionally, since SQL 2016/SP1, the In-Memory OLTP feature has been available in non-enterprise editions.

What does this all mean?

It most likely means that it’s only a matter of time before a memory-optimized database lands on your doorstep, and you’ll probably have no idea how or why it’s different.

For a while now, I’ve been working on a script to evaluate a SQL Server environment for anything related to In-Memory OLTP, and I had help with testing, general suggestions, and final touches from Konstantin Taranov and Aleksey Nagorskiy; their assistance was invaluable. Konstantin suggested to Erik Darling and Brent Ozar that my script be included as part of their great Blitz series, and the the result is…..sp_BlitzInMemoryOLTP.

It is now part of the awesomeness known as the First Responder Kit, and the direct link to the script can be found here.

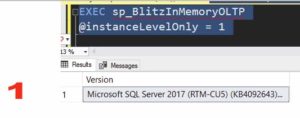

sp_BlitzInMemoryOLTP reports on two categories: instance level and database level.

First let’s discuss which parameters it sp_BlitzInMemoryOLTP accepts, and then we’ll break out the results, section by section.

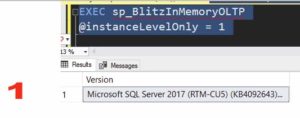

@instanceLevelOnly BIT

This flag determines whether or not to simply report on the server-level environment (if applicable, i.e. there is no server-level environment for Azure SQL Database). With this parameter, memory-optimized databases are ignored. If you specify @instanceLevelOnly and a database name, the database name is ignored.

@dbName NVARCHAR(4000) = N’ALL’

If you don’t specify a database name, then sp_BlitzInMemoryOLTP reports on all memory-optimized databases within the instance that it executes in, or in the case of Azure SQL Database, the database that you provisioned. This is because the default for the @dbName parameter is N’ALL’.

Example:

|

|

EXEC sp_BlitzInMemoryOLTP |

It’s also possible to report on a specific database name.

Example:

|

|

EXEC sp_BlitzInMemoryOLTP @dbName = N‘myInMemDB’ |

The results of calling sp_BlitzInMemoryOLTP this way are explained later in this post.

@tableName NVARCHAR(4000) = NULL

Example:

|

|

EXEC sp_BlitzInMemoryOLTP @tableName = N’myInMemtable’ |

If you only want to report on a specific memory-optimized table, you would supply a value for the @tableName parameter, and sp_BlitzInMemoryOLTP will search through all memory-optimized databases, looking for memory-optimized user tables that match. There is currently no wildcard matching for the @tableName parameter.

@debug BIT

Using the @debug =1 parameter tells sp_BlitzInMemoryOLTP to only print the TSQL statements that would have been executed. This allows you (or more likely, me) to resolve problems like missing quotes, or other potential issues that can occur when using dynamic SQL.

Example:

|

|

EXEC sp_BlitzInMemoryOLTP @debug = 1 |

Supported platforms

This script has been tested on SQL 2014, SQL 2016, SQL 2017, and Azure SQL Database. It has not been tested against Azure Managed Instances

In the comments, please let me know other things about memory-optimized environments and/or databases you’d like to see included in the script.

How to interpret the results for sp_BlitzInMemoryOLTP

When you execute sp_BlitzInMemoryOLTP, it runs several queries that pertain to the In-Memory OLTP environment. It should be noted that if there are no results for a given query, i.e. no temporal memory-optimized tables, sp_BlitzInMemoryOLTP does not return an empty result set (this keeps the clutter to a minimum).

For example, it could be that a memory-optimized filegroup has been added to a database, but no memory-optimized objects have been created. Depending on the version of SQL Server, there might not be details about the containers or files within them, so sp_BlitzInMemoryOLTP won’t return information on that.

Instance level

Instance level evaluates the following:

- the version/edition of SQL server

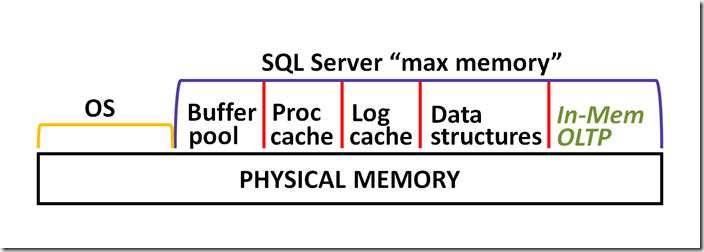

- SQL Server ‘max memory’ setting

- memory clerks

- XTP memory consumers, aggregated

- XTP memory consumers, detailed

- the value of the committed_target_kb column from sys.dm_os_sys_info

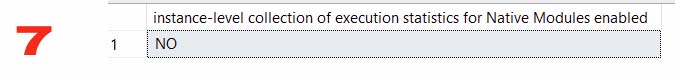

- whether or not instance-level collection of execution statistics has been enabled for all natively compiled stored procedures (because this can kill their performance….)

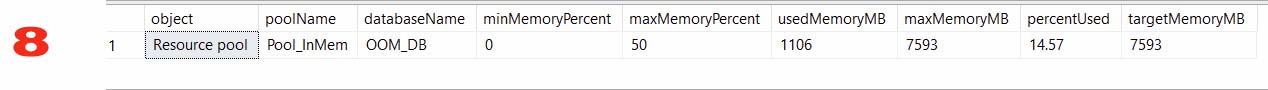

- when running Enterprise, if there are any resource groups defined, and which memory-optimized databases are bound to them

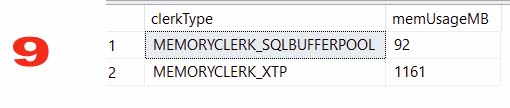

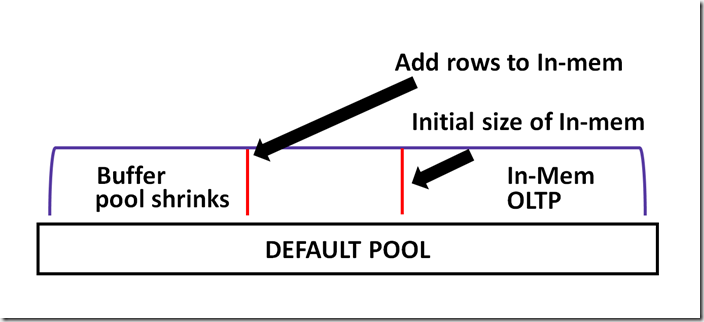

- XTP and buffer pool memory allocations, because In-Memory OLTP can affect on-disk workloads

- summary of memory used by XTP

Section 1: version/edition of SQL server

Documentation here.

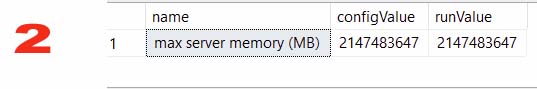

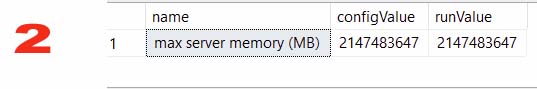

● Section 2: SQL Server ‘max memory’ setting

Documentation here.

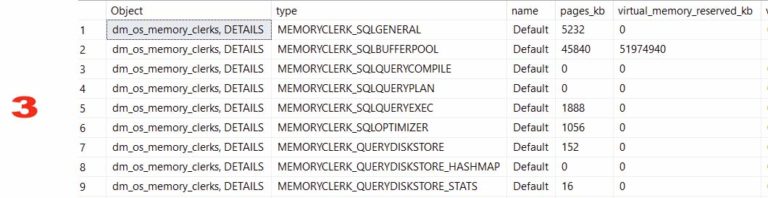

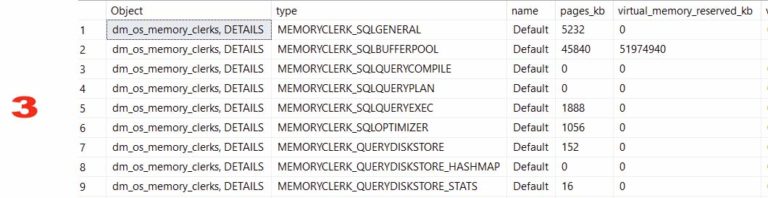

● Section 3: memory clerks

Documentation here.

● Section 4: XTP memory consumers, aggregated

Documentation here.

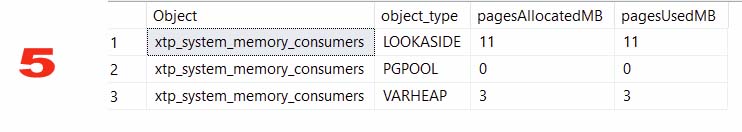

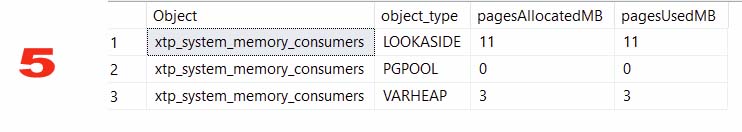

● Section 5: XTP memory consumers, detailed

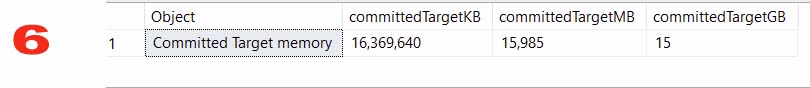

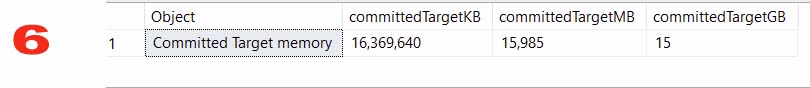

● Section 6: the value of the committed_target_kb column from sys.dm_os_sys_info. The amount of memory that SQL Server can use for the In-Memory OLTP feature is a percentage of the committed_target_kb value. But be forewarned, this value is not static. Details in my post here.

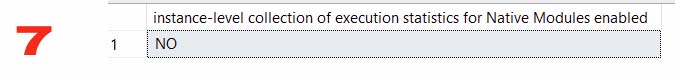

● Section 7: whether or not instance-level collection of execution statistics has been enabled for all natively compiled stored procedures. Enabling this on a production server could be considered drastic. More details can be found in my post here.

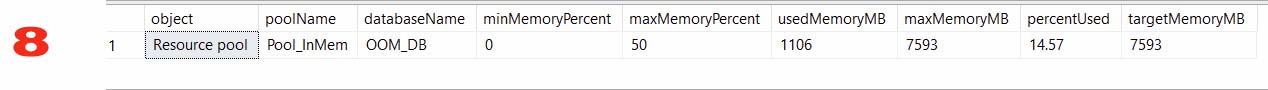

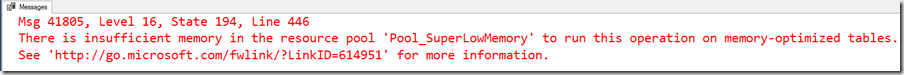

● Section 8: if running Enterprise, if there are any resource groups defined, and which memory-optimized databases are bound to them. Binding memory-optimized database to a Resource Pool (using Resource Governor) is considered a best practice, but unfortunately this capability is still Enterprise only. But if you’re on that edition, you should also be monitoring how close to the out of memory threshold you’re getting, and fire an alert when required. More details in my post here.

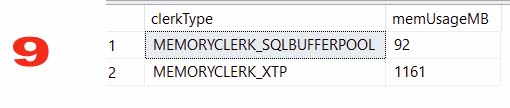

● Section 9: XTP and buffer pool memory allocations, because In-Memory OLTP can affect on-disk workloads

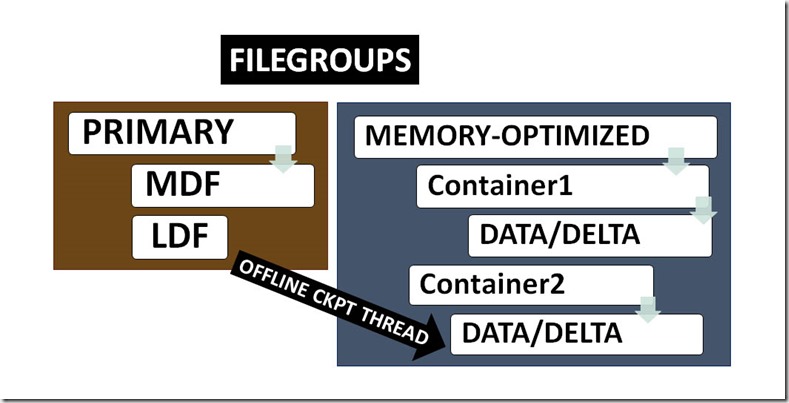

Database level

For a given memory-optimized database (or all memory-optimized databases), database level evaluates the following:

- all memory-optimized tables

- all indexes on all memory-optimized tables

- the average chain length for HASH indexes (and informs you if the bucket count is too low)

- the number of indexes per memory-optimized table

- all natively compiled stored procedures

- which native modules are loaded (stored procedures only, and this is not relevant for Azure SQL Database)

- the number of natively compiled procedures

- whether or not the collection of execution statistics is enabled for any natively compiled procedures

- if using the temporal feature for memory-optimized tables, the amount of memory consumed by hidden temporal internal tables (which are memory-optimized)

- memory structures for LOB columns (off-row)

- all memory-optimized table types

- database layout, which includes mdf, ldf, ndf, and containers, and the size in various formats (KB/MB/GB). The totalSizeMB column is the total for the entire database (uses a Window Function).

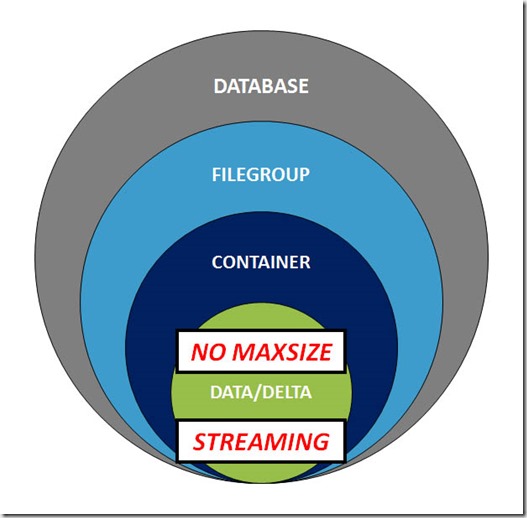

Three separate result sets that describe containers:

- Container details by container name

- Container details by fileType and fileState

- Container file details by container_id, fileType and fileState

For Azure SQL Database, sp_BlitzInMemoryOLTP:

- verifies if you are running on the Premium tier (that’s the only tier that supports In-Memory OLTP)

- displays all records for xtp_storage_percent, in descending order (more info here)

- displays the status of XTP_PROCEDURE_EXECUTION_STATISTICS and XTP_QUERY_EXECUTION_STATISTICS (more info here)

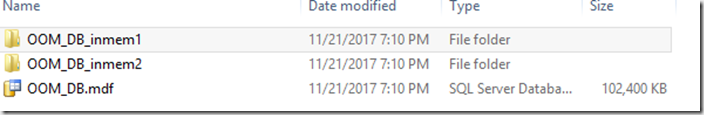

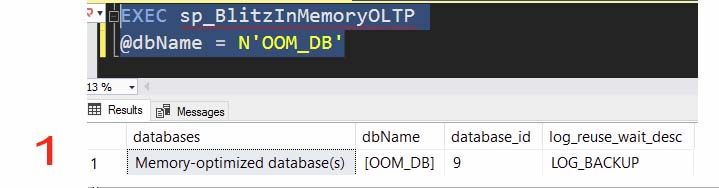

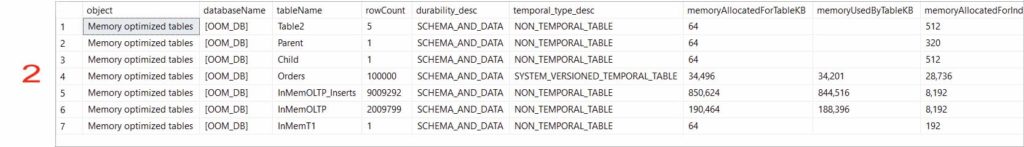

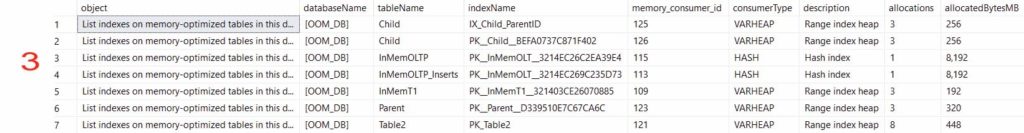

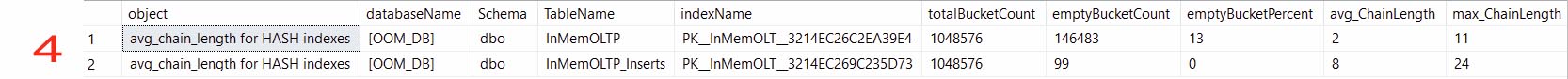

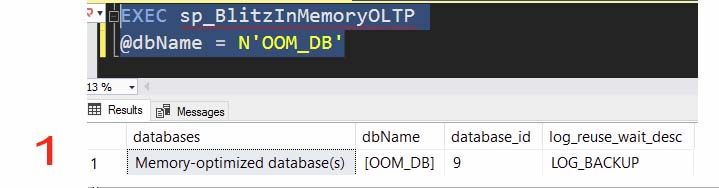

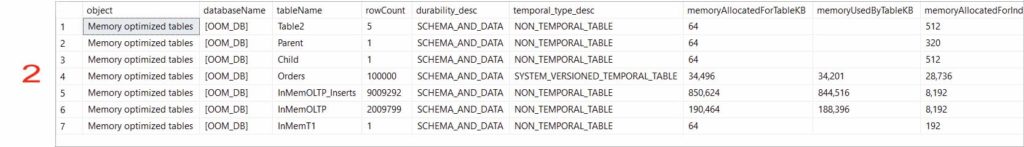

The output in the photos that follow was returned from executing sp_BlitzInMemoryOLTP, for a database named OOM-DB. You can get information on all memory-optimized databases if you don’t supply a database name when calling sp_BlitzInMemoryOLTP.

● Section 1: Listing of memory-optimized databases on this instance of SQL Server

· Section 2: memory-optimized tables, including row counts

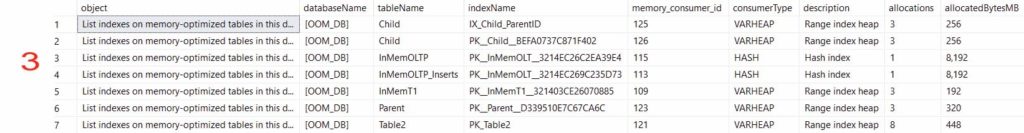

● Section 3: indexes on memory-optimized tables. It’s helpful to know how many, and what type of indexes there are.

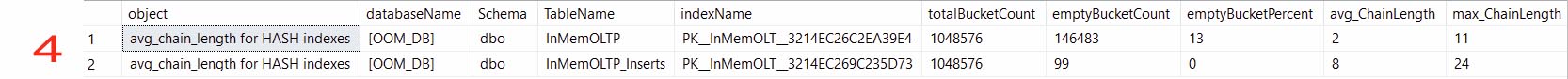

● Section 4: average chain length for HASH indexes (if any). When a HASH index is created for a memory-optimized table, a value must be supplied for what’s known as the “bucket count”. But it doesn’t get adjusted automatically, and as a result, it can cause performance problems. More details here.

● Section 5: Number of indexes per memory-optimized table. SQL 2014 and SQL 2016 have a limit of 8 nonclustered (RANGE) indexes per memory-optimized table. That ceiling was lifted in SQL 2017, and I’ve tested creating several hundred indexes on a single memory-optimized table (but please don’t do that in production!).

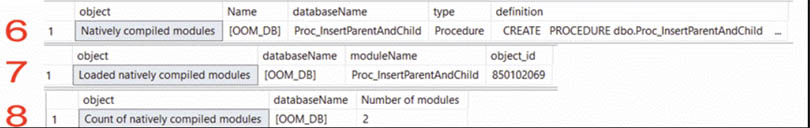

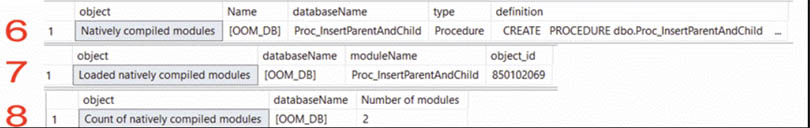

Sections 6 through 8:

- natively compiled stored procedures

- which natively compiled stored procedures are currently loaded

- how many natively compiled stored procedures there are

● Section 9: if using the temporal feature for memory-optimized tables, the amount of memory consumed by hidden temporal internal tables (which are memory-optimized). For temporal tables, there’s a difference between how things are handled if the temporal table is memory-optimized. I’ve written about that in this post.

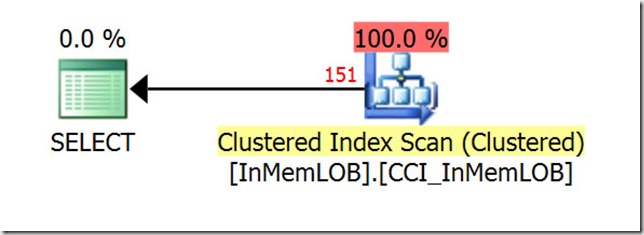

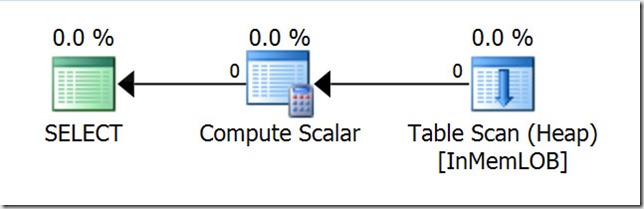

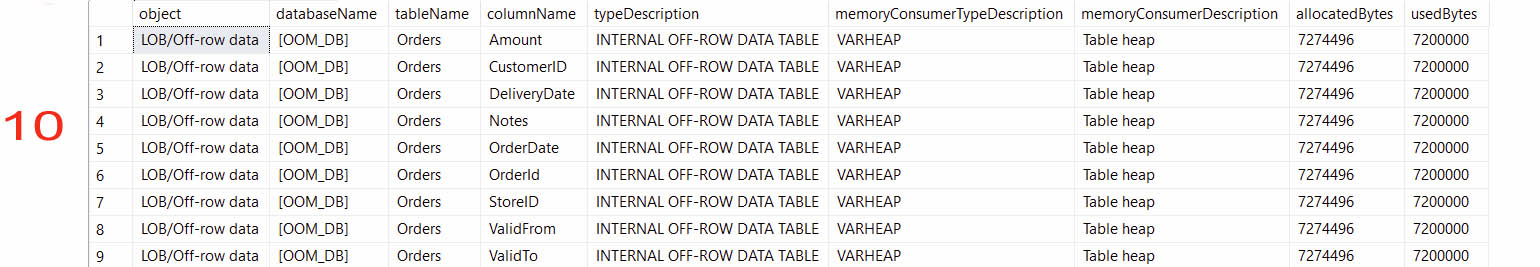

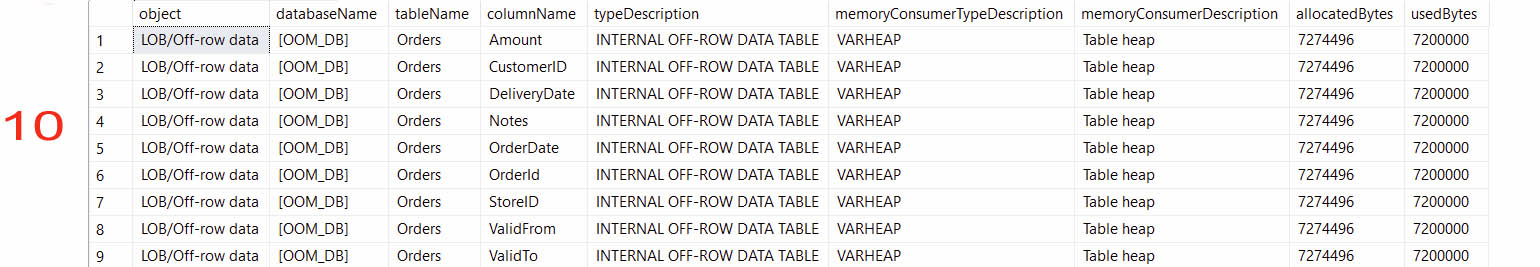

● Section 10: memory structures for LOB columns (off-row). For memory-optimized tables, LOB columns are actually stored as separate tables, and this can lead to performance problems. MCM Dimitri Korotkovitch has a great post on it here.

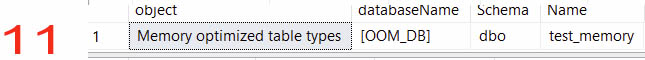

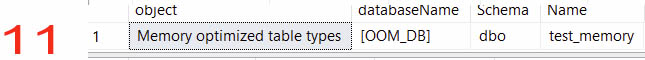

● Section 11: memory-optimized table types. Yes, tables and table types can be memory-optimized, and you’ll want to be aware of the potential gotchas with those memory-optimized types, as detailed in my post.

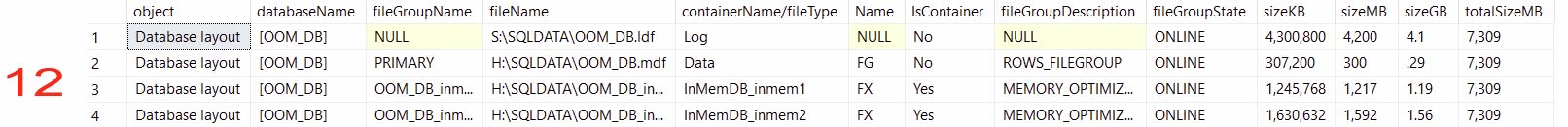

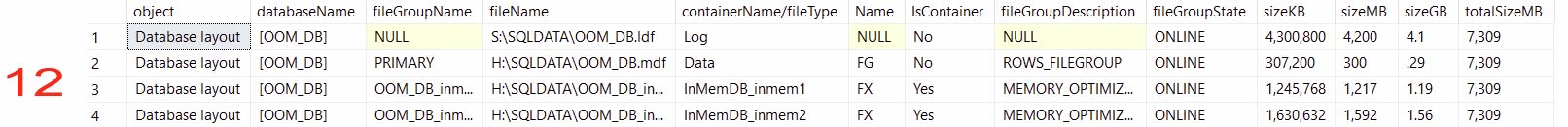

● Section 12: all database files, including the name, size, and location for each container.

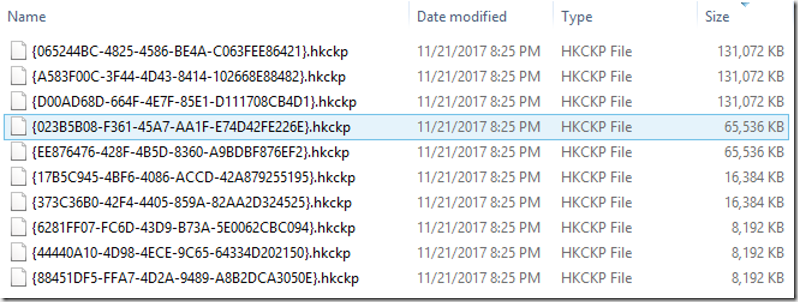

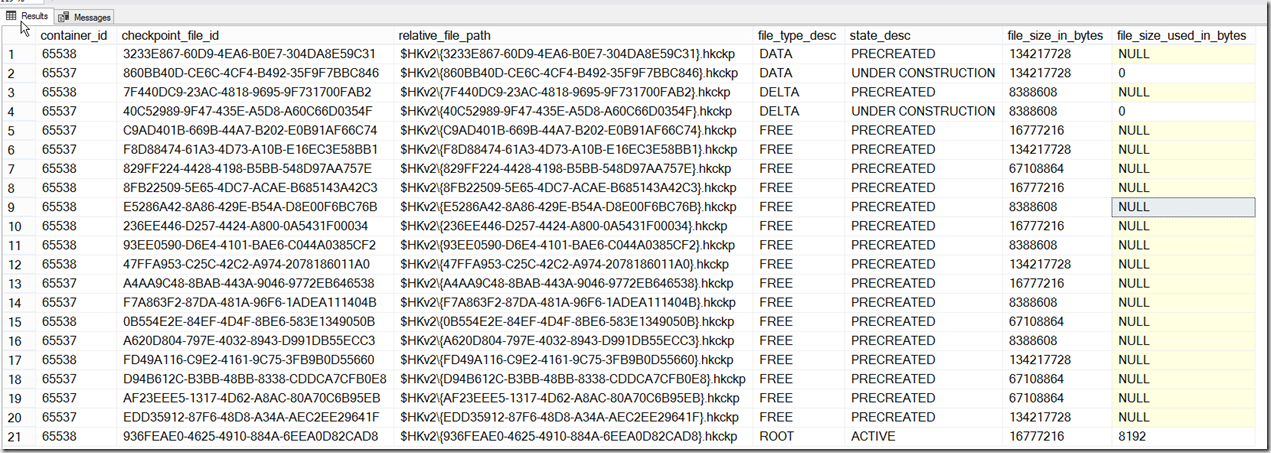

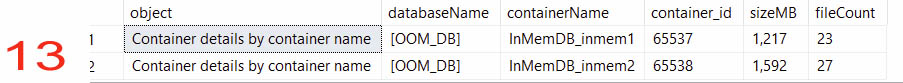

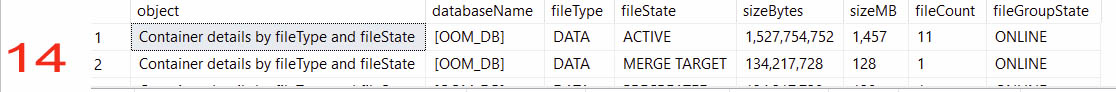

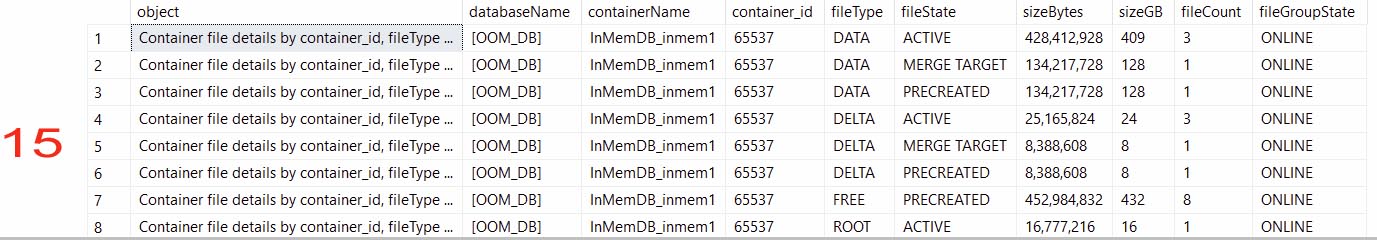

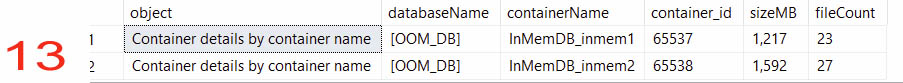

Sections 13 through 15 pertain to the amount of storage consumed by durable memory-optimized tables. The files that persist durable data to storage go through several state changes over time. As a result, the storage footprint for memory-optimized databases that contain durable data can be surprisingly large, relative to the amount of data that’s stored in memory (Microsoft suggest 4x memory-optimized data size as a starting point). So it’s a good idea to keep an eye on the storage footprint.

● Section 13: Container details by container name

One row per container, listing the aggregated size of all files within that container, as well as how many files per container

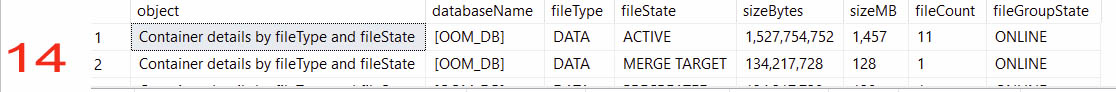

● Section 14: Container details by fileType and fileState

Here, the breakdown is a bit different, taking into account the type of file.

For each type of file, i.e. DATA or DELTA, aggregate the storage consumed and number of files for each file type, across ALL containers for this database. For example, there are a total of 11 files of fileType DATA with a fileState of ACTIVE, across all containers for this memory-optimized database. (Note that SQL 2014 has file types that don’t exist in later versions of SQL Server)

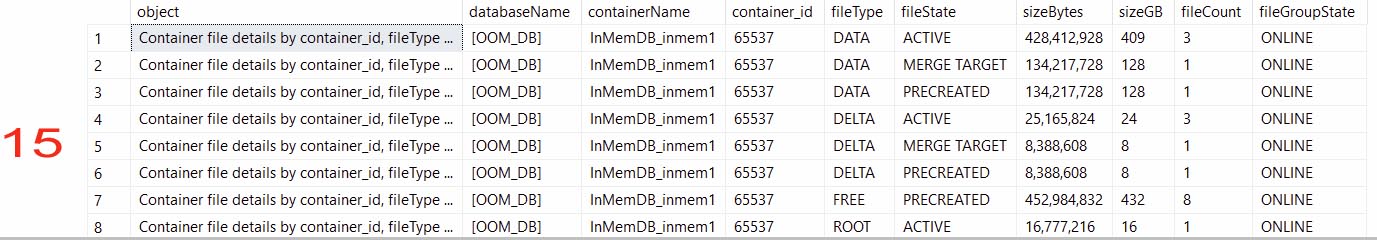

● Section 15: Container file details by container_id, fileType and fileState

For each type of file, i.e. DATA or DELTA, aggregated the storage consumed and number of files for each file type, PER CONTAINER.

In the prior example, we saw that there were a total of 11 files of fileType DATA with a fileState of ACTIVE, across all containers for this memory-optimized databases.

This result shows the breakdown of each fileType and fileState PER CONTAINER. The container named InMemDB_inmem1 has 3 files that have a fileType of DATA and a fileState of ACTIVE. So we expect to see 8 more files with this type and state, in the remaining containers. Sure enough, we see that the container named InMemDB_inmem2 has an additional 8 files with a fileType of DATA and a fileState of ACTIVE.

Understanding how In-Memory OLTP works (with all of its various gotchas) can only be addressed by putting in the required time. If you read the documentation, and then study the real-world deployment concepts detailed in my extensive blog post series on In-Memory OLTP, you’ll be on the right path. Once you begin to wrap your brain around In-Memory OLTP, you’ll need some help evaluating memory-optimized environments and/or databases, and that’s where sp_BlitzInMemoryOLTP can help.

![image_thumb[389]_thumb image_thumb[389]_thumb](http://nedotter.com/wp-content/uploads/2017/11/image_thumb389_thumb_thumb.png)

![image_thumb[26] image_thumb[26]](http://nedotter.com/wp-content/uploads/2017/11/image_thumb26_thumb.png)

![image_thumb[29] image_thumb[29]](http://nedotter.com/wp-content/uploads/2017/11/image_thumb29_thumb.png)

![image_thumb[31] image_thumb[31]](http://nedotter.com/wp-content/uploads/2017/11/image_thumb31_thumb.png)

![image_thumb[33] image_thumb[33]](http://nedotter.com/wp-content/uploads/2017/11/image_thumb33_thumb.png)