As part of a conversation on the #sqlhelp, I was giving feedback to a member of the Microsoft Tiger team about how In-Memory OLTP might be improved. One of my responses was about implementing a hot/cold data solution, and Adam Machanic (b|t) responded, suggesting that partitioned views might work. I had already tested partitioned views and memory-optimized tables for queries, and confirmed that it worked as expected, but I had never tested DML for this combination of features.

Based on my new testing, I can state with confidence that partitioned view support for DML with memory-optimized tables will properly handle INSERT and DELETE operations, but some UPDATE operations might fail, and that failure occurs in a most ungraceful way. After verifying that this is an issue on SQL 2016 SP1/CU3 and SQL 2017 CTP2.1, I filed this Connect item. Microsoft has confirmed this issue, but has not yet determined how it will be fixed. For example, they could decide to disallow all UPDATEs when a memory-optimized table belongs to a partitioned view, or instead decide to support it under limited circumstances. I’ll update this post when I have more detail from Microsoft.

Let’s assume that we have two tables that belong to a partitioned view. Both tables can be memory-optimized, or one table can be memory-optimized, and the other on-disk.

Success condition

an UPDATE occurs to a row in a table, and the UPDATE does not change where the row would reside, i.e. does not cause it to “move” to another table, based on defined CONSTRAINTS

Failure conditions:

a. UPDATE occurs to a row in the memory-optimized table that causes it to move to either another memory-optimized table, or a on-disk table

b. UPDATE occurs to a row in the on-disk table that causes it to move to the memory-optimized table

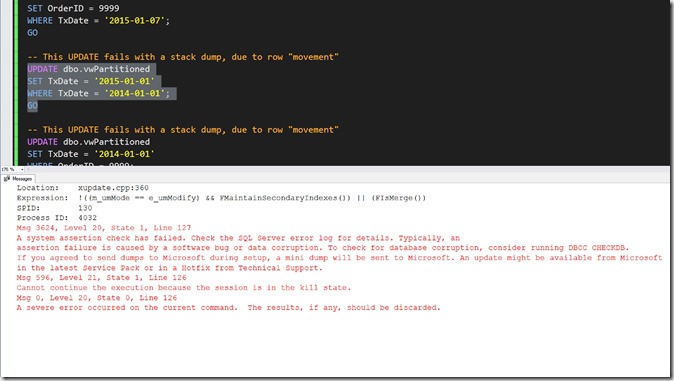

The failure looks like this:

Repro script:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 |

/* Repro for UPDATEs on a partitioned view */ SET NOCOUNT ON DROP VIEW IF EXISTS dbo.vwPartitioned; GO DROP TABLE IF EXISTS dbo.Table1; GO DROP TABLE IF EXISTS dbo.Table2; GO CREATE TABLE dbo.Table1 ( TxDate DATE ,OrderID INT CONSTRAINT PK_Table1 PRIMARY KEY NONCLUSTERED (TxDate, OrderID) ) --WITH (DURABILITY = SCHEMA_AND_DATA, MEMORY_OPTIMIZED = ON); GO CREATE TABLE dbo.Table2 ( TxDate DATE ,OrderID INT CONSTRAINT PK_Table2 PRIMARY KEY NONCLUSTERED (TxDate, OrderID) ) WITH (DURABILITY = SCHEMA_AND_DATA, MEMORY_OPTIMIZED = ON); GO INSERT dbo.Table1 ( TxDate ,OrderID ) VALUES ('2014-01-01', 1) ,('2014-01-10', 2) ,('2014-03-15', 3) ,('2014-07-20', 4); INSERT dbo.Table2 ( TxDate ,OrderID ) VALUES ('2015-01-07', 5) ,('2015-02-10', 6) ,('2015-03-18', 7) ,('2015-10-28', 8); GO CREATE VIEW dbo.vwPartitioned WITH SCHEMABINDING AS ( SELECT TxDate ,OrderID FROM dbo.Table1 UNION ALL SELECT TxDate ,OrderID FROM dbo.Table2 ); GO SET STATISTICS IO ON; GO ALTER TABLE dbo.Table2 ADD CONSTRAINT CC_Table2_TxDate CHECK (TxDate >= '2015-01-01'); GO ALTER TABLE dbo.Table1 ADD CONSTRAINT CC_Table1_TxDate CHECK (TxDate < '2015-01-01'); GO ALTER VIEW dbo.vwPartitioned WITH SCHEMABINDING AS ( SELECT TxDate ,OrderID FROM dbo.Table1 UNION ALL SELECT TxDate ,OrderID FROM dbo.Table2 ); GO INSERT dbo.vwPartitioned ( TxDate ,OrderID ) VALUES ('2014-01-13', 91) ,('2015-01-08', 85); GO UPDATE dbo.vwPartitioned SET OrderID = 2 WHERE TxDate = '2014-01-13'; GO DELETE dbo.vwPartitioned WHERE TxDate = '2014-01-13'; GO -- check location of rows SELECT * FROM dbo.Table2 ORDER BY OrderID GO SELECT * FROM dbo.Table1 ORDER BY OrderID GO -- This UPDATE succeeds, because it does NOT attempt to "move" the row to another table UPDATE dbo.vwPartitioned SET OrderID = 9999 WHERE TxDate = '2015-01-07'; GO -- This UPDATE fails with a stack dump, due to row "movement" UPDATE dbo.vwPartitioned SET TxDate = '2015-01-01' WHERE TxDate = '2014-01-01'; GO -- This UPDATE fails with a stack dump, due to row "movement" UPDATE dbo.vwPartitioned SET TxDate = '2014-01-01' WHERE OrderID = 9999; GO -- check location of rows SELECT * FROM dbo.Table2 ORDER BY OrderID GO SELECT * FROM dbo.Table1 ORDER BY OrderID |