In Part 1, we created a memory-optimized database, and explained the different states that CFPs can have.

In this post, we’ll take note of the changes to free space on the volumes that host our containers, before/after creating a memory-optimized table.

To begin with, we can see that the folder currently consumes 100MB of storage, because that’s how much we allocated for the MDF file when we created our database.

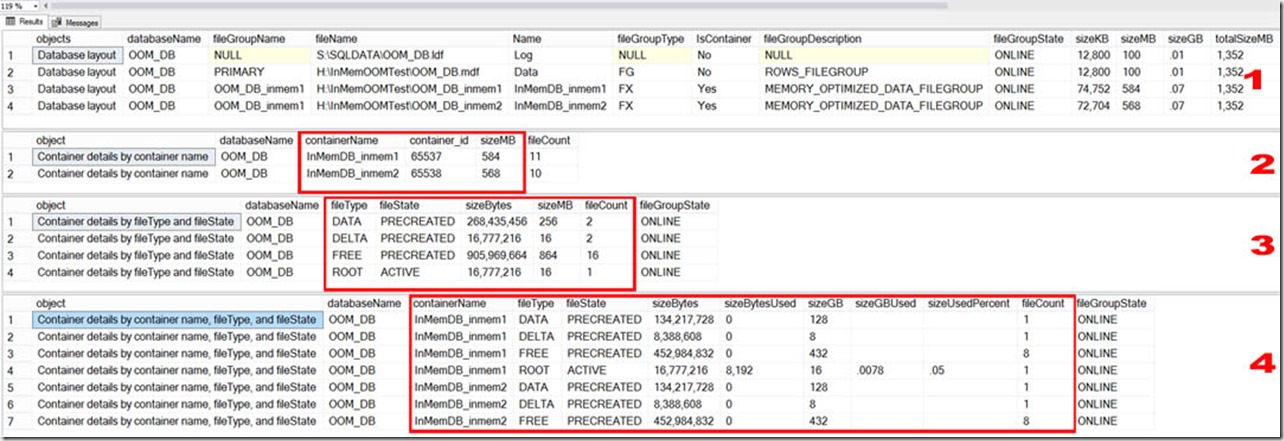

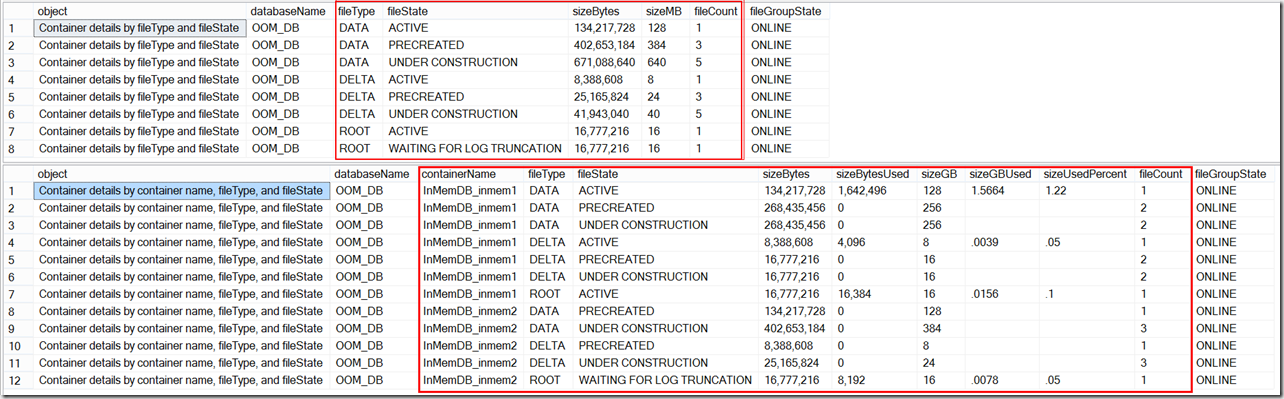

I’ve written a script that among other things, displays summary and detail information about memory-optimized databases, which can be found here. After changing the script to only give us details about the OOM_DB database, the relevant sections are listed below. In the somewhat voluminous output from the script, you can scroll down to the section entitled “’Database layout”.

We should note the following:

- First, the general database layout is reported, which includes all mdf and ldf files, as well as listing all containers. If you are not familiar with what a container is, please go back to Part 1.

- The second section displays details about each container, i.e. how large it is, and how many files reside there.

- Next we drill further down, and summarize at the fileType and fileState level, regardless of which container the file belong to.

- And finally, for each container, we detail the number of files, and aggregate the amount of storage consumed, per fileType and fileState.

This information is extremely valuable when assessing the storage state of a memory-optimized database.

The containers consume 584MB and 568MB respectively (but after running these tests several times, it seems that the numbers fluctuate slightly), and all of the files in each container are “PRECREATED”. As we mentioned in Part 1, as a performance optimization, the In-Memory engine precreates files, and this has some interesting implications, which we’ll see later on.

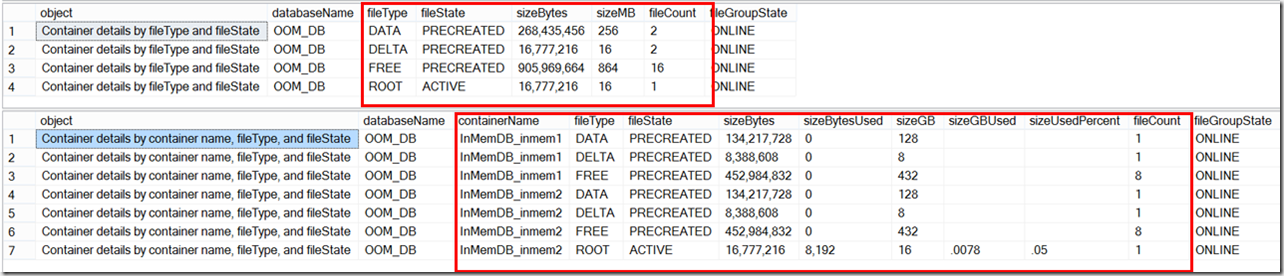

The image above is what you’ll see when you have created a memory-optimized database, and created at least one memory-optimized table. But as I said earlier, if you’ve only created a memory-optimized database, the containers will be empty.

Let’s create our table (which is specifically designed to consume a lot of memory for each row):

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 |

USE OOM_DB GO CREATE TABLE dbo.InMemTable1 ( rowKey INT IDENTITY PRIMARY KEY NONCLUSTERED ,col01 CHAR(8000) NOT NULL ,col02 CHAR(8000) NOT NULL ,col03 CHAR(8000) NOT NULL ,col04 CHAR(8000) NOT NULL ,col05 CHAR(8000) NOT NULL ,col06 CHAR(8000) NOT NULL ,col07 CHAR(8000) NOT NULL ,col08 CHAR(8000) NOT NULL ,col09 CHAR(8000) NOT NULL ,col10 CHAR(8000) NOT NULL ,col11 CHAR(8000) NOT NULL ,col12 CHAR(8000) NOT NULL ,col13 CHAR(8000) NOT NULL ,col14 CHAR(8000) NOT NULL ,col15 CHAR(8000) NOT NULL ,col16 CHAR(8000) NOT NULL ,col17 CHAR(8000) NOT NULL ,col18 CHAR(8000) NOT NULL ,col19 CHAR(8000) NOT NULL ,col20 CHAR(8000) NOT NULL ) WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_AND_DATA) |

I ran the diagnostic script again, and now you can see that all of the data and delta files are PRECREATED, because none of them have been populated yet.

Let’s INSERT 10 rows, and run the diagnostic script again.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 |

SET NOCOUNT ON; DECLARE @stringVal VARCHAR(8000) = REPLICATE('A', 8000) INSERT dbo.InMemTable1 ( col01, col02, col03, col04, col05, col06, col07, col08, col09, col10,col11, col12, col13, col14,col15, col16, col17, col18, col19, col20 ) VALUES ( @stringVal, @stringVal, @stringVal, @stringVal, @stringVal, @stringVal, @stringVal, @stringVal, @stringVal ,@stringVal, @stringVal, @stringVal, @stringVal, @stringVal, @stringVal, @stringVal, @stringVal, @stringVal ,@stringVal, @stringVal ) GO 10 |

GO 10

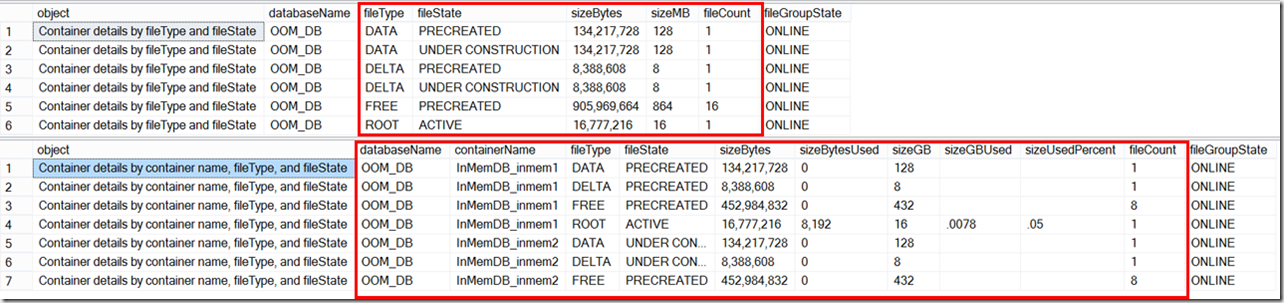

After adding 10 rows, we have:

It’s clear that before we inserted any data, we had 20 files that were in the PRECREATED state. After inserting 10 rows, we now have 18 PRECREATED files, and 2 UNDER CONSTRUCTION files, which means the In-Memory engine is populating these files, that they are “open” in terms of their CHECKPOINT status. If you don’t understand what these terms mean, please read Part 1.

But there’s one thing that doesn’t look right here: we’ve inserted data into the table, but sizeBytesUsed is still zero for the UNDER CONSTRUCTION files. Why is that?

The Microsoft documentation explains it:

“file_size_used_in_bytes: for checkpoint file pairs that are still being populated, this column will be updated after the next checkpoint.”

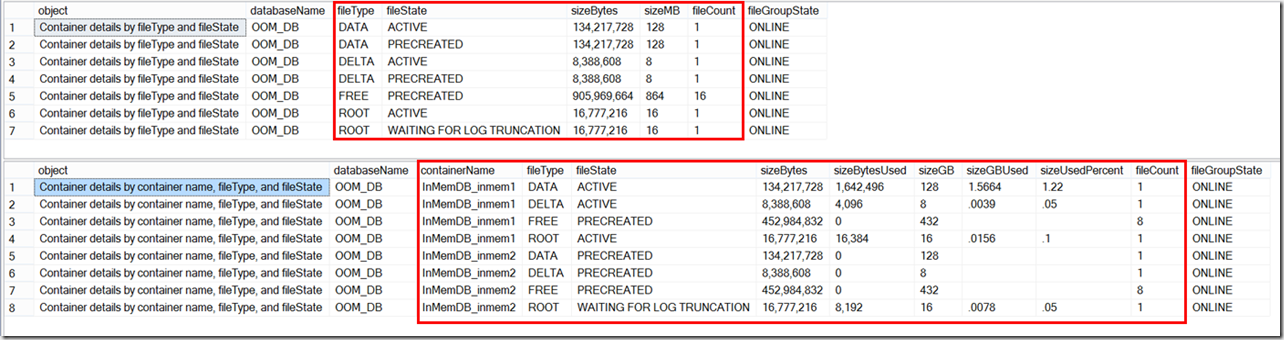

After executing a manual CHECKPOINT, the following image show the before/after state of our memory-optimized database. We can see the difference: we now have values for the sizeBytesUsed column for the UNDER CONSTRUCTION rows.

Please note:

- The ‘Container details by fileType and fileState’ had only PRECREATED AND UNDER CONSTRUCTION data and delta files

- All files that were UNDER CONSTRUCTION before executing the manual CHECKPOINT are now ACTIVE. This was discussed in Part 1 – when a CHECKPOINT occurs, the files that were being populated are now closed to further entries. This happens automatically, but sometimes you need to do it manually (more on that in a future post).

- In the ‘Before’ image, data and delta files have two states, ACTIVE and PRECREATED.

- In the ‘After’ image, data and delta files have three states, ACTIVE and PRECREATED, and UNDER CONSTRUCTION.

- For the first time, we’re seeing the fileState of ‘WAITING FOR LOG TRUNCATION’( which we’ll explain in Part 3)

Before #################

After #################

In Part 3, we’ll dive deeper into IOPS and free space requirements, and how to reset the database status when all volumes run out of free space.

![image_thumb[405] image_thumb[405]](http://nedotter.com/wp-content/uploads/2017/11/image_thumb405_thumb.png)

![image_thumb[406] image_thumb[406]](http://nedotter.com/wp-content/uploads/2017/11/image_thumb406_thumb.png)