If you pay attention to hardware innovations as they relate to SQL Server, you’ve probably heard of PMEM, aka Persistent Memory or Storage Class Memory. I blogged about PMEM in October of 2016, when HP Gen9 servers first started to support it (my post is here). If you are unfamiliar with PMEM, I suggest you read that post and watch the linked videos before reading further.

With the impending release of SQL 2019, things are about to change in potentially big ways with regard to drastically reducing storage latency.

But first let’s reiterate how PMEM is supported on SQL Server 2016 running on Windows Server 2016.

SQL 2016 supports placing the “tail of the log” on a PMEM device (NVDIMM-N modules), which has the effect of reducing or eliminating WRITELOG waits. I was quite interested in that specific wait type because of my keen interest in the In-Memory OLTP feature. If deploying Hekaton removed some of the bottlenecks associated with the traditional engine, the next bottleneck to appear was usually the transaction log itself, in the form of WRITELOG waits. The log could keep up while the traditional engine was the bottleneck, but not once that bottleneck was gone. PMEM can bypass the storage stack, and it often reduces or eliminates transaction log latency. However, PMEM cannot do this alone; it must be used in conjunction with an application specifically written to leverage its capabilities (like SQL Server).
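As a rough way to see whether WRITELOG is a significant wait on a given instance, the cumulative wait statistics can be queried. This is a minimal sketch rather than a full diagnosis: it assumes VIEW SERVER STATE permission, and the numbers are cumulative since the instance started (or since wait stats were last cleared), so they are most useful when compared before and after a change.

```sql
-- Cumulative WRITELOG waits since the instance started
-- (or since wait stats were last cleared).
SELECT
    wait_type,
    waiting_tasks_count,
    wait_time_ms,
    -- Average wait per task; NULLIF avoids a divide-by-zero on an idle instance.
    wait_time_ms * 1.0 / NULLIF(waiting_tasks_count, 0) AS avg_wait_ms
FROM sys.dm_os_wait_stats
WHERE wait_type = N'WRITELOG';
```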

One issue with adopting PMEM for tail of the log on Windows Server 2016 is that there is no support for TDE (but see the Hybrid Buffer Pool section below). Also, the first release of PMEM from HP had limited capacity. This was not an issue, because the tail of the log only consumes about 20MB per database (that’s not a typo, it’s megabytes), and the minimum size of an NVDIMM-N module was 8GB. For tail of the log on Windows Server 2016, you had way more PMEM capacity than required.
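For reference, tail of the log (which the Microsoft documentation calls the persisted log buffer) is set up by adding a small additional log file on a DAX formatted volume. The sketch below assumes a hypothetical database named SalesDB and a DAX formatted PMEM volume mounted as P:. The database name, logical file name, and path are all placeholders of mine, not values from any real system.

```sql
-- Hypothetical names throughout: SalesDB, SalesDB_DAXlog, and the P: drive
-- (assumed here to be a DAX formatted PMEM volume).
ALTER DATABASE SalesDB
ADD LOG FILE
(
    NAME = SalesDB_DAXlog,
    FILENAME = N'P:\SQLLog\SalesDB_DAXlog.ldf',
    SIZE = 20MB   -- matches the roughly 20MB per database mentioned above
);
```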

General restrictions/potential issues with deploying NVDIMM-N PMEM (not specific to any version of Windows Server or SQL Server) include:

- It occupies memory slots that could otherwise be used by DRAM, thereby reducing the total memory capacity on the server
- It’s not compatible with LRDIMMs

HP now makes NVDIMMs that operate at two different speeds. If using NVDIMMs that are slower than DRAM, all memory on the server will run at the slower speed. HP Gen10 servers support NVDIMMs that run at 2666MHz, which matches the upper clock speed of RDIMMs (which they are compatible with). In that case, there should not be any server-level reduction in memory speed.

PMEM can be formatted as block storage – which will always navigate the storage stack – or as DAX (Direct Access) – which bypasses the storage stack. PMEM formatted as DAX can be considerably faster than when formatted as block storage (see section on TDE below, about DAX formatted PMEM still navigating the storage stack). Files placed on a DAX formatted volume become “memory-mapped”, and are byte-addressable.

Intel Optane DC Persistent Memory

Intel has recently joined the field, offering PMEM in the form of Intel Optane DC Persistent Memory, which has capacities large enough to host database files. It can operate in three modes:

- Memory mode – in this mode, Optane will not be persistent – data will be lost if there is a power failure or server shutdown/boot. Actual DRAM on the server is used as a hot tier, with the memory capacity seen by the OS equivalent to the amount of Optane PMEM on the server.
- App Direct – this mode is used to persist data without navigating the storage stack, in conjunction with an application designed to take advantage of it (like SQL Server).
- Mixed mode – allocate some capacity to memory mode and some to App Direct mode.

At MS Ignite 2018, Cosmos Darwin demonstrated a reference architecture that used Intel Optane DC, and it achieved almost 14 million IOPS (the Ignite video can be seen here).

AnandTech reviewed a 512GB Intel Optane (Apache Pass) module here. Another thing to be aware of is that in order to use Intel Optane modules, your server must be running Cascade Lake CPUs (2nd Generation Intel Xeon Scalable processors, referred to below as Xeon Gen 2).

SQL version support for PMEM

SQL 2017 on Windows Server 2016 behaves the same as SQL 2016 on Windows Server 2016 – “tail of the log” is supported. However, there is no support for PMEM with SQL 2017 on supported Linux distributions (except as a traditional block store). SQL 2019 on Linux supports what’s known as “enlightenment”, which allows us to place data and log files on DAX formatted volumes, thereby reducing latency considerably. SQL 2019 on Linux also supports “tail of the log”.

To recap, for NVDIMM-N and Optane:

- Windows Server 2016/Windows Server 2019 tail of the log: supported for SQL 2016, SQL 2017, and SQL 2019
- Windows Server enlightenment: not available
- Linux tail of the log: supported on SQL 2019
- Linux enlightenment: supported for data and log files on SQL 2019

Hybrid Buffer Pool

As of SQL 2019 CTP 3.0, PMEM can be used with SQL Server 2019 running on Windows Server 2019 or supported Linux distributions to create what’s known as a Hybrid Buffer Pool. Think of it as a combination of traditional buffer pool memory (but for clean pages only), plus memory-mapped files. If the pages are clean, the engine will either reference the relevant pages in the traditional area of the hybrid buffer pool, or memory-mapped files on PMEM. This will be transparent to the user and/or application, but DBAs and application developers should be aware of the potential repercussions (the documentation for the Hybrid Buffer Pool can be found here).

Earlier in this post, I mentioned that TDE is not compatible with PMEM for Windows Server 2016, for which the only PMEM use case was tail of the log. However, if you use the Hybrid Buffer Pool, it’s now possible to use TDE if PMEM is installed (for both Windows Server 2019 and supported Linux distributions).

If we use enlightenment, data and log files would be memory-mapped, circumventing the storage stack – BUT – if you then enable TDE, even though the files are memory-mapped, they will be accessed as a block device, which requires navigating the storage stack. In this scenario, it might not make sense to have placed your data files on an enlightened device. But this should be verified through rigorous testing.

In addition to TDE, other features/settings that cause SQL Server to navigate the storage stack for DAX formatted PMEM are:

- torn page detection as the page verification option
- anything that uses the persistent version store (the only feature I’m aware of in SQL 2019 that uses PVS is Accelerated Database Recovery)
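A quick way to spot databases affected by the settings above is to check them in sys.databases. This is only a sketch: it assumes SQL Server 2019, where the is_accelerated_database_recovery_on column is available, and it reports the settings without proving how any particular file is actually being accessed.

```sql
-- Databases with at least one setting that forces DAX formatted PMEM
-- to be accessed through the storage stack.
SELECT
    name,
    is_encrypted,                         -- 1 = TDE enabled
    page_verify_option_desc,              -- NONE, TORN_PAGE_DETECTION, or CHECKSUM
    is_accelerated_database_recovery_on   -- 1 = ADR enabled (uses the persistent version store)
FROM sys.databases
WHERE is_encrypted = 1
   OR page_verify_option_desc = N'TORN_PAGE_DETECTION'
   OR is_accelerated_database_recovery_on = 1;
```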

Some astute readers might be wondering why you’d want to use enlightenment on Linux, and not just use HBP from the start. Keep in mind that as stated above, the Hybrid Buffer Pool is only for clean pages that don’t exist in the buffer pool, so that’s a limitation you’ll have to consider.

Another way to think of it is that the Hybrid Buffer Pool is ideal for a workload that is very heavy on the read side (normally causing buffer pool thrashing or needing to access lots of pages outside the buffer pool), while enlightenment can optimize both reads and writes.

It’s not known at this time if the Hybrid Buffer Pool will be an Enterprise-only feature.

Syntax

Enabling the Hybrid Buffer Pool can be done at the instance or database level.

Instance-level syntax:

```sql
ALTER SERVER CONFIGURATION SET MEMORY_OPTIMIZED HYBRID_BUFFER_POOL = ON;

ALTER SERVER CONFIGURATION SET MEMORY_OPTIMIZED HYBRID_BUFFER_POOL = OFF;
```

Database-level syntax:

```sql
ALTER DATABASE <databaseName> SET MEMORY_OPTIMIZED = ON;

ALTER DATABASE <databaseName> SET MEMORY_OPTIMIZED = OFF;
```

Despite the fact that “memory-optimized” appears in this syntax, it should be noted that enabling/disabling an instance or database for Hybrid Buffer Pool has nothing to do with the In-Memory OLTP feature, which the documentation may also refer to as “memory-optimized” databases.

Also potentially significant is the fact that the syntax for enabling/disabling Hybrid Buffer Pool may change before RTM.
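To confirm the current state after running the statements above, the catalog views can be queried. This sketch uses the view and column names as I understand them from the SQL Server 2019 documentation; like the syntax itself, they should be treated as subject to change before RTM.

```sql
-- Instance level: is the Hybrid Buffer Pool configured, and is it currently enabled?
SELECT is_configured, is_enabled
FROM sys.server_memory_optimized_hybrid_buffer_pool_configuration;

-- Database level: which databases have the setting turned on?
SELECT name, is_memory_optimized_enabled
FROM sys.databases;
```

If is_configured and is_enabled differ, the instance-level change has probably not taken effect yet; my understanding is that an instance restart may be required.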

Cost

If you check the pricing on the web, you can see that 128GB Optane PMEM costs around $2,000, 256GB costs almost $7,000, and 512GB costs over $21,000. And don’t forget, that does not include the cost of Xeon Gen 2 CPUs, which are required.
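To put those prices in perspective, the cost per GB can be worked out directly. The figures below are the rounded prices quoted above, so the results are approximations only.

```sql
-- Approximate module prices as quoted above (USD), rounded.
SELECT
    capacity_gb,
    approx_price_usd,
    CAST(approx_price_usd * 1.0 / capacity_gb AS DECIMAL(10, 2)) AS approx_usd_per_gb
FROM (VALUES
        (128,  2000),
        (256,  7000),
        (512, 21000)
     ) AS optane (capacity_gb, approx_price_usd)
ORDER BY capacity_gb;
```

That works out to roughly $16, $27, and $41 per GB, so the price per GB climbs with module size rather than falling.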

Xeon Gen2 processors are listed here, with core counts from 4 to 56.

Supermicro supports Xeon Gen2 CPUs, and pricing for one of their servers can be seen here:

At that link, support for larger amounts of memory costs between $21,000 and $42,000 per CPU, and the fastest CPUs have a very high core count (often 24 to 56 cores).

When you add up the cost of PMEM, the required Xeon Gen 2 CPUs, and SQL Server licensing, adoption of Optane Persistent memory may not be feasible for a lot of workloads that might benefit from its usage. You might start asking yourself if using NVMe storage and more memory would be a better fit budget-wise.

Availability Groups in general

For SQL Server workloads with extremely high throughput requirements that also require HA (and which workloads don’t?), it’s likely that PMEM will expose the fact that HA underpinnings can be a bottleneck, specifically for synchronous Availability Groups. This may even prevent adoption of PMEM until a suitable HA transport is available. To my knowledge, no database vendors currently have a solution to this problem; PMEM can be so blazingly fast that everything else in the chain becomes a bottleneck, including networking and HA transport. I have been advised that push mode RDMA might be a solution on the networking side of things, but I don’t believe that will be available for a while. Allan Hirt blogged about using RDMA for AGs here.

Due to the way the log transport mechanism is architected, PMEM usage with tail of the log for a database that belongs to an Availability Group is supported on secondary replicas only.

That means in order to achieve the same performance in case of failover, you would have to install PMEM on all replicas, in case they must operate as a secondary replica at some point. This would potentially drive up the price of adopting PMEM with Availability Groups and FCIs, especially if using Optane PMEM.

Availability Groups, enlightenment, and Hybrid Buffer Pool

On supported platforms (Linux), both enlightenment and Hybrid Buffer Pool are supported.

In-Memory OLTP and Enlightenment

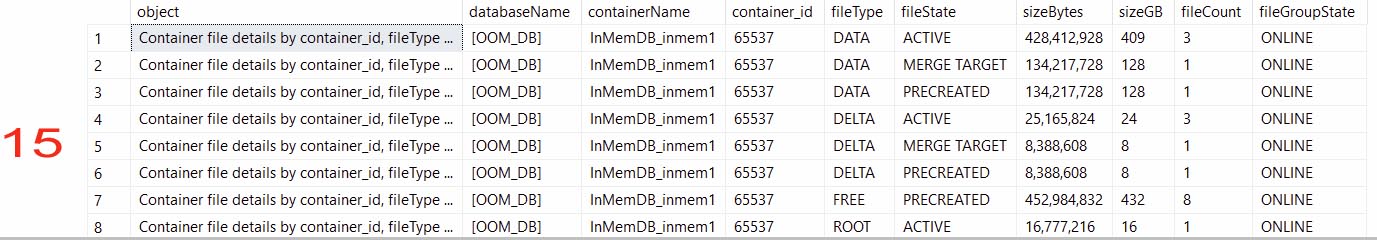

As of this writing, enlightenment will not be supported for data/delta files (also known as checkpoint file pairs) for the In-Memory OLTP feature.

Gratitude

In November of 2018, PMEM – as it pertains to SQL Server – was owned by Argenis Fernandez of the SQL Server Tiger Team, and I am greatly indebted to him for taking the time to explain the nuances of SQL Server interaction with PMEM, and how Microsoft envisions its usage in SQL 2019. I must also thank my friend Brian Carrig, who currently owns PMEM on the Tiger Team, for his patience in confirming what I believed to be correct, reorienting me when I was off-base, and answering my endless questions. Without either of these generous and brilliant technologists, this blog post doesn’t get written (thanks, gents!).

Finale

These are exciting times for DBAs, architects, and developers, and the evolution of PMEM provides choices that were not envisioned even just a few years ago. I look forward to seeing how this space evolves as the technology matures and applications are architected to leverage it. SQL Server integration with PMEM is a very forward-looking move on the part of the SQL Server team.
