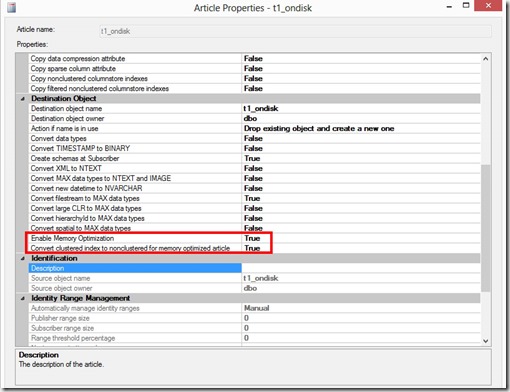

This blog post demonstrates various approaches when using native compilation to insert rows into parent/child tables.

First, let’s create tables named Parent and Child, and relate them with a FOREIGN KEY constraint. Note that the Parent table uses the IDENTITY property for the PRIMARY KEY column.

DROP TABLE IF EXISTS dbo.Child

GO

DROP TABLE IF EXISTS dbo.Parent

GO

CREATE TABLE dbo.Parent

(

ParentID INT IDENTITY PRIMARY KEY NONCLUSTERED

,Name CHAR(50) NOT NULL

,Description CHAR(100) NOT NULL

)

WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_AND_DATA)

GO

CREATE TABLE dbo.Child

(

ChildID INT IDENTITY PRIMARY KEY NONCLUSTERED

,ParentID INT NOT NULL FOREIGN KEY REFERENCES dbo.Parent (ParentID) INDEX IX_Child_ParentID

,Name CHAR(50) NOT NULL

,Description CHAR(100) NOT NULL

)

WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_AND_DATA)

GO

Next, we attempt to create a natively compiled procedure that performs an INSERT to the Parent table, and tries to reference the key value we just inserted, with @@IDENTITY.

Scenario 1

CREATE OR ALTER PROCEDURE dbo.Proc_InsertParentAndChild

WITH NATIVE_COMPILATION, SCHEMABINDING

AS

BEGIN ATOMIC

WITH (TRANSACTION ISOLATION LEVEL = SNAPSHOT, LANGUAGE = N'English')

INSERT dbo.Parent

(

Name

,Description

)

VALUES

(

'Parent1'

,'SomeDescription'

)

DECLARE @NewParentID INT

SELECT @NewParentID = SCOPE_IDENTITY()

INSERT dbo.Child

(

ParentID

,Name

,Description

)

VALUES

(

@NewParentID

,'Child1'

,'SomeDescription'

)

END

GO

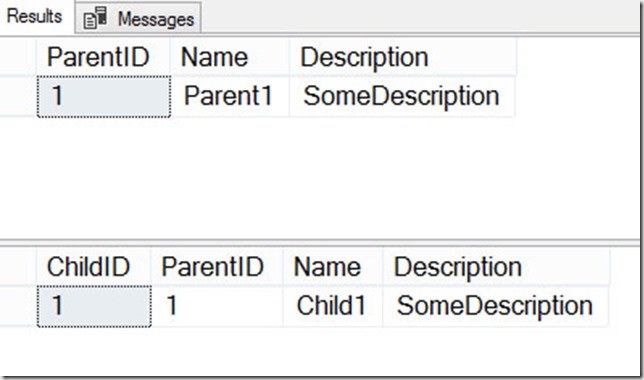

EXEC dbo.Proc_InsertParentAndChild

SELECT *

FROM Parent

ORDER BY ParentID

SELECT *

FROM Child

ORDER BY ParentID

GO

This works, but there are other approaches to solving this problem.

Next, we’ll try to DECLARE a table variable, and OUTPUT the new key value.

Scenario 2

CREATE OR ALTER PROCEDURE dbo.Proc_InsertParentAndChild

WITH NATIVE_COMPILATION, SCHEMABINDING

AS

BEGIN ATOMIC

WITH (TRANSACTION ISOLATION LEVEL = SNAPSHOT, LANGUAGE = N'English')

DECLARE @NewParentID TABLE (ParentID INT NOT NULL)

INSERT dbo.Parent

(

Name

,Description

)

OUTPUT Inserted.ParentID INTO @NewParentID

/*

Msg 12305, Level 16, State 24, Procedure Proc_InsertParentAndChild, Line 7 [Batch Start Line 64]

Inline table variables are not supported with natively compiled modules.

*/

VALUES

(

'Parent1'

,'SomeDescription'

)

END

GO

But again we have issues with unsupported T-SQL.

Now we’ll try creating a memory-optimized table variable outside the native procedure, and then declare a variable of that type inside the native procedure.

Scenario 3

CREATE TYPE dbo.ID_Table AS TABLE

(

ParentID INT NOT NULL PRIMARY KEY NONCLUSTERED

)

WITH (MEMORY_OPTIMIZED = ON)

GO

CREATE OR ALTER PROCEDURE dbo.Proc_InsertParentAndChild

WITH NATIVE_COMPILATION, SCHEMABINDING

AS

BEGIN ATOMIC

WITH (TRANSACTION ISOLATION LEVEL = SNAPSHOT, LANGUAGE = N'English')

DECLARE @NewParentID dbo.ID_Table

INSERT dbo.Parent

(

Name

,Description

)

OUTPUT Inserted.ParentID INTO @NewParentID

VALUES

(

'Parent1'

,'SomeDescription'

)

DECLARE @NewParentValue INT = (SELECT ParentID FROM @NewParentID)

INSERT dbo.Child

(

ParentID

,Name

,Description

)

VALUES

(

@NewParentValue

,'Child1'

,'SomeDescriptioin'

)

END

GO

This compiles, so now let’s test it.

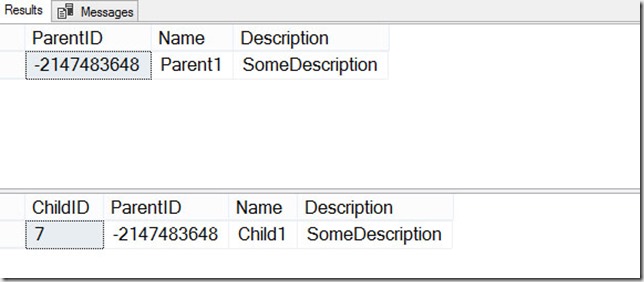

EXEC dbo.Proc_InsertParentAndChild SELECT * FROM Parent ORDER BY ParentID SELECT * FROM Child ORDER BY ParentID GO

This works great, but for completeness, we should test other possibilities.

This time, we’ll recreate the tables, but we’ll leave off the IDENTITY property for the Parent table. Instead of IDENTITY, we’ll create a SEQUENCE, and attempt to generate the next value within the native module.

Scenario 4

DROP PROCEDURE IF EXISTS dbo.Proc_InsertParentAndChild

go

DROP TABLE IF EXISTS dbo.Child

GO

DROP TABLE IF EXISTS dbo.Parent

GO

CREATE TABLE dbo.Parent

(

ParentID INT PRIMARY KEY NONCLUSTERED – no IDENTITY property used here!

,Name CHAR(50) NOT NULL

,Description CHAR(100) NOT NULL

)

WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_AND_DATA)

GO

CREATE TABLE dbo.Child

(

ChildID INT IDENTITY PRIMARY KEY NONCLUSTERED

,ParentID INT NOT NULL FOREIGN KEY REFERENCES dbo.Parent (ParentID) INDEX IX_Child_ParentID

,Name CHAR(50) NOT NULL

,Description CHAR(100) NOT NULL

)

WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_AND_DATA)

GO

CREATE SEQUENCE dbo.ParentSequence AS INT

GO

CREATE OR ALTER PROCEDURE dbo.Proc_InsertParentAndChild

WITH NATIVE_COMPILATION, SCHEMABINDING

AS

BEGIN ATOMIC

WITH (TRANSACTION ISOLATION LEVEL = SNAPSHOT, LANGUAGE = N'English')

DECLARE @NextParentSequence INT = NEXT VALUE FOR dbo.ParentSequence

INSERT dbo.Parent

(

ParentID

,Name

,Description

)

VALUES

(

@NextParentSequence

,'Parent1'

,'SomeDescription'

)

INSERT dbo.Child

(

ParentID

,Name

,Description

)

VALUES

(

@NextParentSequence

,'Child1'

,'SomeDescriptioin'

)

END

GO

/*

Msg 10794, Level 16, State 72, Procedure Proc_InsertParentAndChild, Line 19 [Batch Start Line 176]

The operator 'NEXT VALUE FOR' is not supported with natively compiled modules.

*/

But this fails, because as the error states, we can’t use NEXT VALUE FOR within native modules.

Scenario 5

How about if we generate the next value for the sequence outside the module, and pass that value?

Let’s see —

CREATE OR ALTER PROCEDURE dbo.Proc_InsertParentAndChild

(

@NewParentValue INT

)

WITH NATIVE_COMPILATION, SCHEMABINDING

AS

BEGIN ATOMIC

WITH (TRANSACTION ISOLATION LEVEL = SNAPSHOT, LANGUAGE = N'English')

INSERT dbo.Parent

(

ParentID

,Name

,Description

)

VALUES

(

@NewParentValue

,'Parent1' -- Name - char(50)

,'SomeDescription' -- Description - char(100)

)

INSERT dbo.Child

(

ParentID

,Name

,Description

)

VALUES

(

@NewParentValue

,'Child1'

,'SomeDescriptioin'

)

END

GO

SELECT *

FROM Parent

ORDER BY ParentID

SELECT *

FROM Child

ORDER BY ParentID

DECLARE @NextParentSequence INT

SELECT @NextParentSequence = NEXT VALUE FOR dbo.ParentSequence

EXEC dbo.Proc_InsertParentAndChild @NextParentSequence

SELECT *

FROM Parent

ORDER BY ParentID

SELECT *

FROM Child

ORDER BY ParentID

GO

This also works, so we’ll add it to our arsenal. But there’s one weird thing – the value that was inserted into the Parent table is –2147483647, which is probably not what we intended. So we’ll have to tidy up our SEQUENCE a bit.

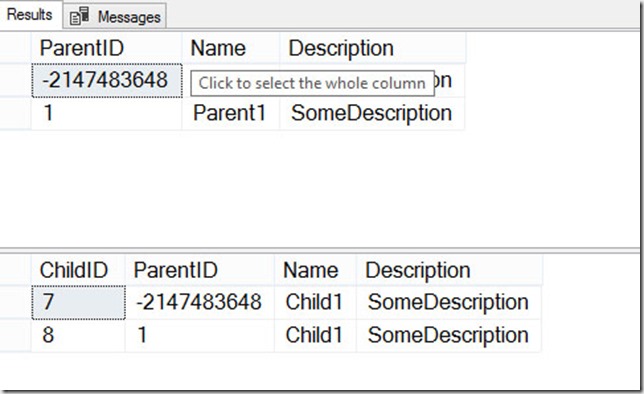

DROP SEQUENCE dbo.ParentSequence GO CREATE SEQUENCE dbo.ParentSequence AS INT START WITH 1 GO DECLARE @NextParentSequence INT SELECT @NextParentSequence = NEXT VALUE FOR dbo.ParentSequence EXEC dbo.Proc_InsertParentAndChild @NextParentSequence SELECT * FROM Parent ORDER BY ParentID SELECT * FROM Child ORDER BY ParentID

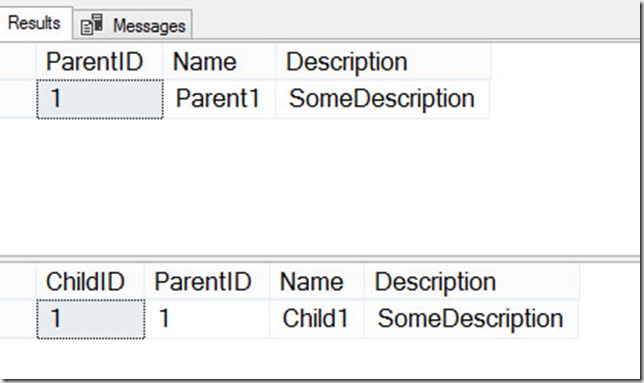

Everything looks good now:

In this post, we have verified three different ways to successfully insert into parent/child records, when using native compilation.