Earlier parts of this series can be found here:

Part 1: The Foundation

Part 2: Checkpoint File Pairs

Part 3: OOS (Out of Storage)

This post will cover memory requirements and usage, and what happens if you run actually reach OOM, also known as ”Out Of Memory”, a condition that strikes fear in the hearts of DBAs supporting memory-optimized databases. We’ll also cover CPU-bound conditions.

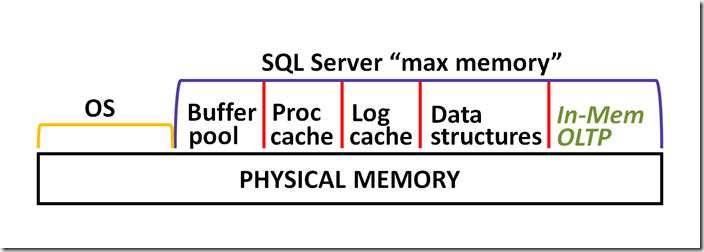

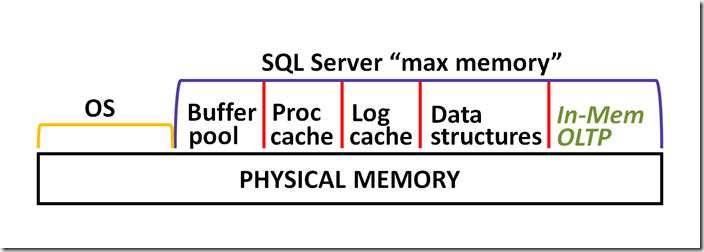

How memory is allocated to the In-Memory OLTP engine

At a high level, the memory that’s allocated to the In-Memory OLTP engine comes from the SQL Server ‘max memory’ setting, as does everything else within SQL Server. But beneath that level, we need to be aware of memory pools.

The pool that can be used for allocating memory to the In-Memory OLTP engine depends on which edition you are running:

- if you are running Enterprise Edition, you can use Resource Governor to configure a Resource Pool. Memory-optimized databases can be bound to separate pools, or multiple databases can be bound to a single pool. If you don’t bind a memory-optimized database to a pool created with Resource Governor, then all memory allocations for In-Memory OLTP for that database comes from the Default pool.

- if you are NOT running Enterprise Edition, all memory for In-Memory OLTP is allocated from the Default pool.

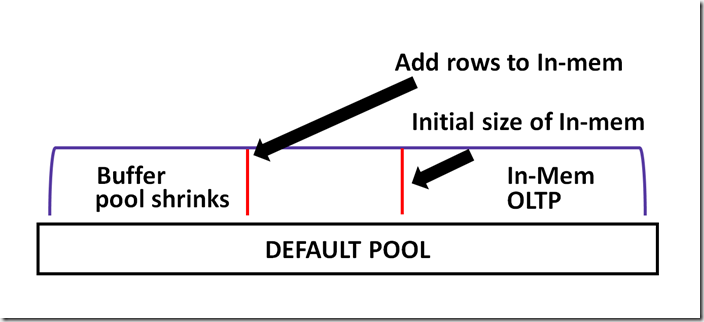

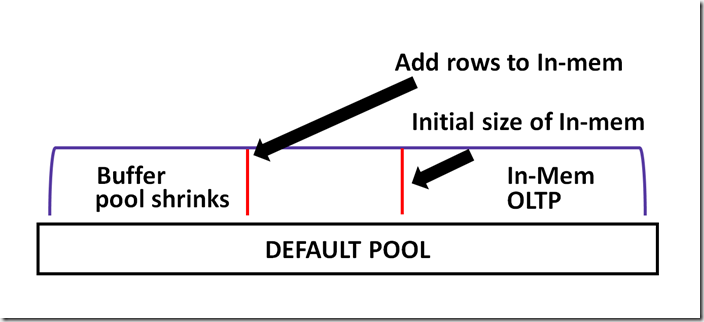

If using the Default pool, then as a result of deploying the In-Memory OLTP feature, there can be performance issues with on-disk workloads.

The following image shows that as we add rows to memory-optimized tables – and put pressure on the buffer pool – the buffer pool responds by shrinking, and that can affect disk-based workloads. If we then delete rows from memory-optimized tables, the buffer pool can expand. But what if we don’t delete rows from memory-optimized tables? Then the buffer pool will stay in its reduced state (or shrink even more), and that can cause problems due to buffer churn (continually having to do physical I/Os to retrieve pages from storage, for disk-based workloads).

Astute readers will consider using Buffer Pool Extensions (BPE), which is available in Standard Edition only. Yes, you could do that, but BPE retrieves a single 8K page at a time, and can actually make performance worse. And in case you’re wondering, no, it’s not possible to compress memory-optimized data that’s stored in memory. Think Windows will actually page out any of the memory allocated to In-Memory OLTP? That’s simply not possible.

Resource Governor

If you are running Enterprise Edition, then this problem gets solved by creating a resource pool. Now, to be clear, that doesn’t mean you can’t run out of memory for memory-optimized objects. It only means that your In-Memory workload can’t affect the on-disk workload, unless of course you configure the resource pool incorrectly. I’ve got a blog post on how to monitor resource pools here.

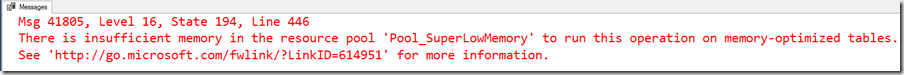

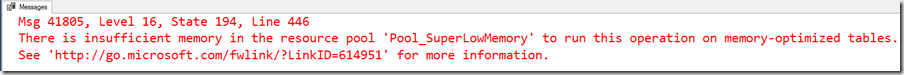

Let’s create a resource pool, with an artificially low upper bound, and insert rows until we hit the limit.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 |

<font size="3">CREATE RESOURCE POOL Pool_SuperLowMemory WITH ( MIN_MEMORY_PERCENT = 1 ,MAX_MEMORY_PERCENT = 1 ); GO ALTER RESOURCE GOVERNOR RECONFIGURE; GO — bind the database to the resource pool EXEC sp_xtp_bind_db_resource_pool ‘OOM_DB’, ‘Pool_SuperLowMemory’; GO USE master; GO ALTER DATABASE [OOM_DB] SET SINGLE_USER WITH ROLLBACK IMMEDIATE; ALTER DATABASE [OOM_DB] SET OFFLINE; GO ALTER DATABASE [OOM_DB] SET ONLINE; ALTER DATABASE [OOM_DB] SET MULTI_USER WITH ROLLBACK IMMEDIATE; GO USE OOM_DB; GO SET NOCOUNT ON; DECLARE @stringVal VARCHAR(8000) = REPLICATE(‘A’, 8000) INSERT dbo.InMemTable1 ( col01, col02, col03, col04, col05, col06, col07, col08, col09, col10,col11, col12, col13, col14,col15, col16, col17, col18, col19, col20 ) VALUES ( @stringVal, @stringVal, @stringVal, @stringVal, @stringVal, @stringVal, @stringVal, @stringVal, @stringVal ,@stringVal, @stringVal, @stringVal, @stringVal, @stringVal, @stringVal, @stringVal, @stringVal, @stringVal ,@stringVal, @stringVal ) GO 1000</font> |

On my server, I was able to INSERT 305 rows before the pool ran out of memory, and receiving error 41805:

Causes of OOM

What can cause a memory-optimized database to run out of memory? It could be that resource consumption (memory) exceeded:

- the relevant percentage of committed_target_kb from the sys.dm_os_sys_info DMV (explained in a moment)

- MAX_MEMORY_PERCENT value of a Resource Pool that the database is bound to (if running Enterprise Edition and using Resource Governor)

or:

- garbage collection is not operational (the purpose of GC is to reclaim memory consumed by stale row versions)

- updates to memory-optimized table variables caused row versions to be created, and because GC does not operate on table variables, you ran out of memory (for table variables that have a very large amount of rows)

The only thing that can prevent GC from working is a long running transaction.

committed_target_kb

We are supposed to base our belief of how much memory is available for our memory-optimized databases, upon committed_target_kb from the sys.dm_os_sys_info DMV. Memory available for In-Memory OLTP is expressed as a percentage of committed_target_kb, based on total system memory, which is detailed here. Prior to SQL 2016/SP1, the In-Memory OLTP feature was only supported on Enterprise Edition, and the amount of memory allocated to SQL Server was limited to what the operating system supported.

But in a post-SQL 2016/SP1 world, things are different, because the In-Memory OLTP feature is now supported on non-enterprise editions. This means that people will start deploying In-Memory OLTP on servers with a lot less memory than is possible with Enterprise, and therein lies a potential issue.

The problem is that committed_target_kb is a moving target.

From the documentation:

Applies to: SQL Server 2012 through SQL Server 2017.

Represents the amount of memory, in kilobytes (KB), that can be consumed by SQL Server memory manager. The target amount is calculated using a variety of inputs like:

– the current state of the system including its load

– the memory requested by current processes

– the amount of memory installed on the computer

– configuration parameters

If committed_target_kb is larger than committed_kb, the memory manager will try to obtain additional memory. If committed_target_kb is smaller than committed_kb, the memory manager will try to shrink the amount of memory committed. The committed_target_kb always includes stolen and reserved memory.

Those parts about “the current state of the system including its load” and “the memory requested by current processes” concern me. If there is x amount of memory available on a server, and you check the value of committed_target_kb when the server is “at rest”, then under load there might in fact be much less memory available. I believe this is one of the main causes of OOM for memory-optimized workloads, especially when people do a POC on under-provisioned machines (like laptops).

Database restore and recovery

The process of recovering a database is different for databases with durable memory-optimized data.

Step 1: the backup file is read, and the various types of of files are created. For example, all MD/NDF/LDF and data and delta files are created.

Step 2: data is copied from the backup into the files created in Step 1. If you restore a database WITH NORECOVERY, you have completed both Step 1 and Step 2

Step 3: For databases with durable memory-optimized data, there is one additional step, and that’s to stream data from the Checkpoint File Pairs (data/delta files) back into memory

It should be noted that if the backup contains both on-disk and memory-optimized tables, none of the on-disk data is available until all of the memory-optimized data has finished streaming. When restoring a backup – whether the database has memory-optimized data or not – the process short-circuits if there isn’t enough free space to create the files in Step 1. Unfortunately, no such validation of available memory is done for Step 3. That means you can spend a long time creating files on disk, then spend an additional lengthy amount of time streaming data to memory, only to find that you don’t have enough memory. If you think Microsoft should change this, please upvote my Connect item.

When data is streamed into memory, the wait type will be WAIT_XTP_RECOVERY.

The unwary DBA would logically think that the only time you can see WAIT_XTP_RECOVERY is when actually restoring a database with memory-optimized data, but unfortunately that’s not correct. The Microsoft documentation doesn’t list all of the possible “recovery events” that can cause restreaming, but through my own testing, I’ve come with the following list:

setting a database:

- OFFLINE

- READ_ONLY when it was READ_WRITE

- READ_WRITE when it was READ_ONLY

Also, setting Read Committed Snapshot Isolation ON or OFF, will cause restreaming.

Additionally, the speed of restreaming is directly influenced by the number of volumes that you have created containers on, and the IOPS available from those volumes.

Potential solutions to OOM

- Open a DAC (Dedicated Admin Connection). Then delete rows, and/or move data from memory to disk.

- Increase system memory

- If Garbage Collection for row versions is not operational (due to long running transactions), clear up those long-running transactions so that GC can proceed

If you attempt to move data from memory-optimized tables to disk-based tables, i.e. using SELECT INTO, please note that it’s possible to create schema for memory-optimized tables that you can’t simply migrate to disk.

For example, the following CREATE TABLE is perfectly legal for memory-optimized tables, but will fail for disk-based tables (and also fails if using SELECT * INTO on-disktable FROM in-memtable):

|

|

<font size="3">CREATE TABLE [dbo].[T1] ( [PKcol] [INT] IDENTITY(1, 1) NOT NULL ,[col2] CHAR(5000) NOT NULL ,[col3] CHAR(5000) NOT NULL ,[col4] CHAR(5000) NOT NULL ,[col5] CHAR(5000) NOT NULL ,[col6] CHAR(5000) NOT NULL ,[col7] CHAR(5000) NOT NULL PRIMARY KEY NONCLUSTERED ) WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_AND_DATA);</font> |

The ability to create tables like this is detailed at this link, with the relevant section being:

“…you can have a memory-optimized table with a row size > 8060 bytes, even when no column in the table uses a LOB type. There is no run-time limitation on the size of rows or the data in individual columns; this is part of the table definition.”

What happens if you hit OOM

So how does hitting OOM affect workloads for memory-optimized databases?

SELECT still works, and also DELETE and DROP, but of course INSERT and UPDATE will fail.

CPU bound

Last but not least, I wanted to touch on potential CPU issues for memory-optimized databases. Database recovery can be CPU bound under the following circumstances:

- many indexes on large memory-optimized tables (2014, 2016)

- too many LOB columns (2016+)

- incorrect bucket count set for HASH indexes (2014, 2016, 2017)

The first item in this list, “many indexes on large memory-optimized tables (2014, 2016)” has supposedly been addressed in SQL 2017.

LOB columns are actually stored as separate memory-optimized tables, and as noted by Dmitri Korotkevitch (blog) in this post, can impact performance.

The “incorrect bucket size for HASH indexes” issue persists to this day. If the bucket count is too low, there will be many sets of key columns that hash to the same value, increasing the chain length, and having not only a terrible effect on performance in general, but database recovery in particular.

Wrapping up

Hopefully this mini-series about resource consumption for memory-optimized workloads has given you a clear understanding of why Microsoft recommends the following:

- 2x data set in memory for starting memory allocation (only for In-Memory, does not include memory for on-disk workload)

- 3x workload IOPS from disks where containers are stored (handles operational workload plus read/write File Merge workload)

- 4x durable memory-optimized data size for initial storage footprint

These are rough guides, but should be observed at first, and then tuned as required.

This concludes the series on resources issues for In-Memory OLTP.

![image_thumb[389]_thumb image_thumb[389]_thumb](http://nedotter.com/wp-content/uploads/2017/11/image_thumb389_thumb_thumb.png)

![image_thumb[26] image_thumb[26]](http://nedotter.com/wp-content/uploads/2017/11/image_thumb26_thumb.png)

![image_thumb[29] image_thumb[29]](http://nedotter.com/wp-content/uploads/2017/11/image_thumb29_thumb.png)

![image_thumb[31] image_thumb[31]](http://nedotter.com/wp-content/uploads/2017/11/image_thumb31_thumb.png)

![image_thumb[33] image_thumb[33]](http://nedotter.com/wp-content/uploads/2017/11/image_thumb33_thumb.png)