Transactional replication hasn’t changed much since it was rewritten for SQL 2005. However, with the release of SQL 2014, there is at least one new possibility: memory-optimized tables at the subscriber.

With the release of SQL 2016, some of the restrictions for memory-optimized subscriber tables have been lifted:

- snapshot schema files that create the memory-optimized tables no longer have to be manually modified (but see “Gotcha #6, silent schema killer” below)

- tables replicated to memory-optimized tables on a subscriber are no longer limited to the SQL 2014 max row length of 8060 bytes for memory-optimized tables. This seems sort of moot, because published tables cannot themselves be memory-optimized, and are therefore still restricted to 8060 bytes. However, if for some reason you needed to add a lot of columns to the subscriber table that cause it to be greater than 8060 bytes, you can do it. Note that there is no limit on how large a row can be for memory-optimized tables in SQL 2016. The following statement is perfectly valid:

```sql
CREATE TABLE [dbo].[T01]
(
    [PKcol] [INT] IDENTITY(1, 1) NOT NULL
   ,[col2] CHAR(5000) NOT NULL
   ,[col3] CHAR(5000) NOT NULL
   ,[col4] CHAR(5000) NOT NULL
   ,[col5] CHAR(5000) NOT NULL
   ,[col6] CHAR(5000) NOT NULL
   ,[col7] CHAR(5000) NOT NULL
   ,PRIMARY KEY NONCLUSTERED HASH ( [PKcol] ) WITH (BUCKET_COUNT = 1000)
)
WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_AND_DATA);
```

Why would you want to use memory-optimized tables in a subscriber database? There can only be one answer: speed.

Subscriber latency due to data volume can result from any of the following, individually or in combination:

- excessive logging – changes to indexes are not logged for memory-optimized tables, and in general logging is much more efficient than for traditional/on-disk tables

- locking – no locks are taken for DML statements that touch memory-optimized tables

- blocking – blocking as a result of a transaction making changes to rows is not possible for memory-optimized tables

- latching – no latches are taken on memory-optimized tables

The design of the In-Memory OLTP engine can alleviate latency due to these issues – BUT – before you start jumping for joy, you’ll need to be aware of the impact of deploying In-Memory OLTP in general.

DBAs love to tune things (indexes, queries, etc.), and subscriber tables are no exception. Until SQL 2014, when memory-optimized subscriber tables were introduced, some of the things that DBAs tuned on the subscriber included:

- compression settings

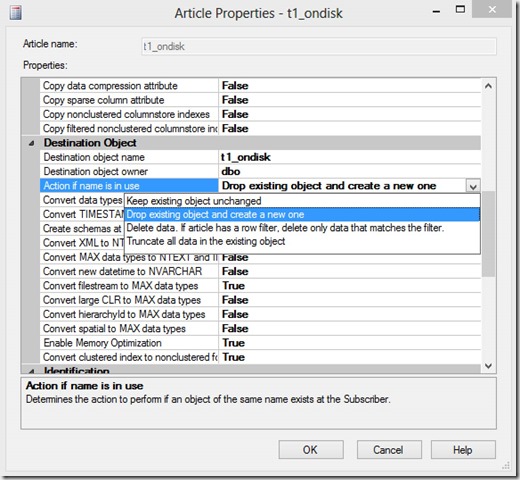

- different ways that the data in subscriber tables can be reinitialized, i.e. TRUNCATE TABLE, DELETE, DROP/CREATE table, or do nothing (these choices are for the ‘Action if name is in use’ section of the ‘Destination Object’, see the next screen shot).

- custom indexes

- snapshot isolation

For reinitializing, being able to use TRUNCATE TABLE is a great benefit, because all custom indexes and compression settings are retained for the destination table. If you choose drop/create, all compression settings and custom indexing must be reapplied to the subscriber table upon (re)initialization.

Deployment considerations

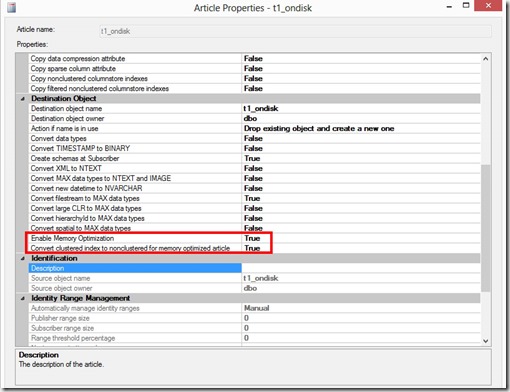

Article properties

On the dialog for Article Properties, you’ll need to make sure that both “Enable Memory Optimization” and “Convert clustered index to nonclustered index for memory optimized article” are set to “True”. Despite what you might have read, there is no concept of a “clustered” index for a memory-optimized table. If you have a clustered index on the published table, the DDL will fail when applied on the subscriber unless you set this option.
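For scripted deployments, the two dialog settings appear to map to bits in the article's schema option. The sketch below assumes the values listed in the `sp_addarticle` documentation (0x40000000000 to let the snapshot agent script a memory-optimized table, 0x80000000000 to convert the clustered index to nonclustered); verify both against the documentation for your build before relying on them. The publication name `MyPublication` and article name `T01` are placeholders, not names from this post.

```sql
-- Run at the publisher, in the publication database.
-- Assumption: bit values are taken from the sp_addarticle documentation; verify for your build.
DECLARE @publication SYSNAME = N'MyPublication';  -- hypothetical publication name
DECLARE @article     SYSNAME = N'T01';            -- hypothetical article name
DECLARE @current BIGINT, @new BIGINT, @new_hex NVARCHAR(18);

-- Read the article's current schema option (stored as binary(8)).
SELECT @current = CAST(a.schema_option AS BIGINT)
FROM dbo.sysarticles AS a
JOIN dbo.syspublications AS p ON p.pubid = a.pubid
WHERE p.name = @publication
  AND a.name = @article;

IF @current IS NULL
BEGIN
    RAISERROR(N'Article not found in the publication.', 16, 1);
    RETURN;
END;

-- Turn on both memory-optimization bits without disturbing the existing ones.
SET @new = @current
         | CAST(0x0000040000000000 AS BIGINT)   -- enable memory optimization
         | CAST(0x0000080000000000 AS BIGINT);  -- clustered index -> nonclustered

-- sp_changearticle expects the value as a hex string such as '0x00000C000803509F'.
SET @new_hex = CONVERT(NVARCHAR(18), CAST(@new AS BINARY(8)), 1);

EXEC sp_changearticle
     @publication = @publication,
     @article = @article,
     @property = N'schema_option',
     @value = @new_hex,
     @force_invalidate_snapshot = 1,
     @force_reinit_subscription = 1;
```

Because the change invalidates the existing snapshot, a new snapshot has to be generated before the subscriber picks up the new table definition.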

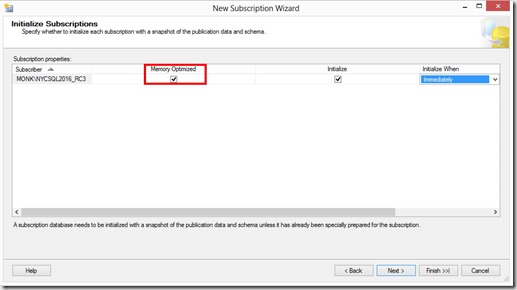

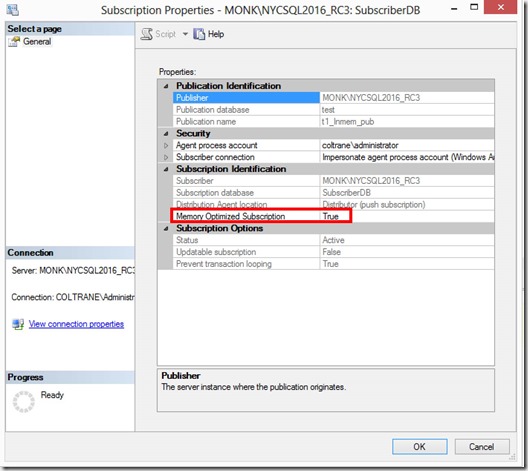

Subscription Properties

The Subscription Properties can be configured when initially creating the subscription:

or from the Subscription Properties dialog, if the subscription already exists:

Gotcha #1, DML support

Reinitialization is likely to happen at some point in the future, and so we’ll need to make the correct choice for “Action if name is in use”, on the same Article Properties dialog.

TRUNCATE TABLE is not supported for memory-optimized tables. If the table must be dropped, you’ll have to reapply scripts to handle any subscriber-level customization.
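If you prefer to script this choice rather than use the dialog, the same setting is exposed as the `pre_creation_cmd` article property. The following is a minimal sketch that switches an article to DELETE, which keeps the subscriber table (and its customizations) in place; the publication and article names are placeholders.

```sql
-- Run at the publisher, in the publication database.
-- Valid values for pre_creation_cmd are none, delete, drop and truncate;
-- truncate cannot be used when the destination table is memory-optimized.
EXEC sp_changearticle
     @publication = N'MyPublication',   -- hypothetical publication name
     @article = N'T01',                 -- hypothetical article name
     @property = N'pre_creation_cmd',
     @value = N'delete',
     @force_invalidate_snapshot = 1;    -- acknowledges that a new snapshot is required
```

Keep in mind that DELETE is fully logged, so reinitializing a large memory-optimized table this way will take longer than a truncate would on an on-disk table.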

Gotcha #2, compression

On-disk tables are stored in pages. Memory-optimized tables are not stored in pages, and therefore don’t support any form of compression (columnstore indexes on memory-optimized tables create a separate compressed copy of the rows, but the primary data source remains the rows in memory).

Gotcha #3, potential WRITELOG bottleneck

All DML operations on durable memory-optimized tables are fully logged, regardless of database-level recovery settings (for more details, see my post on “Optimizing Data Load” here). If deploying In-Memory OLTP solves the latency issues your app was experiencing, WRITELOG is likely to become one of the top waits. This prevents realizing the full potential of deploying In-Memory OLTP, but fear not – as of SQL 2016/SP1, NVDIMM is supported for the transaction log, reducing/eliminating the log as a performance bottleneck. See the link here for more detail.
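One way to see whether the log has become the bottleneck is to look at the accumulated WRITELOG waits and the write stalls on the log file. This is a rough sketch run in the subscriber database; the numbers are cumulative since the last restart (or since wait stats were cleared), so compare two samples taken some time apart rather than reading a single result.

```sql
-- Accumulated WRITELOG waits for the instance.
SELECT ws.wait_type,
       ws.waiting_tasks_count,
       ws.wait_time_ms,
       ws.wait_time_ms * 1.0 / NULLIF(ws.waiting_tasks_count, 0) AS avg_wait_ms
FROM sys.dm_os_wait_stats AS ws
WHERE ws.wait_type = N'WRITELOG';

-- Write latency on the transaction log file(s) of the current database.
SELECT df.name AS log_file_name,
       vfs.num_of_writes,
       vfs.io_stall_write_ms,
       vfs.io_stall_write_ms * 1.0 / NULLIF(vfs.num_of_writes, 0) AS avg_write_stall_ms
FROM sys.dm_io_virtual_file_stats(DB_ID(), NULL) AS vfs
JOIN sys.database_files AS df ON df.file_id = vfs.file_id
WHERE df.type = 1;  -- 1 = log file
```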

Gotcha #4, impact on RTO

If by chance you must restore a subscriber database that contains a lot of durable memory-optimized data (I realize that “a lot” is subjective), RTO will be affected. That’s because the number and placement of containers has a significant effect on the amount of time required to recover a database that contains durable memory-optimized data. See my post “In-Memory OLTP: The moving target that is RTO” here for more details. You might also be interested in “Backup and Recovery for SQL Server databases that contain durable memory-optimized data” here.
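Since the number and placement of containers matters, it helps to know what you currently have. A small sketch that lists the containers of the memory-optimized filegroup in the current database, with their physical paths:

```sql
-- Run in the subscriber database.
-- Each row is one container; physical_name shows which volume it lives on.
SELECT fg.name AS filegroup_name,
       df.name AS container_name,
       df.physical_name,
       df.state_desc
FROM sys.filegroups AS fg
JOIN sys.database_files AS df ON df.data_space_id = fg.data_space_id
WHERE fg.type = N'FX'   -- memory-optimized tables filegroup
ORDER BY df.physical_name;
```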

Gotcha #5, resource consumption

An UPDATE on a memory-optimized table is performed as a DELETE plus an INSERT: the INSERT creates a new row version, which becomes the current version. Older versions consume additional memory, and must be retained as long as any processes that reference them are still executing (like queries running on the subscriber). It’s possible to have long chains of versioned rows, and that means your environment might require additional memory. For a detailed explanation of row versioning, including the Garbage Collection process, see my post on “Row version lifecycle for In-Memory OLTP” here. There are additional considerations if your workload uses memory-optimized table variables (also detailed in that post).
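To get a feel for how much memory the subscriber tables actually use, you can query `sys.dm_db_xtp_table_memory_stats`. The sketch below reports per-table figures for user tables in the current database; a large gap between allocated and used memory on a busy table can be a hint that old row versions are waiting for garbage collection, although it is not proof on its own.

```sql
-- Run in the subscriber database.
SELECT SCHEMA_NAME(t.schema_id) AS schema_name,
       t.name AS table_name,
       ms.memory_allocated_for_table_kb,
       ms.memory_used_by_table_kb,
       ms.memory_allocated_for_indexes_kb,
       ms.memory_used_by_indexes_kb
FROM sys.dm_db_xtp_table_memory_stats AS ms
JOIN sys.tables AS t ON t.object_id = ms.object_id   -- user tables only
ORDER BY ms.memory_allocated_for_table_kb DESC;
```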

Gotcha #6, silent schema killer

Let’s say you’ve done your homework, and that your configuration for memory-optimized subscriber tables is perfect. There is additional database configuration that must be done to support memory-optimized tables, and without that, your subscriber tables will not be initialized/reinitialized as memory-optimized (they’ll still be created on the subscriber, but will be traditional/on-disk tables). In the stored procedure that executes on the subscriber, there is validation to determine if there is a memory-optimized filegroup for the subscriber database (there are other conditions, but this is the one we’re interested in).

```sql
IF NOT EXISTS(select top 1 1 from sys.filegroups FG where type = 'FX')
```

If you look up the definition of sys.filegroups, it relates to sys.data_spaces, and there we see a column named “type” that can have the following values:

- FG = Filegroup

- FD = FILESTREAM data filegroup

- FX = Memory-optimized tables filegroup

- PS = Partition scheme

If the query finds a filegroup of type “FX”, the table is created as memory-optimized, and if not (along with some other conditions), it’s created as a traditional/on-disk table.

While it seems obvious that you should have already configured your database to have a memory-optimized filegroup, if you skipped that step, there is no warning, error, or other type of message received, stating that the subscriber database is not memory-optimized. Of course, simply having a memory-optimized filegroup is not enough to create memory-optimized tables, because you must also have containers that belong to that memory-optimized filegroup. The “memory-optimized filegroup exists” validation will pass, but the (re)initialization will fail because no containers exist, and you’ll receive an error about that.
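Because nothing warns you, it is worth checking the subscriber database yourself, both before and after (re)initialization. The following sketch does that in three separate steps; the database name `SubscriberDB`, the filegroup and container names, and the path are all placeholders that you would replace with your own.

```sql
-- Step 1: run in the subscriber database BEFORE (re)initializing.
--   No rows returned    -> no memory-optimized filegroup; tables will silently be created on disk.
--   container_count = 0 -> filegroup exists but has no container; (re)initialization will fail.
SELECT fg.name AS filegroup_name,
       COUNT(df.file_id) AS container_count
FROM sys.filegroups AS fg
LEFT JOIN sys.database_files AS df ON df.data_space_id = fg.data_space_id
WHERE fg.type = N'FX'
GROUP BY fg.name;
GO

-- Step 2: run ONLY if step 1 shows something is missing (skip the part you already have).
ALTER DATABASE [SubscriberDB]
    ADD FILEGROUP [SubscriberDB_mod] CONTAINS MEMORY_OPTIMIZED_DATA;

ALTER DATABASE [SubscriberDB]
    ADD FILE (NAME = N'SubscriberDB_mod_c1',
              FILENAME = N'D:\SQLData\SubscriberDB_mod_c1')   -- hypothetical path
    TO FILEGROUP [SubscriberDB_mod];
GO

-- Step 3: run AFTER initialization to confirm the tables really are memory-optimized.
SELECT SCHEMA_NAME(t.schema_id) AS schema_name,
       t.name AS table_name,
       t.is_memory_optimized,
       t.durability_desc
FROM sys.tables AS t
WHERE t.is_ms_shipped = 0
ORDER BY t.is_memory_optimized, schema_name, table_name;
```

Any replicated table that shows `is_memory_optimized = 0` in step 3 was created as a traditional/on-disk table.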

Index limitations

As of this writing (SQL 2016, SP1), a memory-optimized table can have a maximum of 9 indexes (if one of them is a columnstore index). That may or may not be an issue for your environment, but it’s a much lower number than traditional/on-disk tables.
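If you plan to add custom indexes on the subscriber, a quick count per table shows how much headroom is left. A minimal sketch for the current database:

```sql
-- Run in the subscriber database.
SELECT SCHEMA_NAME(t.schema_id) AS schema_name,
       t.name AS table_name,
       COUNT(*) AS index_count
FROM sys.tables AS t
JOIN sys.indexes AS i ON i.object_id = t.object_id
WHERE t.is_memory_optimized = 1
  AND i.type <> 0            -- ignore heap entries, if any
GROUP BY t.schema_id, t.name
ORDER BY index_count DESC;
```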

Stored procedure execution

A quick review of Interop vs. Native Compilation:

- Interop – interpreted TSQL as existed prior to SQL 2014. The full TSQL surface area is available with interop mode, and you can access both on-disk and memory-optimized tables.

- Native Compilation – for maximum speed, you’ll want to use natively compiled stored procedures. There are restrictions for natively compiled modules, the most significant being that they can only reference memory-optimized tables, and the full TSQL surface area is not available. As of SQL 2016/SP1, natively compiled modules don’t support CASE expressions or views, and there are many other restrictions. For more details, check “Transact-SQL Constructs Not Supported by In-Memory OLTP” here.

If you execute an UPDATE or DELETE that affects a large number of rows, then that statement is turned into individual UPDATE or DELETE statements that are sent to the distributor, and finally to the subscriber(s). To avoid the overhead of sending all those changes, it’s possible to publish the “execution” of a stored procedure. The documentation says: “..only the procedure execution is replicated, bypassing the need to replicate the individual changes for each row..” Please refer to the document about replicating stored procedure execution here.
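As a rough illustration, publishing the execution of a procedure comes down to adding it as an article with one of the procedure-execution article types. The publication name and the procedure `dbo.usp_CloseOrdersBefore` below are hypothetical.

```sql
-- Run at the publisher, in the publication database.
EXEC sp_addarticle
     @publication = N'MyPublication',            -- hypothetical publication name
     @article = N'usp_CloseOrdersBefore',        -- hypothetical procedure
     @source_owner = N'dbo',
     @source_object = N'usp_CloseOrdersBefore',
     @type = N'proc exec';   -- or N'serializable proc exec' to replicate the call only
                             -- when it runs inside a serializable transaction
```

With this in place, a call to the procedure at the publisher is delivered to the subscriber as a single procedure call instead of one command per affected row.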

The documentation also states that you can customize the stored procedure on the subscriber. Although the documentation doesn’t mention it, the stored procedure can be natively compiled, which should greatly increase performance on the subscriber for transactions that affect a large number of rows. Keep in mind that any changes made to the procedure at the publisher are sent to the subscriber. If this isn’t the behavior you want, disable the propagation of schema changes before executing ALTER PROCEDURE.
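To make that concrete, here is a sketch of what a natively compiled version of a replicated procedure might look like on the subscriber. It assumes a hypothetical memory-optimized table `dbo.Orders` with `OrderDate` and `OrderStatus` columns, and a published procedure with the same name and parameter list; none of these come from the documentation.

```sql
-- Run in the subscriber database, replacing the interop version of the procedure.
-- Assumption: dbo.Orders is a memory-optimized table at the subscriber.
CREATE PROCEDURE dbo.usp_CloseOrdersBefore
    @cutoff DATETIME2(0)
WITH NATIVE_COMPILATION, SCHEMABINDING
AS
BEGIN ATOMIC WITH (TRANSACTION ISOLATION LEVEL = SNAPSHOT, LANGUAGE = N'us_english')

    -- One set-based statement, compiled to native code.
    UPDATE dbo.Orders
    SET    OrderStatus = 'C'
    WHERE  OrderDate < @cutoff;

END;
```

The procedure name and parameters have to match what the publisher sends, since the subscriber simply receives a call to the procedure.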

IDENTITY crisis

You’ll likely be disappointed with native compilation if you’re trying to INSERT many rows at the subscriber, and the destination table includes an IDENTITY column. That’s because it’s not possible to insert a row that has an IDENTITY column in a natively compiled stored procedure. Even if you SET IDENTITY_INSERT on before calling the procedure, the insert still fails with: “The function ‘setidentity’ is not supported with natively compiled modules.”

Custom stored procedures

There is a difference between “replicating stored procedure execution”, and using “custom stored procedures”. Microsoft does not support anything you might create as a “custom stored procedure”, whether or not it’s natively compiled.

Please check the documentation here.

Wrapping up

In-Memory OLTP is steadily making its way into the full feature set offered by SQL Server. As of SQL 2016 SP1, In-Memory OLTP is included with all editions of SQL 2016, except LocalDB.
