/*

Ned Otter

www.NedOtter.com

21 June 2017

THIS CODE AND INFORMATION ARE PROVIDED "AS IS" WITHOUT WARRANTY OF

ANY KIND, EITHER EXPRESSED OR IMPLIED, INCLUDING BUT NOT LIMITED

TO THE IMPLIED WARRANTIES OF MERCHANTABILITY AND/OR FITNESS FOR A

PARTICULAR PURPOSE.

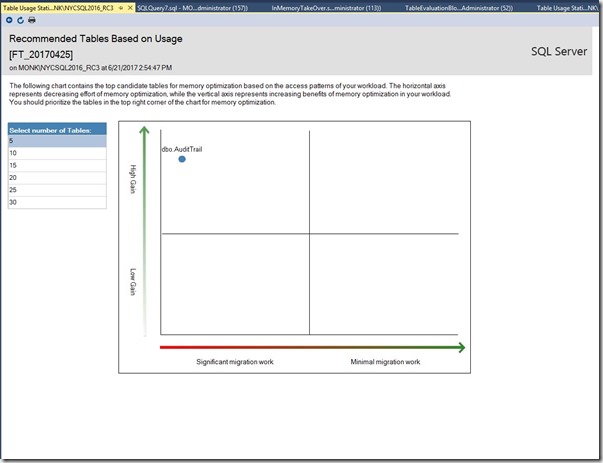

In-Memory OLTP can improve workload performance for highly concurrent, write-intensive workloads.

This script attempts to determine the locking/latching characteristics, and migration difficulty.

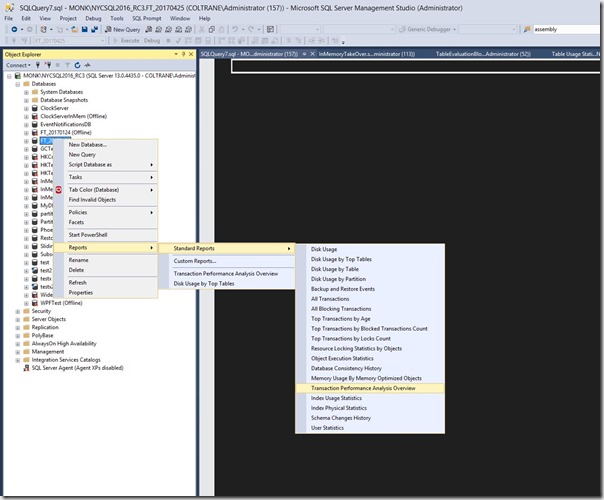

This script is my version of the code that's generated when you run the

"Transaction Performance Analysis Overview Report" for tables. In its original form,

that script counts the number of conditions that might affect migration, giving them all equal weight.

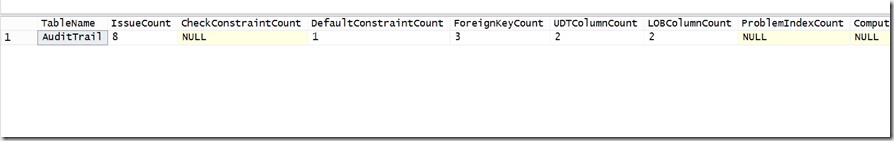

But migrating a user-defined-datatype will be very easy, and migrating foreign keys could be very difficult.

The second result set breaks the issues into separate buckets, so you can more easily decide whether or not a table

is a good candidate for migration.

It does not attempt to determine anything about tempdb contention, which In-Memory can also help with,

and it does not attempt to determine which objects might benefit from Native Compilation.

Instructions:

Step 1:

Execute the first query, so you can determine which tables have high levels of latch/lock activity.

NOTE: The results of the first query will be meaningless, unless you execute it in production, or on a test

system that has recent production-like activity.

Step 2:

Based on the output of the first query, adjust the @table_id for the second query.

*/

/*

Question 1: How much locking/latching does this table have?

*/

/***************************************************

Performance statistics, specifically latch/lock

***************************************************/

DECLARE @table_id INT --= OBJECT_ID('dbo.YourTable');

DECLARE @sum_table TABLE

(

database_id INT NOT NULL

,page_latch_lock_wait_sum INT NULL

);

INSERT INTO @sum_table

SELECT i.database_id

,ISNULL(NULLIF(SUM(i.page_latch_wait_in_ms) + SUM(i.page_lock_wait_in_ms), 0), 1) AS page_latch_lock_wait_sum

FROM sys.dm_db_index_operational_stats(DB_ID(), NULL, NULL, NULL) i

WHERE i.object_id IN (SELECT object_id FROM sys.tables)

OR @table_id IS NULL

GROUP BY i.database_id;

SELECT SCHEMA_NAME(t.schema_id) AS schema_name

,t.name AS table_name

,SUM(i.page_latch_wait_count) AS page_latch_wait_count

,SUM(i.page_lock_count) AS page_lock_count

,SUM(i.page_lock_wait_count) AS page_lock_wait_count

,SUM(ROUND(CAST(i.page_latch_wait_in_ms AS FLOAT) / CAST((CASE i.page_latch_wait_count WHEN 0 THEN 1 ELSE i.page_latch_wait_count END) AS FLOAT), 2)) AS avg_time_per_page_latch_wait

,SUM(ROUND(CAST(i.page_lock_wait_in_ms AS FLOAT) / CAST((CASE i.page_lock_wait_count WHEN 0 THEN 1 ELSE i.page_lock_wait_count END) AS FLOAT), 2)) AS avg_time_per_page_lock_wait

,SUM(CAST(ROUND((page_latch_wait_in_ms + page_lock_wait_in_ms) * 100.0 / st.page_latch_lock_wait_sum, 2) AS DECIMAL(5, 2))) AS percentage_of_wait_for_entire_db

FROM sys.dm_db_index_operational_stats(DB_ID(), NULL, NULL, NULL) i

INNER JOIN sys.tables t ON t.object_id = i.object_id

INNER JOIN sys.indexes ix ON ix.object_id = i.object_id

AND ix.index_id = i.index_id

INNER JOIN @sum_table st ON st.database_id = i.database_id

WHERE t.object_id = @table_id

OR @table_id IS NULL

GROUP BY t.schema_id

,t.object_id

,t.name

ORDER BY page_latch_wait_count DESC;

GO

/*

Evaluation

Question 2: How difficult will the migration be?

******************************

supported without any issues

******************************

nullable columns

LOB data types, i.e NVARCHAR(MAX)

unique indexes/constraints

******************************

supported with varying degrees of migration difficulty

******************************

default constraints -- easy

check constraints -- easy

UDT -- easy

foreign keys -- potentially difficult

triggers -- potentially difficult/impossible

index with type > 2 -- might not be supported, i.e. XML, Spatial

0 = Heap

1 = Clustered

2 = Nonclustered

3 = XML

4 = Spatial

5 = Clustered columnstore index. Applies to: SQL Server 2014 through SQL Server 2017.

6 = Nonclustered columnstore index. Applies to: SQL Server 2012 through SQL Server 2017.

7 = Nonclustered hash index. Applies to: SQL Server 2014 through SQL Server 2017.

******************************

unsupported/blocking

******************************

computed columns (SQL 2017+ supports computed

sparse columns

IDENTITY <> 1, 1

assembly

unsupported data types, i.e. DATETIMEOFFSET

filestream columns

*/

DECLARE @table_id INT --= OBJECT_ID('dbo.YourTable');

DECLARE @MemoryOptimizedTables TABLE

(

object_id INT NOT NULL

);

INSERT INTO @MemoryOptimizedTables

SELECT [object_id]

FROM sys.tables

WHERE [is_memory_optimized] = 1;

DECLARE @Tables TABLE

(

[table_id] INT NOT NULL

,[CheckConstraintCount] INT NULL

,[DefaultConstraintCount] INT NULL

,[ForeignKeyCount] INT NULL

,[ProblemIndexCount] INT NULL

,[ComputedColumnCount] INT NULL

,[IdentityViolations] INT NULL

,[UnsupportedDataTypeCount] INT NULL

,[FilestreamColumnCount] INT NULL

,[LOBColumnCount] INT NULL

,[AssemblyTypeCount] INT NULL

,[UDTColumnCount] INT NULL

,[SparseColumnCount] INT NULL

,[TriggerCount] INT NULL

);

INSERT @Tables

(

table_id

)

SELECT [object_id]

FROM sys.tables

WHERE [is_memory_optimized] = 0

AND @table_id IS NULL OR [object_id] = @table_id;

DECLARE @DefaultContraintTables TABLE

(

parent_object_id INT NOT NULL

);

INSERT @DefaultContraintTables

SELECT [parent_object_id]

FROM sys.default_constraints;

WITH CheckConstraints

AS

(

SELECT t.parent_object_id AS [table_id]

,COUNT(*) AS CountRows

FROM sys.check_constraints t

JOIN sys.tables tbl ON tbl.object_id = t.parent_object_id

WHERE tbl.is_ms_shipped = 0

AND OBJECT_NAME(t.parent_object_id, DB_ID()) IS NOT NULL

AND t.parent_object_id IN (SELECT table_id FROM @Tables)

GROUP BY t.parent_object_id

)

UPDATE @Tables

SET [CheckConstraintCount] = CheckConstraints.CountRows

FROM @Tables AS Tables

INNER JOIN CheckConstraints ON CheckConstraints.table_id = Tables.table_id;

WITH DefaultConstraints

AS

(

SELECT t.parent_object_id AS [table_id]

,COUNT(*) AS CountRows

FROM sys.default_constraints t

INNER JOIN sys.tables tbl ON tbl.object_id = t.parent_object_id

WHERE tbl.is_ms_shipped = 0

AND OBJECT_NAME(t.parent_object_id, DB_ID()) IS NOT NULL

AND t.parent_object_id IN (SELECT * FROM @DefaultContraintTables)

AND t.parent_object_id IN (SELECT table_id FROM @Tables)

GROUP BY t.parent_object_id

)

UPDATE @Tables

SET DefaultConstraintCount = DefaultConstraints.CountRows

FROM @Tables AS Tables

INNER JOIN DefaultConstraints ON DefaultConstraints.table_id = Tables.table_id;

WITH ForeignKeys

AS

(

SELECT t.parent_object_id AS [table_id]

,COUNT(*) AS CountRows

FROM sys.foreign_keys t

INNER JOIN sys.tables tbl ON tbl.object_id = t.parent_object_id

WHERE tbl.is_ms_shipped = 0

AND OBJECT_NAME(t.parent_object_id, DB_ID()) IS NOT NULL

AND t.parent_object_id IN (SELECT table_id FROM @Tables)

GROUP BY t.parent_object_id

)

UPDATE @Tables

SET ForeignKeyCount = ForeignKeys.CountRows

FROM @Tables AS Tables

INNER JOIN ForeignKeys ON ForeignKeys.table_id = Tables.table_id

/*

Type of index:

0 = Heap

1 = Clustered

2 = Nonclustered

3 = XML

4 = Spatial

5 = Clustered columnstore index. Applies to: SQL Server 2014 through SQL Server 2017.

6 = Nonclustered columnstore index. Applies to: SQL Server 2012 through SQL Server 2017.

7 = Nonclustered hash index. Applies to: SQL Server 2014 through SQL Server 2017.

*/

;

WITH Indexes

AS

(

SELECT t.object_id AS [table_id]

,COUNT(tbl.object_id) AS CountRows

FROM sys.indexes t

INNER JOIN sys.tables tbl ON tbl.object_id = t.object_id

WHERE tbl.is_ms_shipped = 0

AND OBJECT_NAME(t.object_id, DB_ID()) IS NOT NULL

--AND (((t.is_unique = 1 OR t.is_unique_constraint = 1) AND t.is_primary_key = 0) OR

--AND t.type > 2

AND t.type NOT IN (0, 1, 2, 5) -- clustered columnstore is supported for in-memory, but it's very different.

AND t.object_id NOT IN (SELECT table_id FROM @Tables)

GROUP BY t.object_id

)

UPDATE @Tables

SET ProblemIndexCount = Indexes.CountRows

FROM @Tables AS Tables

INNER JOIN Indexes ON Indexes.table_id = Tables.table_id;

-- nullable columns are supported....

-- SELECT

--t.object_id AS [table_id],

-- COUNT(*) AS CountRows

-- FROM sys.index_columns ic

-- JOIN sys.tables t ON t.object_id = ic.object_id

-- JOIN sys.all_columns c ON ic.column_id = c.column_id AND t.object_id = c.object_id

-- WHERE t.is_ms_shipped = 0 AND c.is_nullable = 1

-- UNION

WITH Triggers

AS

(

SELECT tb.object_id AS [table_id]

,COUNT(tr.object_id) AS CountRows

FROM sys.triggers tr

INNER JOIN sys.tables tb ON tr.parent_id = tb.object_id

WHERE tr.is_ms_shipped = 0

AND tr.parent_class = 1

AND tb.object_id IN (SELECT table_id FROM @Tables)

GROUP BY tb.object_id

)

UPDATE @Tables

SET TriggerCount = Triggers.CountRows

FROM @Tables AS Tables

INNER JOIN Triggers ON Triggers.table_id = Tables.table_id;

/*

NOTE:

If migrating to SQL 2017, computed columns are supported.

*/

WITH ComputedColumns

AS

(

SELECT t.object_id AS [table_id]

,COUNT(t.object_id) AS CountRows

FROM sys.all_columns t

INNER JOIN sys.tables tbl ON tbl.object_id = t.object_id

INNER JOIN sys.types tp ON t.user_type_id = tp.user_type_id

WHERE tbl.is_ms_shipped = 0

AND (t.is_computed = 1)

AND t.object_id IN (SELECT table_id FROM @Tables)

GROUP BY t.object_id

)

UPDATE @Tables

SET ComputedColumnCount = ComputedColumns.CountRows

FROM @Tables AS Tables

INNER JOIN ComputedColumns ON ComputedColumns.table_id = Tables.table_id;

WITH SparseColumns

AS

(

SELECT t.object_id AS [table_id]

,COUNT(t.object_id) AS CountRows

FROM sys.all_columns t

INNER JOIN sys.tables tbl ON tbl.object_id = t.object_id

INNER JOIN sys.types tp ON t.user_type_id = tp.user_type_id

WHERE tbl.is_ms_shipped = 0

AND (t.is_sparse = 1)

AND t.object_id IN (SELECT table_id FROM @Tables)

GROUP BY t.object_id

)

UPDATE @Tables

SET SparseColumnCount = SparseColumns.CountRows

FROM @Tables AS Tables

INNER JOIN SparseColumns ON SparseColumns.table_id = Tables.table_id;

WITH IdentityViolation

AS

(

SELECT t.object_id AS [table_id]

,COUNT(*) AS CountRows

FROM sys.all_columns t

INNER JOIN sys.tables tbl ON tbl.object_id = t.object_id

INNER JOIN sys.types tp ON t.user_type_id = tp.user_type_id

LEFT JOIN sys.identity_columns ic ON t.object_id = ic.object_id

AND t.column_id = ic.column_id

WHERE tbl.is_ms_shipped = 0

AND

(

t.is_identity = 1

AND (ic.increment_value != 1 OR ic.seed_value != 1)

)

AND t.object_id IN (SELECT table_id FROM @Tables)

GROUP BY t.object_id

)

UPDATE @Tables

SET IdentityViolations = IdentityViolation.CountRows

FROM @Tables AS Tables

INNER JOIN IdentityViolation ON IdentityViolation.table_id = Tables.table_id;

WITH UDTColumns

AS

(

SELECT t.object_id AS [table_id]

,COUNT(t.object_id) AS CountRows

FROM sys.all_columns t

INNER JOIN sys.tables tbl ON tbl.object_id = t.object_id

INNER JOIN sys.types tp ON t.user_type_id = tp.user_type_id

WHERE tbl.is_ms_shipped = 0

AND t.user_type_id != t.system_type_id

AND t.object_id IN (SELECT table_id FROM @Tables)

GROUP BY t.object_id

)

UPDATE

@Tables

SET

UDTColumnCount = UDTColumns.CountRows

FROM @Tables AS Tables

INNER JOIN UDTColumns ON UDTColumns.table_id = Tables.table_id;

WITH AssemblyTypes

AS

(

SELECT t.object_id AS [table_id]

,COUNT(t.object_id) AS CountRows

FROM sys.all_columns t

INNER JOIN sys.tables tbl ON tbl.object_id = t.object_id

INNER JOIN sys.types tp ON t.user_type_id = tp.user_type_id

WHERE tbl.is_ms_shipped = 0

AND tp.is_assembly_type = 1

AND t.object_id IN (SELECT table_id FROM @Tables)

GROUP BY t.object_id

)

UPDATE @Tables

SET AssemblyTypeCount = AssemblyTypes.CountRows

FROM @Tables AS Tables

INNER JOIN AssemblyTypes ON AssemblyTypes.table_id = Tables.table_id;

WITH UnsupportedDataTypes

AS

(

SELECT t.object_id AS [table_id]

,COUNT(t.object_id) AS CountRows

FROM sys.all_columns t

INNER JOIN sys.tables tbl ON tbl.object_id = t.object_id

INNER JOIN sys.types tp ON t.user_type_id = tp.user_type_id

WHERE tbl.is_ms_shipped = 0

AND UPPER(tp.name) IN ( 'DATETIMEOFFSET', 'GEOGRAPHY', 'GEOMETRY', 'SQL_VARIANT', 'HIERARCHYID', 'XML', 'IMAGE', 'XML', 'TEXT', 'NTEXT', 'TIMESTAMP' )

AND t.object_id IN (SELECT table_id FROM @Tables)

GROUP BY t.object_id

)

UPDATE @Tables

SET [UnsupportedDataTypeCount] = UnsupportedDataTypes.CountRows

FROM @Tables AS Tables

INNER JOIN UnsupportedDataTypes ON UnsupportedDataTypes.table_id = Tables.table_id;

WITH FileStream

AS

(

SELECT t.object_id AS [table_id]

,COUNT(t.object_id) AS CountRows

FROM sys.all_columns t

INNER JOIN sys.tables tbl ON tbl.object_id = t.object_id

INNER JOIN sys.types tp ON t.user_type_id = tp.user_type_id

WHERE tbl.is_ms_shipped = 0

AND t.is_filestream = 1

AND t.object_id IN (SELECT table_id FROM @Tables)

GROUP BY t.object_id

)

UPDATE @Tables

SET FilestreamColumnCount = FileStream.CountRows

FROM @Tables AS Tables

INNER JOIN FileStream ON FileStream.table_id = Tables.table_id;

WITH LOBColumns

AS

(

SELECT t.object_id AS [table_id]

,COUNT(t.object_id) AS CountRows

FROM sys.all_columns t

INNER JOIN sys.tables tbl ON tbl.object_id = t.object_id

INNER JOIN sys.types tp ON t.user_type_id = tp.user_type_id

WHERE tbl.is_ms_shipped = 0

AND t.max_length = -1

AND t.object_id IN (SELECT table_id FROM @Tables)

GROUP BY t.object_id

)

UPDATE @Tables

SET LOBColumnCount = LOBColumns.CountRows

FROM @Tables AS Tables

INNER JOIN LOBColumns ON LOBColumns.table_id = Tables.table_id;

SELECT

OBJECT_NAME(table_id) AS TableName

,IIF(CheckConstraintCount IS NULL, 0, CheckConstraintCount) +

IIF(DefaultConstraintCount IS NULL, 0, DefaultConstraintCount) +

IIF(ForeignKeyCount IS NULL, 0, ForeignKeyCount) +

IIF(ProblemIndexCount IS NULL, 0, ProblemIndexCount) +

IIF(ComputedColumnCount IS NULL, 0, ComputedColumnCount) +

IIF(IdentityViolations IS NULL, 0, IdentityViolations) +

IIF(UnsupportedDataTypeCount IS NULL, 0, UnsupportedDataTypeCount) +

IIF(LOBColumnCount IS NULL, 0, LOBColumnCount) +

IIF(AssemblyTypeCount IS NULL, 0, AssemblyTypeCount) +

IIF(UDTColumnCount IS NULL, 0, UDTColumnCount) +

IIF(SparseColumnCount IS NULL, 0, SparseColumnCount) +

IIF(TriggerCount IS NULL, 0, TriggerCount) AS IssueCount

,CheckConstraintCount

,DefaultConstraintCount

,ForeignKeyCount

,UDTColumnCount

,LOBColumnCount

,ProblemIndexCount

,ComputedColumnCount

,IdentityViolations

,UnsupportedDataTypeCount

,FilestreamColumnCount

,AssemblyTypeCount

,SparseColumnCount

,TriggerCount

FROM @Tables

--WHERE OBJECT_NAME(table_id) = 'YourTable'

ORDER BY IssueCount DESC;