Zero free space

This is a continuation of Part 1 and Part 2 of this blog post series, related to resource issues/requirements for memory-optimized databases.

In this post, we’ll continue with simulating what happens to a memory-optimized database when all volumes run out of free space.

In my lab, I’m running Windows Server 2012. Let’s use PowerShell to install the File Server Resource Manager (FSRM), which will allow us to create a quota for the relevant folder:

Add-WindowsFeature -Name FS-Resource-Manager -IncludeManagementTools

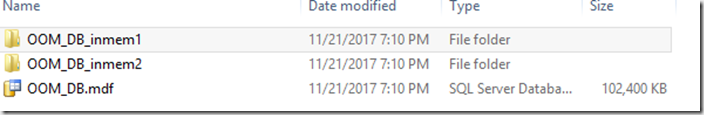

After installing the Windows feature we can set the quota for the folder, but we shouldn’t enable it just yet, because first we have to verify the current size of the folder.

On my server, I created a quota of 1.5GB, and then enabled it.

Now let’s INSERT rows into the table, in batches of 1000, until we reach the limit (the INSERT script is listed in Part 2; I’m trying to keep this post from getting too long).

Once the quota has been reached, we receive the dreaded 41822 error – this is what you’ll see when all of the volumes where your containers reside run out of free space (if even one of the volumes has free space, your workload can still execute).

![image_thumb[389]_thumb image_thumb[389]_thumb](http://nedotter.com/wp-content/uploads/2017/11/image_thumb389_thumb_thumb.png)

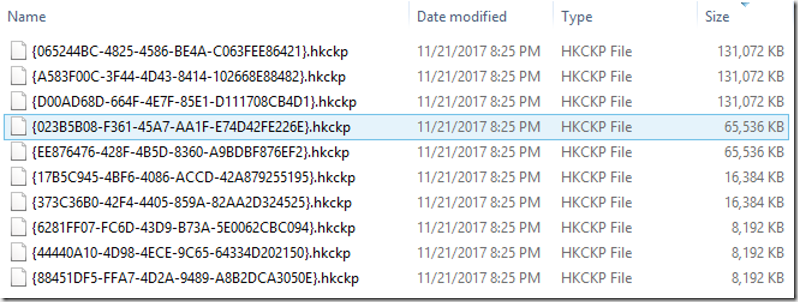

Just out of curiosity, we’ll verify how many rows actually got inserted. On my server, I’ve got 4,639 rows in that table, and the folder consumes 1.44GB. So theoretically, there was enough space on the drive to create more checkpoint files, but it seems as though the engine won’t just create what it can to fit in the available space. It’s more likely that the engine attempts to precreate a set of files, and it either succeeds or fails all at once, but I’ve not confirmed that.
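One way to compare what the engine has allocated on disk against what it is actually using is to aggregate the checkpoint file DMV by state. This is a minimal sketch, not the diagnostic query from the earlier parts of this series. It assumes SQL Server 2016 or later (the column set of sys.dm_db_xtp_checkpoint_files is different in SQL 2014), and it assumes that OOM_DB, the database name used later in this post, is the database under test.

```sql
USE [OOM_DB];
GO

-- Summarize checkpoint files by state and type.
-- allocated_mb is space reserved on disk; used_mb is space actually written.
-- Assumes the SQL Server 2016+ shape of this DMV.
SELECT
    state_desc,
    file_type_desc,
    COUNT(*)                                 AS file_count,
    SUM(file_size_in_bytes)      / 1048576.0 AS allocated_mb,
    SUM(file_size_used_in_bytes) / 1048576.0 AS used_mb
FROM sys.dm_db_xtp_checkpoint_files
GROUP BY state_desc, file_type_desc
ORDER BY state_desc, file_type_desc;
```

A large gap between allocated_mb and used_mb for a given state would be consistent with the idea that files are created ahead of need rather than sized to fit the remaining space, though this query alone does not prove how precreation behaves.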

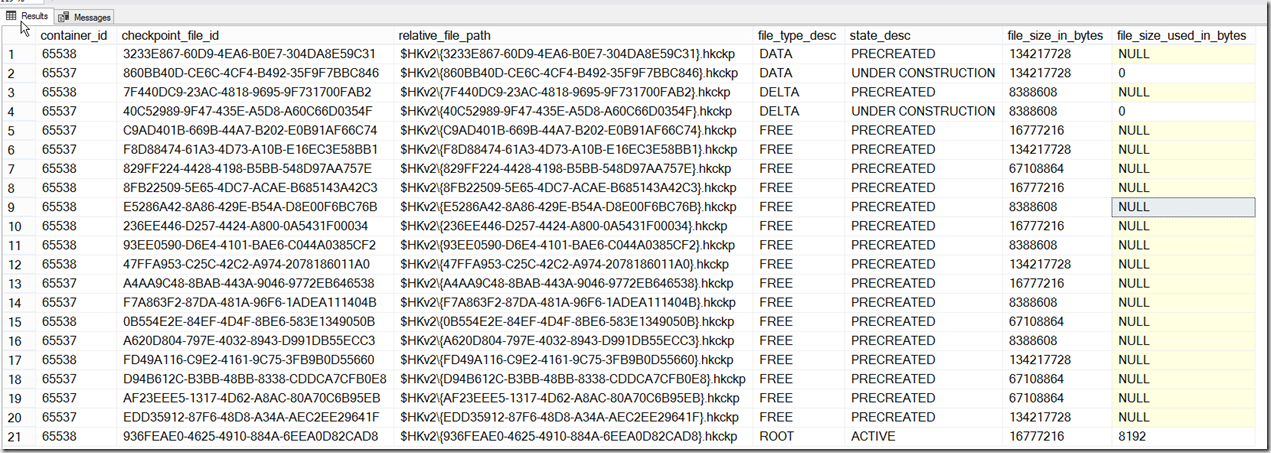

I disabled the quota, executed a manual CHECKPOINT, and ran the diagnostic queries again:

![image_thumb[26] image_thumb[26]](http://nedotter.com/wp-content/uploads/2017/11/image_thumb26_thumb.png)

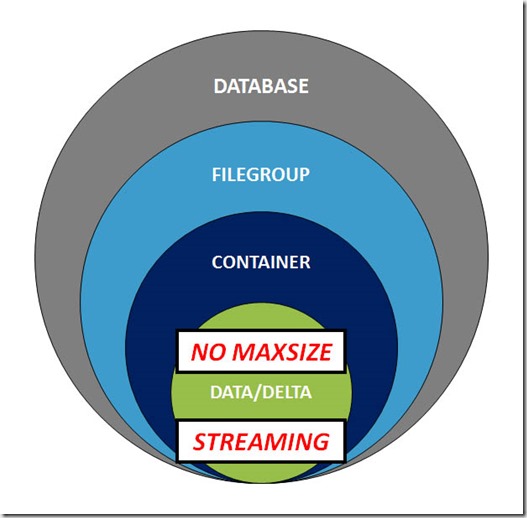

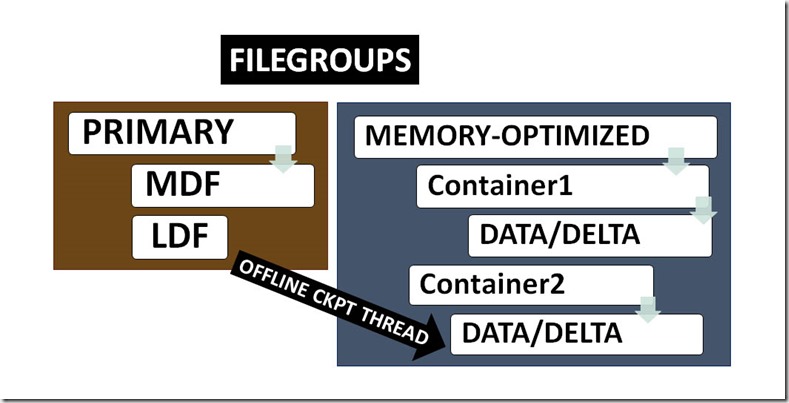

File Merge

Data files persist rows that reside in durable memory-optimized tables, and delta files store references to logically deleted rows. As more and more rows become logically deleted across different sets of CFPs, two things happen:

- the storage footprint increases (imagine that all data files have 50% of their rows logically deleted)

- query performance gets worse, because result sets must be filtered by entries in the delta files, which are increasing in size

Microsoft killed both of these birds with one stone: File Merge (aka Garbage Collection for data/delta files).

In the background, while your workload is running, the File Merge process attempts to combine adjacent sets of CFPs, and this is where we get to one of the file states that we didn’t cover in Part 1: MERGE TARGET.

A file that has the state of MERGE TARGET belongs to the new set of combined data/delta files produced by the File Merge process. Once the merge has completed, the MERGE TARGET transitions to ACTIVE, and as we stated earlier in this series, ACTIVE files can no longer be populated.

But what about the source files that the MERGE TARGET is derived from? After a CHECKPOINT, these files transition to WAITING FOR LOG TRUNCATION, and can be removed. It should be noted that it can take several checkpoints and transaction log backups for CFPs to transition to a state where they can actually be removed. That’s why Microsoft recommends 4x durable memory-optimized data size for the initial storage footprint.

In the images that follow, we can see that the formerly distinct transaction ranges of 101 to 200, and 201 to 300, have been combined into a single CFP, which has the range of 101 to 300.

![image_thumb[29] image_thumb[29]](http://nedotter.com/wp-content/uploads/2017/11/image_thumb29_thumb.png)

![image_thumb[31] image_thumb[31]](http://nedotter.com/wp-content/uploads/2017/11/image_thumb31_thumb.png)

![image_thumb[33] image_thumb[33]](http://nedotter.com/wp-content/uploads/2017/11/image_thumb33_thumb.png)
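To watch a merge from the DMV side, you can list each checkpoint file with its state and transaction range. The query below is a sketch under the same assumptions as before (SQL Server 2016+, run in the context of the memory-optimized database, here assumed to be OOM_DB); it is not necessarily the query that produced the images above.

```sql
USE [OOM_DB];
GO

-- List data/delta files with their transaction ranges.
-- After a merge, expect a MERGE TARGET (later ACTIVE) file whose range
-- spans the ranges of the source files it replaced.
SELECT
    checkpoint_file_id,
    file_type_desc,
    state_desc,
    lower_bound_tsn,
    upper_bound_tsn,
    logical_row_count
FROM sys.dm_db_xtp_checkpoint_files
WHERE file_type_desc IN (N'DATA', N'DELTA')
ORDER BY lower_bound_tsn, file_type_desc;
```

Running it before and after a CHECKPOINT and a transaction log backup should show the source files moving toward WAITING FOR LOG TRUNCATION, although how many cycles that takes will vary.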

Effect on backup size

File Merge, along with the file state changes that CFPs must go through, explains why backups for memory-optimized databases can be considerably larger than the amount of data stored in memory. Until CFPs go through the required state changes, they must be included in backups.

IOPS

The File Merge process requires both storage and IOPS, as it reads from both sets of CFPs, and writes to a new set. Let’s say your workload requires 500 IOPS to perform well. We’ve just added another 1,000 IOPS as a requirement for your workload to maintain the same level of performance: 500 IOPS each for the read and write components of File Merge. That’s why Microsoft recommends 3x workload IOPS for your memory-optimized storage.

Potential remedies, real and imagined

What happens to your memory-optimized database when all volumes run out of free space?

In my testing of inserts that breached the quota for the folder, I saw no effect on database status. However, if I created the database, set the quota to a much lower value, and then created a memory-optimized table, the database status became SUSPECT. In a real-world situation, with hundreds of gigabytes or more of memory-optimized data, the last thing you want to do is a database restore in order to return your database to a usable state.

I was able to set the database OFFLINE, and then ONLINE, and that cleared the SUSPECT status. But keep in mind that setting the database OFFLINE/ONLINE will restream all of your data from storage into memory, so database recovery will be delayed accordingly.

USE master;

GO

ALTER DATABASE [OOM_DB] SET SINGLE_USER WITH ROLLBACK IMMEDIATE;

ALTER DATABASE [OOM_DB] SET OFFLINE;

GO

ALTER DATABASE [OOM_DB] SET ONLINE;

ALTER DATABASE [OOM_DB] SET MULTI_USER;

GO
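Before and after cycling the database, it can help to confirm what state it is actually in. A minimal check, assuming the database is named OOM_DB as in the script above:

```sql
-- Check the current state and access mode of the database.
-- state_desc shows values such as ONLINE, OFFLINE, RECOVERING, or SUSPECT.
SELECT
    name,
    state_desc,
    user_access_desc
FROM sys.databases
WHERE name = N'OOM_DB';
```

While the data is being streamed back into memory you may see RECOVERING here; once that finishes, state_desc should read ONLINE and user_access_desc should read MULTI_USER.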

What can you do if your volumes run out of free space?

Well, in SQL 2014, your database went into “SUSPENDED” mode (not suspect), and it was offline, until perhaps you added more space and restarted the database (not sure, I didn’t test that). In SQL 2016+, the database goes into what’s known as “delete-only mode”, where you can still SELECT data, but modifying data is limited to deleting rows and/or dropping indexes/tables. Of course, SELECT, DELETE, and DROP do nothing to solve your problem: you need more free space.

When a database transitions to delete-only mode, that fact is written to the SQL errorlog:

[WARNING] Database ID: [9]. Checkpoint hit an error code 0x8300000a. Database is now in DeleteOnlyMode
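If you would rather search for that message than scroll through the log, something like the following should work. Note that sp_readerrorlog is an undocumented procedure, so treat this as a sketch: the parameters shown (log number, log type, search string) reflect how it is commonly used, not a supported contract.

```sql
-- Search the current SQL Server error log for the delete-only mode message.
-- 0 = current log file, 1 = SQL Server error log (as opposed to the Agent log).
-- sp_readerrorlog is undocumented; its behavior could change between versions.
EXEC sys.sp_readerrorlog 0, 1, 'DeleteOnlyMode';
```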

You might think that you can issue CHECKPOINT manually, and do transaction log backups, hoping that File Merge will kick in. Or you could manually execute File Merge, with this uber-long thing:

EXEC sys.sp_xtp_checkpoint_force_garbage_collection <dbname>

But keep in mind that if there was no additional free space on the volumes to precreate CFPs, then it’s not likely that there will be enough free space to write a new set of CFPs for DBA-initiated File Merge.

The only thing you can do to remedy this situation is to either free up some space on the existing volumes, or create a new container on a new volume that has free space.
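Adding a container might look like the sketch below. The filegroup name OOM_DB_inmem, the container name OOM_DB_container2, and the E:\InMemContainers path are all hypothetical; substitute the values from your own environment. The first query finds the real name of your memory-optimized filegroup.

```sql
USE [OOM_DB];
GO

-- Find the memory-optimized filegroup (type 'FX') and its existing containers.
SELECT
    fg.name          AS filegroup_name,
    df.name          AS container_name,
    df.physical_name AS container_path
FROM sys.filegroups AS fg
JOIN sys.database_files AS df
    ON df.data_space_id = fg.data_space_id
WHERE fg.type = N'FX';
GO

USE master;
GO

-- Add a new container on a volume that has free space.
-- All names and the path below are hypothetical placeholders.
-- FILENAME is a folder: its parent (E:\InMemContainers) must already exist,
-- and SQL Server creates the final folder itself.
ALTER DATABASE [OOM_DB]
ADD FILE
(
    NAME = N'OOM_DB_container2',
    FILENAME = N'E:\InMemContainers\OOM_DB_container2'
)
TO FILEGROUP [OOM_DB_inmem];
GO
```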

In Part 4, we’ll discuss memory in the same ways we’ve discussed storage – how it’s allocated, and what happens to your memory-optimized workload when you run out of it.
