In-Memory OLTP: Optimizing data load

Inserting large sets of data to memory-optimized tables might be required when initially migrating data from:

harddrive-based or memory-optimized tables in

- the same database

- a separate database (not directly supported)

Some of the ways to load data into memory-optimized tables are:

- SSIS

- BULK INSERT

- bcp

- INSERT/SELECT

SELECT INTO is not supported for memory-optimized tables.

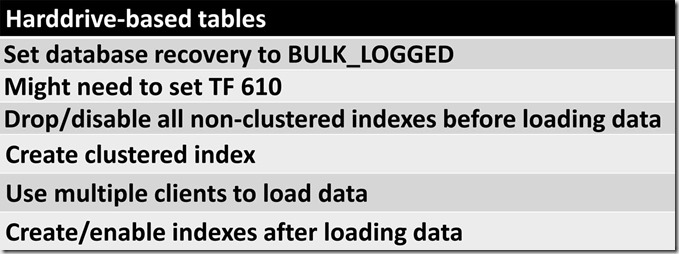

Harddrive-based tables

Let’s review the basic requirements to optimally load data to harddrive-based tables.

Recovery model: Most if not all OLTP databases run with the recovery model set to FULL. DBAs are taught from birth that when loading data, the recovery model should be set to BULK_LOGGED so that the transaction log doesn’t explode when you load data. The next transaction log backup will still include all the data that was loaded, but if you set the recovery model to BULK_LOGGED, you won’t require the extra storage to accommodate transaction log growth.

Itzik Ben-Gan wrote an excellent article on minimal logging here. It covers Trace Flag 610 and many other aspects of loading data into harddrive-based tables.

Indexes: For harddrive-based tables, we should have the minimum amount of indexes in place or enabled, because all index modifications are fully logged, which slows down the data load (TF 610 changes this behavior). You’ll still have to rebuild/create those indexes, and that will be logged, but it’s often faster to do that than load data with indexes in place, if for some reason TF 610 can’t be used.

Clustered indexes: For harddrive-based tables, we want to load the data sorted by the clustering key, so that we can eliminate any sorting.

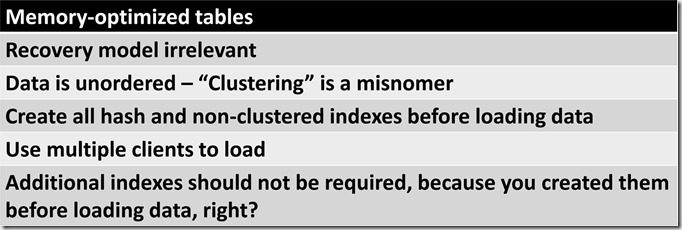

Memory-optimized tables

Basic requirements to optimally load data to memory-optimized tables:

Most DBAs are surprised to learn that DML changes to memory-optimized tables are always fully logged, regardless of the database recovery model. For INSERT/UPDATE/DELETE on memory-optimized tables, there is no such thing as “minimally logged”.

In SQL Server 2016 we finally have the ability to use the ALTER TABLE command to change memory-optimized tables. Most ALTER TABLE operations are executed in parallel and have the benefit of being minimally logged.

I did the following to verify that index creation is indeed minimally logged (based on SQL 2016 RC3**):

- Create a memory-optimized table and load 15 million rows

- Execute BACKUP LOG and CHECKPOINT (a few times)

- Execute SELECT COUNT(*) FROM fn_dblog(NULL, NULL), result is 30 rows

- ALTER TABLE/ADD NOT NULL column: 7 seconds

- Execute SELECT COUNT(*) FROM fn_dblog(NULL, NULL), result is 308 rows

- Execute BACKUP LOG and CHECKPOINT (a few times)

- Execute SELECT COUNT(*) FROM fn_dblog(NULL, NULL), result is 35 rows

- ALTER TABLE ADD INDEX: 13 seconds

- Execute SELECT COUNT(*) FROM fn_dblog(NULL, NULL), result is 118 rows

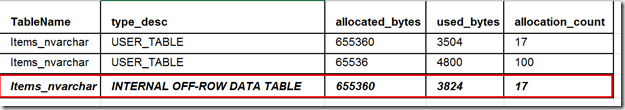

**If an index column is currently off-row, creating an index that references this column causes the column to be moved in-row. If the index is dropped, the column is again moved off-row. In both of these scenarios, ALTER TABLE is fully logged and single-threaded.

Then I executed a command that is definitely not minimally logged:

- ALTER TABLE/ADD NOT NULL nvarchar(max) column: 6 minutes, 52 seconds

- Execute SELECT COUNT(*) FROM fn_dblog(NULL, NULL), result is 210,280 rows

So from a logging perspective, it probably doesn’t make a lot of difference if non-clustered indexes are in place when data is loaded to memory-optimized tables. But concurrency will definitely suffer when creating indexes with ALTER TABLE/ADD INDEX, as the table is offline for the entire duration of any ALTER commands. That might be somewhat mitigated by the fact that you can now create multiple indexes, constraints, etc, with a single ALTER TABLE statement:

ALTER TABLE dbo. MyInMemTable ADD INDEX IX_Column1(Column1) , INDEX IX_Column2 (Column2)

“Clustered” indexes

Sadly, using the label “clustered” to describe any index on memory-optimized tables will confuse many people. For harddrive-based tables, a clustered index determines the physical order of data pages on disk, and clustered indexes for harddrive-based tables are the primary source of data – they are in fact the actual data for the table.

With regard to how data for memory-optimized tables is stored in memory, it’s not possible to have any form of ordering. Yes, you can create a “clustered” index on a memory-optimized table, but it is not the primary source of data for that table. The primary source of data is still the memory-optimized table in memory.

Loading

You should determine a way to break up the data loading process so that multiple clients can be executed in parallel. By client I mean SSMS, Powershell, SQLCMD, etc. This is no different than the approach you would take for loading data to harddrive-based tables.

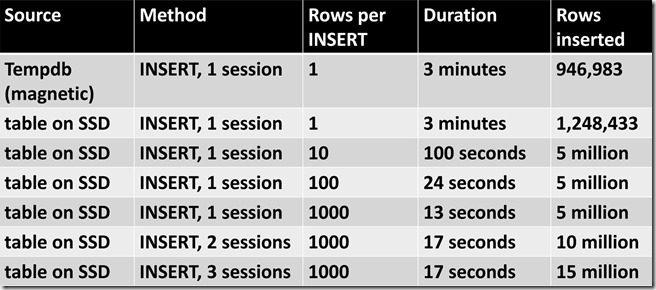

When reviewing the following chart, remember that natively compiled stored procedures won’t work for any scenario that includes both harddrive-based and memory-optimized tables.

|

Source |

Method |

Notes |

|

harddrive-based, same db |

INSERT/SELECT |

Supported, but excruciatingly painful with large data sets (single INSERT/SELECT statement), even if using a HASH index with bucket count properly configured. I succeeded in locking up my server several times with this approach. |

|

harddrive-based, different db |

INSERT/SELECT |

Not supported. You can use tempdb to stage the data, i.e. SELECT INTO ##temptable. Then process data with multiple clients. |

|

harddrive-based, files |

bcp out/ bcp in |

Supported |

|

harddrive-based, different db |

indexed memory-optimized table variable |

Supported, but not “transactional”. Modifications to rows in a memory-optimized table variable creates row versions (see note below). |

BULK INSERT is also supported, with the same restrictions as INSERT/SELECT (can’t go cross-database).

Different Source and Destination databases

a. If you are copying data between databases, i.e. Database A is the source for harddrive-based data you want to migrate, and Database B is the destination for memory-optimized data, you can’t use INSERT/SELECT. That’s because if there is a memory-optimized table as the source or destination of the INSERT/SELECT, you’ll be going “cross-database”, and that’s not allowed. You’ll either need to copy harddrive-based data to a global table (##) in TempDB, to an external file and then use BCP, or to a memory-optimized table variable (further explanation below).

b. Next, you’ll have to get the data into the memory-optimized tables. If using a ##TempTable, you can use stored procedures to process distinct key value ranges, allowing the procedures to be executed in parallel. For performance reasons, before calling these stored procedures, you’ll need to create an index on the primary key of the ##TempTable. If using stored procedures, you should determine the optimal batch size for your server/storage (see chart at the end of this post for my results using this method).

c. Natively compiled stored procedures won’t work in this scenario, because you can’t reference disk-based tables or TempDB from natively compiled stored procedures.

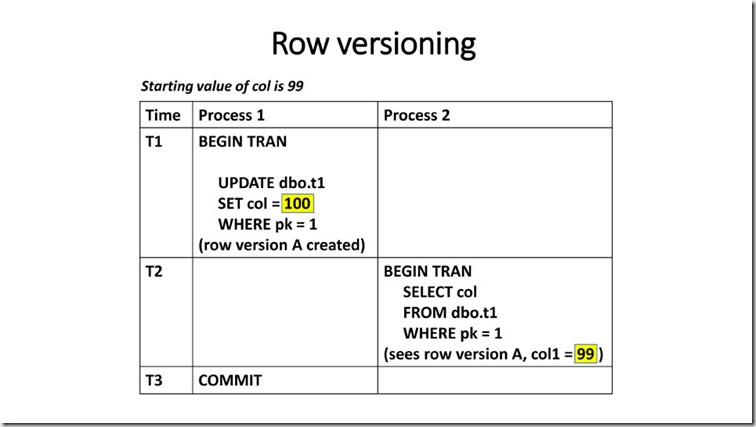

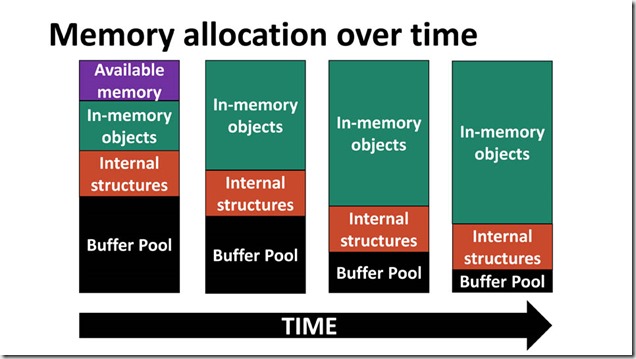

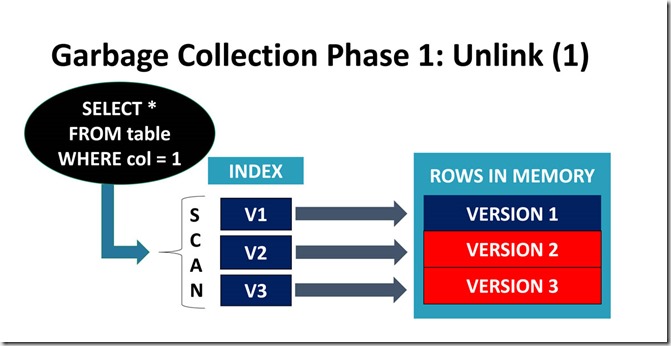

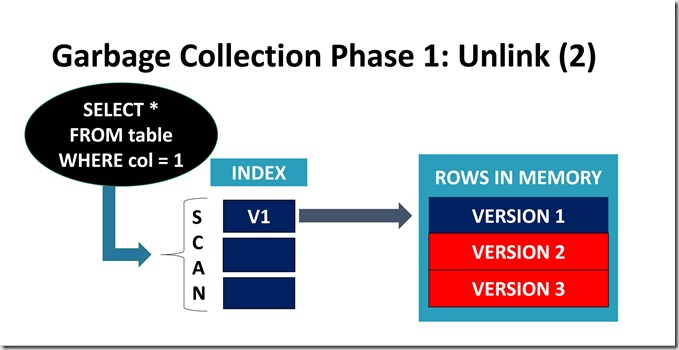

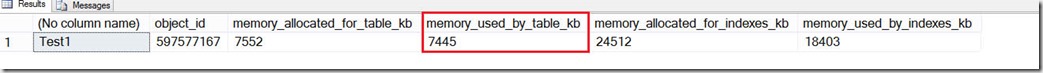

d. Instead of using a ##TempTable, it’s possible to insert data into an indexed memory-optimized table variable from the source database, and then use INSERT/SELECT from that variable into the destination database. That would solve the issue of making a second copy on disk, but be careful if you need to transform the data in the memory-optimized table variables, because updating data in memory-optimized table variables creates row versions, which will consume memory. That’s in addition to the memory required for the memory-optimized table variable itself.

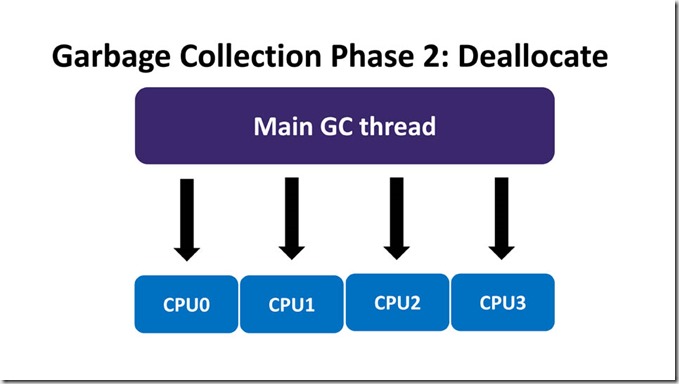

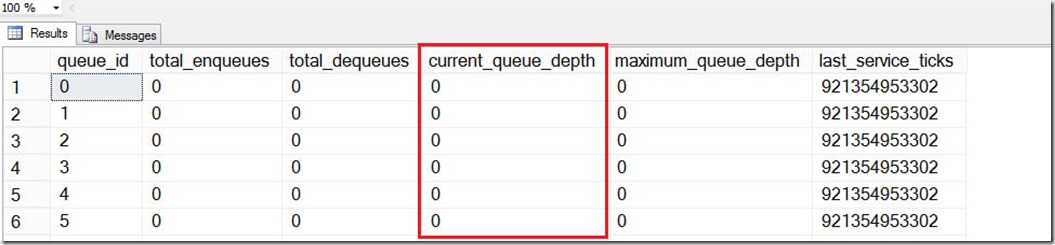

e. Garbage collection is a process that frees memory consumed by row versions, which were created as a result of changes to data in memory-optimized tables. Unfortunately, the garbage collection process does not free up memory consumed by memory-optimized table variables – those row versions will consume additional memory (until the memory-optimized table variable goes out of scope).

In order to use a natively compiled stored procedure for copying data from one table to another, the source and destination tables must both be memory-optimized, and both must reside in the same database.

Hardware/software used for testing

Software

- Windows Server 2012 Datacenter

- SQL 2016 RC3

- sp_configure max memory: 51200 MB

- Resource pool of 70%

Hardware

- Make/model: custom built

- Physical memory: 64GB

- Memory stick: Samsung M386A4G40DM0 32GB x 2

- Dual Intel Xeon E5-2630 v3 CPU

- Transaction log on Intel 750 PCIe SSD

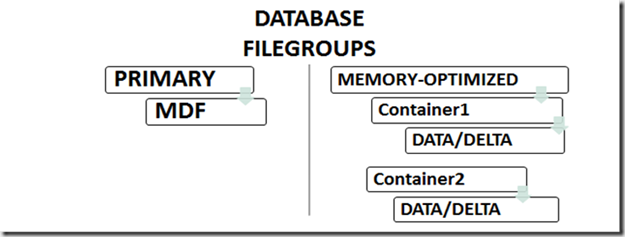

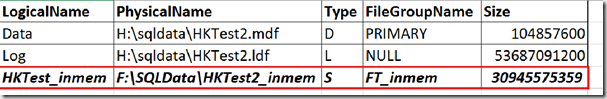

- Checkpoint File Pairs on OWC Mercury Accelsior PCIe SSD

Testing details:

- SELECT INTO ##TempTable was used to make the source data visible from within the memory-optimized database.

- An index was created on the primary key for ##TempTable (INT IDENTITY). The “table on SSD” in the chart below was stored on the Intel 750 PCIe SSD

- All inserts were done by calling an interpreted TSQL stored procedure which processed rows in batches, using “PrimaryKey BETWEEN val1 and val2”. No key generation was involved, because in the procedure, SET IDENTITY_INSERT was ON.

- There was a single HASH index on the memory-optimized table, with BUCKET_COUNT set to 10 million, in order to handle the initial data set of 5 million rows. Increasing the BUCKET_COUNT TO 30 million did not make any appreciable difference in the final test (with three sessions loading 5 million rows each).