This post will highlight the fairly wide gap in functionality between clustered columnstore indexes for on-disk tables compared to memory-optimized tables, for SQL 2016.

The first thing to note – which is not specific to columnstore for either type of table – is that Microsoft chose a really poor name for this type of index.

Why do I say that?

For the following reasons:

1. Traditional/regular clustered indexes physically sort data, but data within a columnstore index is unordered (that’s true for clustered and nonclustered columnstore indexes).

2. For a traditional/regular clustered index, the key columns are added to all nonclustered indexes. Columnstore indexes don’t have a “key” so nothing about them gets copied to any nonclustered indexes.

Now that we’ve established the issues with the name – and the potential confusion as a result – let’s get to the differences between clustered columnstore indexes for on-disk tables compared to memory-optimized tables.

The only type of columnstore index you are allowed to create on a memory-optimized table is a clustered columnstore. But as you’ll find out in a moment, that naming convention has it’s own set of issues when discussing memory-optimized tables…..

1. Primary data source

On-disk: When you create a clustered columnstore index on a disk-based table, it actually replaces the rowstore, and becomes the primary data source. Columnstore compression typically reduces the storage footprint greatly (often by ~90% or so), depending on the specific data types represented in the table.

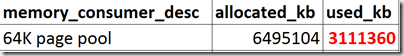

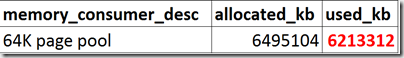

Memory-optimized: When you create a clustered columnstore index on a memory-optimized table, the rows in memory remain the primary data source. Creating a “clustered columnstore” index on a memory-optimized table actually increases the storage footprint (same for nonclustered HASH and RANGE indexes ) by about 10%. That’s why I say that all indexes on memory-optimized tables are nonclustered (because they are). Keep in mind that “storage footprint” in this case means “additional memory”. Despite what you may have read or heard elsewhere, clustered columnstore indexes on memory-optimized tables are persisted to disk, so that also take up space on your persistent storage. This reason this is done is to reduce the impact on RTO (see my blog post here for more info).

2. Schema changes

On-disk: After adding a clustered columnstore index to an on-disk table, you can use ALTER TABLE to modify the schema, adding or dropping both columns and nonclustered indexes.

Memory-optimized: After adding a clustered columnstore index to an memory-optimized table, you can not use ALTER TABLE to modify the schema. The schema becomes read-only, but of course you can still perform DML on the table.

3. LOB data

On-disk: You can create a clustered columnstore index on an on-disk table that has LOB data in vNext (not SQL 2014 or 2016).

Memory-optimized: As of vNext RC1, LOBs are not supported for memory-optimized tables, although this might change by the time we get to RTM.

(Update: 27 Aug 2017 – SQL 2017 does not support LOB columns for CCI on memory-optimized tables)

4. NC indexes

On-disk: After you create a clustered columnstore index on an on-disk table, you can use ALTER TABLE to create nonclustered indexes.

Memory-optimized: After you create a clustered columnstore index on a memory-optimized table, you can not use ALTER TABLE to create nonclustered indexes, but they can be created inline as part of the CREATE TABLE statement.

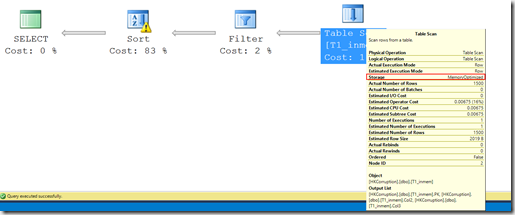

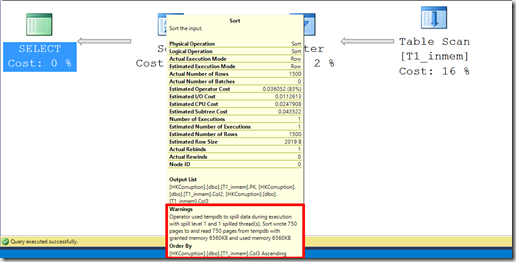

5. Parallelism

On-disk: When you query an on-disk table that has a columnstore index, the database engine can use parallelism to process the results more quickly.

Memory-optimized: When you query a memory-optimized table that has a columnstore index, the database engine can use parallelism to process the results more quickly, BUT, that statement is only true if you use interop. Natively compiled modules are always executed serially.

6. Archival compression

On-disk: There are two compression options for on-disk tables: columnstore and columnstore archival.

Memory-optimized: There is only one compression option for memory-optimized tables: columnstore.

7. Index maintenance

On-disk: You can use REBUILD or REORGANIZE for clustered columnstore indexes for on-disk tables.

Memory-optimized: REORGANIZE is not supported for memory-optimized tables, but in order to migrate rows from the virtual delta rowgroup into compressed rowgroups, you can use: sys.sp_memory_optimized_cs_migration. REBUILD is supported, but only for changing the bucket count for HASH indexes.

8. Filtered indexes

On-disk: For on-disk tables, it’s possible to create a nonclustered columnstore index with a filter.

Memory-optimized: For memory-optimized tables, it’s not possible to create a clustered columnstore index with a filter (and you cannot create a nonclustered columnstore index).

9. Temporary structures

On-disk: I’m not sure why you’d want to do it, but it’s possible to create a clustered columnstore index on a #temp table.

Memory-optimized: The equivalent of #temp tables would be memory-optimized table variables. It’s not possible to create clustered columnstore indexes on them, and besides, memory-optimized table variables are only able to be created with the body of a natively compiled module, and as we discussed earlier in this post, natively compiled modules cannot make use of columnstore indexes.

10. Other items to note

There are a few more wrinkles in the memory-optimized universe for columnstore indexes…..

Durability: The memory-optimized table must be durable (created with DURABILITY = SCHEMA_AND_DATA)

Off-row: You cannot create a clustered columnstore index on a memory-optimized table that has off-row columns

11. (special thanks to Niko Neugebauer)

On-disk: Columnstore contains Vertipaq optimisations (this changes for CCI when we build secondary nonclustered rowstore indexes).

Memory-optimzed: Columnstore does not contain Vertipaq optimisations.

12. Computed columns (special thanks to Niko Neugebauer)

On-disk: CCI supports computed columns

Memory-optimzed: CCI does not support computed columns (but memory-optimized tabes support computed columns and indexes on computed columns)