In-Memory OLTP can increase performance for a variety of workloads. For example, if your workload creates many #TempTables, ##TempTables, or @TableVariables, they all have to be allocated in TempDB, and it’s possible that TempDB itself is a bottleneck. Some DBAs/Developers mistakenly believe that @TableVariables reside only in memory, which is not true, and has been proven many times in blog posts like this and this, by Wayne Sheffield and Gail Shaw respectively.

Microsoft has described the ways in which temp tables and table variables can be replaced by using memory-optimized objects here. It’s true that we can now have truly memory-resident temporary objects, and that if your workload was bottlenecked due to TempDB io or allocation issues (GAM/SGAM/PFS), using memory-optimized tables variables can increase workload throughput. However, what’s not mentioned in that article is the impact of choosing different types of indexes for the table variable, which has the effect of using 2x memory for the table variable. For large numbers of rows this can even result in an out-of-memory condition. This would be particularly relevant if you are migrating a large number of rows from harddrive-based tables to memory-optimized tables, and the source and destination databases are different.

Creating a memory-optimized table variable is a two step process:

1. create a table type

2. create a variable of that type

Example (note that the PK column of the table type is defined as PRIMARY KEY NONCLUSTERED HASH):

CREATE TYPE dbo.InMemType AS TABLE

(

[PK] [INT] NOT NULL PRIMARY KEY NONCLUSTERED HASH WITH (BUCKET_COUNT = 20000000)

,[Col1] [INT] NOT NULL

,[Col2] [NVARCHAR](255) NOT NULL

,[Col3] [UNIQUEIDENTIFIER] NULL

,[Col4] [INT] NULL

,[Col5] [INT] NULL

,[Col6] [VARCHAR](6) NULL

,[Col7] [NVARCHAR](255) NOT NULL

,[Col8] [NVARCHAR](255) NOT NULL

,[Col9] [NVARCHAR](255) NOT NULL

,[Col10] [DATETIME] NOT NULL

,[Col11] [NVARCHAR](1640) NULL

,[Col12] [NVARCHAR](1640) NULL

);

go

SET NOCOUNT ON;

DECLARE @InMemVariable dbo.InMemType

In the following script, ’64K page pool’ indicates the amount of memory allocated to memory-optimized table variables

SET NOCOUNT ON;

DECLARE @InMemVariable dbo.InMemType

DECLARE @InMemVariable dbo.InMemType ;

DECLARE @MaxValue INT = 1000000

DECLARE @Increment INT = 1

DECLARE @Counter INT = 1

SELECT memory_consumer_id

,memory_consumer_type_desc

,memory_consumer_desc

,object_id

,OBJECT_SCHEMA_NAME(object_id) + '.' + OBJECT_NAME(object_id) [Table_Name]

,index_id

,CAST(allocated_bytes / 1024. AS NUMERIC(15, 2)) [allocated_kb]

,CAST(used_bytes / 1024. AS NUMERIC(15, 2)) [used_kb]

FROM sys.dm_db_xtp_memory_consumers

WHERE memory_consumer_desc = '64K page pool'

WHILE (@Counter <= @MaxValue)

BEGIN

INSERT @InMemVariable

(

[Col1]

,[Col2]

,[Col3]

,[Col4]

,[Col5]

,[Col6]

,[Col7]

,[Col8]

,[Col9]

,[Col10]

,[Col11]

,[Col12]

)

SELECT [Col1]

,[Col2]

,[Col3]

,[Col4]

,[Col5]

,[Col6]

,[Col7]

,[Col8]

,[Col9]

,[Col10]

,[Col11]

,[Col12]

FROM <harddrive-based table>

WHERE PK = @Counter

SET @Counter = @Counter + 1

END

SELECT COUNT(*)

FROM @InMemVariable

SELECT memory_consumer_id

,memory_consumer_type_desc

,memory_consumer_desc

,object_id

,OBJECT_SCHEMA_NAME(object_id) + '.' + OBJECT_NAME(object_id) [Table_Name]

,index_id

,CAST(allocated_bytes / 1024. AS NUMERIC(15, 2)) [allocated_kb]

,CAST(used_bytes / 1024. AS NUMERIC(15, 2)) [used_kb]

FROM sys.dm_db_xtp_memory_consumers

WHERE memory_consumer_desc = '64K page pool'

The PK column of the table type is defined as PRIMARY KEY NONCLUSTERED HASH WITH (BUCKET_COUNT = 20000000)

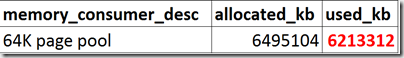

If you instead define the PK column to use a RANGE index (non-HASH index), my testing has shown that memory allocation for the variable is almost exactly double that of using the HASH index.

CREATE TYPE dbo.InMemType AS TABLE

(

[PK] [INT] NOT NULL PRIMARY KEY NONCLUSTERED

,[Col1] [INT] NOT NULL

,[Col2] [NVARCHAR](255) NOT NULL

,[Col3] [UNIQUEIDENTIFIER] NULL

,[Col4] [INT] NULL

,[Col5] [INT] NULL

,[Col6] [VARCHAR](6) NULL

,[Col7] [NVARCHAR](255) NOT NULL

,[Col8] [NVARCHAR](255) NOT NULL

,[Col9] [NVARCHAR](255) NOT NULL

,[Col10] [DATETIME] NOT NULL

,[Col11] [NVARCHAR](1640) NULL

,[Col12] [NVARCHAR](1640) NULL

);

go

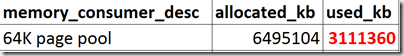

HASH index, 64 page pool:

RANGE index, 64 page pool:

Not related to index choice – but still significant – is that the memory allocated to memory-optimized table variables (and their row versions, if any) is not released until the variable goes out of scope. Garbage collection for row versions ignores memory-optimized table variables.

Updating all rows in the variable will create row versions, and at least in this case, the row versions did not consume a lot of additional memory. I blogged about row versions in here.

If you think Microsoft should fix this issue bug with RANGE indexes on memory-optimized table variables, please upvote this connect item.

Thanks for posting this. As I’m starting to look more into in-memory stuff, and specifically memory-optimized table variables, this will definitely come in handy.

I have a question for you that’s kinda-sorta-not-really related. I notice that most examples seem to load data into in-memory tables and variables with a loop instead of a bulk load or an insert/select combo. Is this because it can handle individual inserts so much faster?

In my first tests with in-memory, I was trying to bulk load tables and was getting abysmal load times. I’m thinking I should have been emulating an OLTP type workflow and just doing individual inserts instead.

Hi Eric,

Singleton inserts will also be slow – you’ve got to find the right batch size for your system, and run in parallel so that loading a large set of data doesn’t take forever.

Please check this blog post about optimal data load techniques:

http://nedotter.com/archive/2016/05/in-memory-oltp-optimizing-data-load/

If that doesn’t answer your questions, feel free to reach out again.