With regard to backup and recovery, databases that contain durable memory-optimized tables are treated differently than backups that contain only disk-based tables. DBAs must be aware of the differences so that they don’t mistakenly affect production environments and impact SLAs.

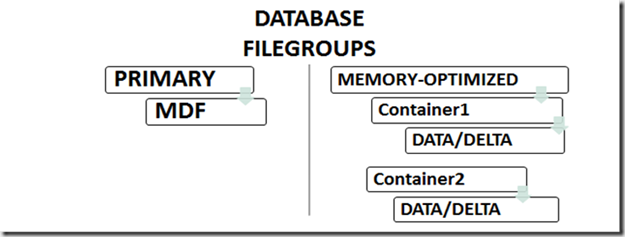

The following image describes files/filegroups for databases that contain durable memory-optimized data:

Data/delta files are required so that memory-optimized tables can be durable, and they reside in Containers, which is a special type of folder. Containers can reside on different drives (more about why you’d want to do that in a bit).

Database recovery occurs due to the following events:

- Database RESTORE

- Database OFFLINE/ONLINE

- Restart of SQL Server service

- Server boot

- Failover, including

- FCI

- Availability Groups*

- Log Shipping

- Database mirroring

The first thing to be aware of is that having durable memory-optimized data in a database can affect your Recovery Time Objective (RTO).

Why?

Because for each of the recovery events listed above, SQL Server must stream data from the data/delta files into memory as part of recovery.

There’s no getting around the fact that if you have lots of durable memory-optimized data, even if you have multiple containers on different volumes, recovery can take a while. That’s especially true in SQL 2016 because Microsoft has raised the limit on the amount of memory-optimized data per database from 256GB to multiple TB (yes, terabytes, limited only by the OS). Imagine waiting for your multi-terabytes of data to stream into memory, and how that will impact your SLAs (when SQL Server streams data to memory, you’ll see a wait type of WAIT_XTP_RECOVERY).

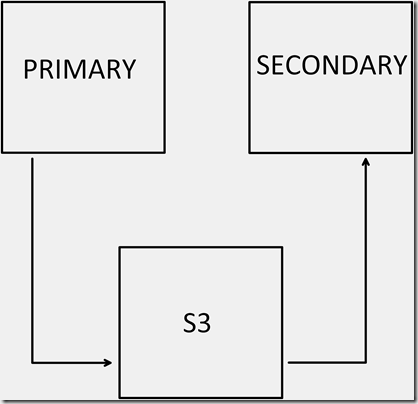

*One exception to the impact that failover can have is when you use Availability Groups with a Secondary replica. In that specific scenario, the REDO process keeps memory-optimized tables up to date in memory on the Secondary, which greatly reduces failover time.

Indexes for memory-optimized tables have no physical representation on disk. That means they must be created as part of database recovery, further extending the recovery timeline.

CPU bound recovery

The recovery process for memory-optimized data uses one thread per logical CPU, and each thread handles a set of data/delta files. That means that simply restoring a database can cause the server to be CPU bound, potentially affecting other databases on the server.

During recovery, SQL Server workloads can be affected by increased CPU utilization due to:

- low bucket count for hash indexes – this can lead to excessive collisions, causing inserts to be slower

- nonclustered indexes – unlike static HASH indexes, the size of nonclustered indexes will grow as the data grows. This could be an issue when SQL Server must create those indexes upon recovery.

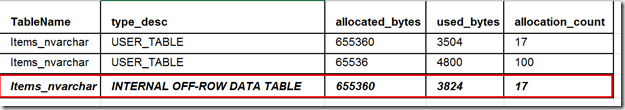

- LOB columns – new in SQL 2016, SQL Server maintains a separate internal table for each LOB column. LOB usage is exposed through the sys.memory_optimized_tables_internal_attributes and sys.dm_db_xtp_memory_consumers views. LOB-related documentation for these views has not yet been released.

You can see from the following output that SQL 2016 does indeed create a separate internal table per LOB column. The Items_nvarchar table has a single NVARCHAR(MAX) column. It will take additional time during the recovery phase to recreate these internal per-column tables.

Corruption

Because they don’t have any physical representation on disk (except for durability, if you so choose), memory-optimized tables are completely ignored by both CHECKDB and CHECKTABLE. There is no allocation verification, or any of the myriad other benefits that come from running CHECKDB/CHECKTABLE on disk-based tables. So what is done to verify that everything is ok with your memory-optimized data?

CHECKSUM of data/delta files

When a write occurs to a file, a CHECKSUM for the block is calculated and stored with the block. During database backup, the CHECKSUM is calculated again and compared to the CHECKSUM value stored with the block. If the comparison fails, the backup fails (no backup file gets created).

Restore/Recovery

If a backup file contains durable memory-optimized data, there is currently no way to interrogate that backup file to determine how much memory is required to successfully restore.

I did the following to test backup/recovery for a database that contained durable memory-optimized data:

- Created a database with only one durable memory-optimized table

- Generated an INSERT only workload (no merging of delta/delta files)

- INSERTed rows until the size of the table in memory was 20GB

- Created a full database backup

- Executed RESTORE FILELISTONLY for that backup file

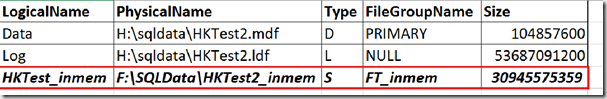

The following are the relevant columns from the FILELISTONLY output. Note the last row, the one that references the memory-optimized filegroup:

There are several things to be aware of here:

- The size of the memory-optimized data in the backup is 10GB larger than memory allocated for the table (the combined size of the data/delta files is 30GB, hence the extra 10GB)

- The Type for the memory-optimized filegroup is ‘S’. Within backup files, Filestream, FileTable and In-Memory OLTP all have the same value for Type, which means that database backups that contain two or more types of streaming data don’t have a way to differentiate resource requirements for restoring. A reasonable naming convention should help with that.

- It is not possible to determine how much memory is required to restore this database. Usually the amount of memory is about the same size as the data/delta storage footprint, but in this case the storage footprint was overestimated by 50%, perhaps due to file pre-creation. There should be a fix in SQL 2016 RC0 to reduce the size of pre-created data/delta files for initial data load. However, this does not help with determining memory requirements for a successful restore.

Now let’s have a look at a slightly different scenario — imagine that you have a 1TB backup file, and that you are tasked with restoring it to a development server. The backup file is comprised of the following:

- 900GB disk-based data

- 100GB memory-optimized data

The restore process will create all of the files that must reside on disk, including files for disk-based data (mdf/ndf/ldf) and files for durable memory-optimized data (data/delta files). The general steps that the restore process performs are:

- Create files to hold disk-based data (size = 900GB, so this can take quite a while)

- Create files for durable memory-optimized data (size = 100GB)

- After all files are created, 100GB of durable memory-optimized data must be streamed from the data files into memory

But what if the server you are restoring to only has 64GB of memory for the entire SQL Server instance? In that case, the process of streaming data to memory will fail when there is no more memory available to stream data. Wouldn’t it have been great to know that before you wasted precious time creating 1TB worth of files on disk?

When you ask SQL Server to restore a database, it determines if there is enough free space to create the required files from the backup, and if there isn’t enough free space, the restore fails immediately. If you think that Microsoft should treat databases containing memory-optimized data the same way (fail immediately if there is not enough memory to restore), please vote for this Azure UserVoice item.