A subset of the tools available for troubleshooting interpreted stored procedures are available for troubleshooting natively compiled procedures.

The following table highlights the differences

| Method | Interpreted | Natively compiled |

| Recompile specific statements | Supported | Not supported – but theoretically not required, due to the impossibility of parameter sniffing |

| Execute procedure with RECOMPILE | Supported | Not supported – but theoretically not required, due to the impossibility of parameter sniffing |

| Estimated/Actual plan | Supported | “Estimated Plan” makes no sense in the context of natively compiled stored procedures. The plan that will be executed is available from SHOWPLAN_XML or by clicking (“Estimated Plan” in SSMS, but it’s not “estimated”) |

| Remove plan from plan cache | Supported | Not supported – plans for natively compiled stored procedures are not stored in the plan cache. |

| DBCC FREEPROCCACHE | Supported | No effect, because plans for natively compiled stored procedures are not stored in the plan cache. |

| SET STATISTICS IO ON | Supported | Not supported/required, because there is no such thing as IO for memory-optimized tables. |

| SET STATISTICS TIME ON | Supported | Supported, but might not be 100% accurate, because execution times less than 1 millisecond are reported as 0 seconds. Total_worker_time may not be accurate if many executions take less than 1 millisecond. |

| SET FMTONLY | Supported | Not supported, but you can use sp_describe_first_result_set. |

| SHOWPLAN_XML | Supported | Supported |

| SHOWPLAN_ALL | Supported | Not supported |

| SHOWPLAN_TEXT | Supported | Not supported |

| Mismatched datatypes | xEvents hekaton_slow_parameter_passing, with reason = parameter_conversion. | |

| Named parameters, i.e.

EXEC dbo.Proc @Param1 = @Param1 |

Supported | Supported, but not recommended, due to performance impact. You can track this type of execution with xEvents hekaton_slow_parameter_passing, with reason = named_parameters. |

If any SET options are in effect, statistics are gathered at the procedure level and not at the statement level.

Note 1: Statement-level execution statistics can be gathered with xEvents by capturing the sp_statement_completed event. They can also be seen using Query Store (detailed in a future post).

Note 2: Due to the nature of working with memory-optimized tables in general, it’s likely that you will have to implement retry logic. Because of this, and also because of feature limitations within the natively compiled space, Microsoft suggest using an interpreted TSQL wrapper when calling natively compiled stored procedures.

The following query references sys.dm_exec_query_stats to get statistics for natively compiled procedures:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 |

SELECT st.objectid ,OBJECT_NAME(st.objectid) AS 'object name' ,SUBSTRING(st.text, ( qs.statement_start_offset / 2 ) + 1, ( ( qs.statement_end_offset - qs.statement_start_offset ) / 2 ) + 1) AS 'query text' ,qs.creation_time ,qs.last_execution_time ,qs.execution_count ,qs.total_worker_time ,qs.last_worker_time ,qs.min_worker_time ,qs.max_worker_time ,qs.total_elapsed_time ,qs.last_elapsed_time ,qs.min_elapsed_time ,qs.max_elapsed_time ,qs.total_rows ,qs.min_rows ,qs.max_rows ,qs.last_rows FROM sys.dm_exec_query_stats qs CROSS APPLY sys.dm_exec_sql_text(sql_handle) st WHERE st.dbid = DB_ID() AND st.objectid IN ( SELECT object_id FROM sys.sql_modules WHERE uses_native_compilation = 1 ) ORDER BY qs.total_worker_time DESC; |

Note 3: The documentation for sys.dm_exec_query_stats states that the total_rows, min_rows, max_rows, and last_rows columns cannot be NULL, but NULL is still returned. A Connect item has been filed to have those columns return 0.

Parallelism

Parallelism is supported for memory-optimized tables for all index types. While that statement is true when using interpreted stored procedures that reference memory-optimized tables, unfortunately it’s not true when using natively compiled stored procedures.

Actual vs. Estimated

These terms have confused generations of SQL Server technologists.

For natively compiled procedures, enabling “Actual Plan” in SSMS does not return any plan information, but still executes the procedure. Enabling “Estimated Plan” in SSMS for natively compiled procedures is the same as setting SHOWPLAN_XML ON, but does not actually execute the stored procedure. The plan that will be executed is displayed.

Removing plans from the cache

For interpreted stored procedures, DBAs have the ability to remove an offending plan from the plan cache. This is not possible with natively compiled stored procedures, because the plan is not stored in the plan cache.

DBCC FREEPROCCACHE

If you execute DBCC FREEPROCCACHE and expect your natively compiled plans to magically disappear, you will no doubt be disappointed. That’s because DBCC FREEPROCCACHE has no effect on compiled modules, as they are not stored in the plan cache that’s used for interpreted TSQL. But executing DBCC FREEPROCCACHE will of course remove all existing plans for interpreted TSQL from the plan cache (so don’t do that…unless you’re really, really sure you want to recompile all of your interpreted procs).

Parameter sniffing

With interpreted stored procedures, parameter sniffing can severely impact performance. Parameter sniffing is not possible for natively compiled procedures, because all natively compiled procedures are executed with OPTIMIZE FOR UNKNOWN.

Statistics

SQL 2016 has the ability to automatically update statistics on memory-optimized tables if your database is has a compatibility level of at least 130. If you don’t want to depend on SQL Server to determine when stats should be updated, you can update statistics manually (and we no longer have to use FULLSCAN, as was the case in SQL 2014). Statistics for index key columns are created when an index is created.

Database upgrades and statistics

As mentioned earlier, if your database was set to compatibility level 120 (SQL 2014), and you want to take advantage of auto-update statistics, you must change the compatibility level to 130. But statistics still won’t be auto-updated unless you manually update them one last time.

Recompilation

When you create a natively compiled stored procedure, it gets compiled, and execution plans for the queries contained within the procedure are created. As the data changes, those execution plans will be based on older statistics, and might not perform at the highest possible level. Many people think that if you update statistics, natively compiled stored procedures will magically recompile. Unfortunately, this is not correct – natively compiled stored procedures are only recompiled under the following circumstances:

- When you execute sp_recompile (this should be done after statistics are updated)

- Database restart

Database restart includes at least the following events:

- Database RESTORE

- OFFLINE/ONLINE of database

- Failover (FCI or Availability Group)

- SQL Server service restart

- Server boot

Unlike memory-optimized tables – which are all created, compiled, and placed into memory upon database restart – natively compiled stored procedures are recompiled when first executed. This reduces the amount of time required for database recovery, but affects the first-time execution of the procedure.

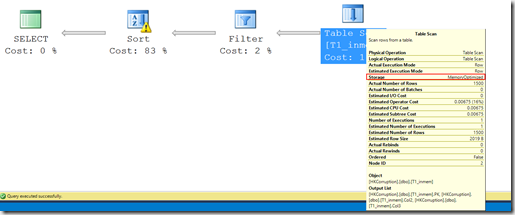

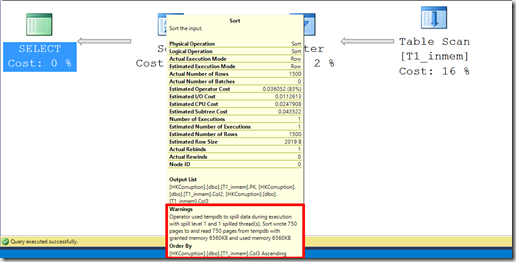

Plan operators

For traditional tables (harddrive-based), the number of pages expected to be returned by an operator has a significant impact on the cost, and therefore affects the plan. Since memory-optimized tables are not stored in pages, this type of calculation is irrelevant.

For memory-optimized tables, the engine keeps track of how many rows are in each table. This means that estimates for full table scans and index scans are always accurate (because they are always known). For memory-optimized tables, the most important factor for costing is the number of rows that will be processed by a single operator. Older statistics might reference row counts that are no longer valid, and this can affect plan quality.

Nested execution

Prior to SQL 2014, it was not possible for one natively compiled stored procedure to call another natively compiled stored procedure. This restriction has been lifted in SQL 2016.

We will continue troubleshooting natively compiled stored procedures in a future post.