Much of what you see in the blogosphere pertaining to log shipping and AWS references an on-premises server as part of the topology. I searched far and wide for any information about how to set up log shipping between AWS VMs, but found very little. However, I have a client that does business solely within AWS and needed an HA/DR solution that did not include on-premises servers.

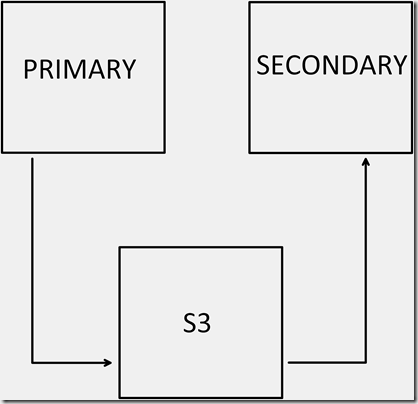

Due to network latency issues and disaster recovery requirements (the log shipping secondary server must reside in a separate AWS region), it was decided to have the Primary server push transaction logs to S3, and the Secondary server pull from S3. On the Primary, log shipping would occur as usual, backing up to a local share, with a separate SQL Agent job responsible for copying the transaction log backups to S3. Amazon provides a set of PowerShell cmdlets in AWS Tools for Windows PowerShell, which can be downloaded here. One could argue that Amazon RDS might solve some of the HA/DR issues that this client faced, but it was deemed too restrictive.

S3 quirks

When files are written to S3, the date and time when the file was last modified is not retained. That means when the Secondary server polls S3 for files to copy, it cannot rely on the date/time from S3. Also, it is not possible to set the LastModified value on S3 files. Instead, a list of S3 file names must be generated and compared to the files that reside on the Secondary. If an S3 file does not reside locally, it must be copied.
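The name-based comparison described above can be sketched as follows. This is only an illustration under stated assumptions: the function name `Get-MissingKeys` and its parameters are hypothetical, and it takes a plain list of key names so it can be tried without an AWS connection.

```powershell
Set-StrictMode -Version Latest

# Hypothetical helper: returns the S3 key names that have no matching file
# in the local folder. Names are compared, never dates, because the S3
# timestamp does not reflect when the backup was originally written.
function Get-MissingKeys {
    param(
        [string[]] $S3Keys,       # key names taken from the bucket listing
        [string]   $LocalFolder   # folder on the Secondary that receives the files
    )

    foreach ($Key in $S3Keys) {
        $LocalPath = Join-Path -Path $LocalFolder -ChildPath $Key
        if (-not (Test-Path -LiteralPath $LocalPath)) {
            $Key   # emit the key; it still needs to be copied
        }
    }
}
```

Calling `Get-MissingKeys -S3Keys $Names -LocalFolder 'C:\Backups\LogShipping'` would return only the names that still need to be downloaded, which keeps the copy job safe to rerun.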

Credentials – AWS Authentication

AWS supports different methods of authentication:

From an administrative perspective, I don’t have and don’t want access to the client’s AWS administrative console. Additionally, I needed a solution that I could easily test and modify without involving the client. For this reason, I chose an authentication solution based on AWS profiles that are stored within the Windows environment, for a specific Windows account (in case you’re wondering, the profiles are encrypted).

Windows setup

- create a Windows user named SQLAgentCmdProxy

- create a password for the SQLAgentCmdProxy account (you will need this later)

The SQLAgentCmdProxy Windows account will be used as a proxy for SQL Agent job steps, which will execute PowerShell scripts. (NOTE: if you change the drive letters and/or folder names, you will need to update the scripts in this post.)

from a cmd prompt, execute the following:

md c:\Powershell

md c:\Backups\LogShipping

Powershell setup

(The scripts in this blog post should be run on the Secondary log shipping server, but with very little effort, they can be modified to run on the Primary and push transaction log backups to S3.)

The following scripts assume you already have an S3 bucket that contains one or more transaction log files that you want to copy to the Secondary server (they must have the extension “trn”, otherwise you will need to change -Match “trn” in the script below). Change the bucket name to match your bucket, and if required, also change the name of the region. Depending on the security configuration for your server, you may also need to execute “Set-ExecutionPolicy RemoteSigned” in a Powershell prompt as a Windows Administrator, prior to executing any Powershell scripts.
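One caveat with the `-Match "trn"` filter mentioned above: the operator performs a regular expression match anywhere in the string, so it also accepts keys that merely contain those three letters. If that matters for your bucket, a pattern anchored to the end of the key is one option. The sketch below uses made-up key names purely for illustration.

```powershell
# Made-up key names, for illustration only
$Keys = @(
    'Sales_20150101120000.trn',
    'trn_readme.txt',
    'Sales_20150101000000.bak'
)

# Loose filter: matches "trn" anywhere in the key
$Loose = @($Keys | Where-Object { $_ -match 'trn' })

# Stricter filter: the key must end with the .trn extension
$Strict = @($Keys | Where-Object { $_ -match '\.trn$' })

"Loose filter kept  : $($Loose -join ', ')"
"Strict filter kept : $($Strict -join ', ')"
```

The loose filter keeps both the real log backup and `trn_readme.txt`; the anchored one keeps only the backup.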

After installing AWS Tools for Windows Powershell, create a new Powershell script with the following commands

import-module "C:\Program Files (x86)\AWS Tools\PowerShell\AWSPowerShell\AWSPowerShell.psd1"

Set-AWSCredentials -AccessKey <AccessKey> -SecretKey <SecretKey> -StoreAs default

Be sure to fill in your AccessKey and SecretKey values in the script above, then save the script as C:\Powershell\Setup.ps1. When this script is executed, it will establish an AWS environment based on the proxy for the SQL Agent job step.

The next step is to create a new Powershell script with the following commands:

Set-StrictMode -Version Latest

import-module "C:\Program Files (x86)\AWS Tools\PowerShell\AWSPowerShell\AWSPowerShell.psd1"

Initialize-AWSDefaults -ProfileName default -Region us-east-1

$LSBucketName = "test-bucket"

$S3Objects = Get-S3Object -bucketname $LSBucketName

$FilesOfInterest = $S3objects | Select Key | Where-Object key -Match "trn"

$LogShippingFolder = "C:\Backups\LogShipping"

$ListOfCopiedFiles= @()

foreach ($S3File in $FilesOfInterest ) {

$FileWeWant = $LogShippingFolder + "\" + $S3File.Key

$LocalExists = Test-Path ($FileWeWant)

if ($LocalExists -eq $false) {

Copy-S3Object -BucketName $LSBucketName -Key $S3File.Key -LocalFile $FileWeWant

$ListOfCopiedFiles = $ListOfCopiedFiles + $S3file.Key

}

}

# set LastWriteTime on the files just copied, because S3 does not preserve it

Set-Location $LogShippingFolder

$Format = 'yyyyMMddHHmmss'

foreach ($FileItem in $ListOfCopiedFiles) {

$File = Get-Item $FileItem

if ($File.Extension -eq ".bak" -or $File.Extension -eq ".dif" -or $File.Extension -eq ".trn") {

# use the last underscore so database names that contain underscores still parse

$FileNameString = $File.BaseName.Substring($File.BaseName.LastIndexOf("_") + 1)

$FileNameDateTime = [DateTime]::ParseExact($FileNameString, $Format, [Globalization.CultureInfo]::InvariantCulture)

if ($File.LastWriteTime -ne $FileNameDateTime) {

$File.LastWriteTime = $FileNameDateTime

}

}

}

Again, you should substitute your bucket and region names in the script above. Note that after the files are copied to the Secondary, the LastWriteTime of each file is updated based on the file name (log shipping uses UTC date/time values when naming transaction log backups). Save the PowerShell script as C:\powershell\CopyS3TRNToLocal.ps1
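The file-name-to-timestamp step can also be tried on its own. The sketch below is a minimal version under two assumptions: file names follow the `<database>_yyyyMMddHHmmss.trn` pattern, and database names may themselves contain underscores (so it splits on the last one). `Get-BackupTimestamp` is a hypothetical helper name, not part of the AWS tools.

```powershell
Set-StrictMode -Version Latest

# Hypothetical helper: extracts the timestamp embedded in a log shipping
# file name of the form <database>_yyyyMMddHHmmss.trn.
# Returns $null when the name does not follow that pattern.
function Get-BackupTimestamp {
    param([string] $FileName)

    $BaseName = [IO.Path]::GetFileNameWithoutExtension($FileName)

    # Split on the LAST underscore so names such as "Sales_DW" still work
    $Underscore = $BaseName.LastIndexOf('_')
    if ($Underscore -lt 0) { return $null }

    $Stamp  = $BaseName.Substring($Underscore + 1)
    $Parsed = [DateTime]::MinValue
    $Ok = [DateTime]::TryParseExact(
        $Stamp,
        'yyyyMMddHHmmss',
        [Globalization.CultureInfo]::InvariantCulture,
        [Globalization.DateTimeStyles]::None,
        [ref] $Parsed)

    if ($Ok) { return $Parsed }
    return $null
}

Get-BackupTimestamp 'Sales_DW_20150314092653.trn'   # a DateTime for 14 March 2015, 09:26:53
Get-BackupTimestamp 'notes.txt'                      # returns nothing
```

Using `TryParseExact` rather than `ParseExact` means an unexpected file in the folder is skipped instead of stopping the job with an exception.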

SQL Server setup

- create a login for the SQLAgentCmdProxy Windows account (for our purposes, we will make this account a member of the sysadmin role, but you should not do that in your production environment)

- create a credential named TlogCopy, mapped to SQLAgentCmdProxy (you will need the password for SQLAgentCmdProxy in order to accomplish this), and a SQL Agent proxy named TlogCopyFromS3Credential that uses that credential

- create a SQL Agent job

- create a job step, Type: Operating System (CmdExec), Run as: TlogCopyFromS3Credential

Script for the above steps

CREATE LOGIN [<DomainName>\SQLAgentCmdProxy] FROM WINDOWS

CREATE CREDENTIAL TlogCopy WITH IDENTITY = '<DomainName>\SQLAgentCmdProxy', SECRET = '<SecretPasswordHere>';

GO

sp_addsrvrolemember @loginame = [<DomainName>\SQLAgentCmdProxy], @rolename = 'sysadmin'

GO

USE msdb;

GO

EXEC msdb.dbo.sp_add_proxy @proxy_name=N'TlogCopyFromS3Credential',@credential_name=N'TlogCopy', @enabled=1;

GO

EXEC msdb.dbo.sp_grant_proxy_to_subsystem @proxy_name=N'TlogCopyFromS3Credential', @subsystem_id=3;

GO

EXEC dbo.sp_add_job

@job_name = N'CopyTlogsFromS3'

,@enabled = 1

,@description = N'CopyTlogsFromS3';

GO

EXEC msdb..sp_add_jobserver

@job_name = 'CopyTlogsFromS3'

,@server_name = '(local)'

GO

EXEC sp_add_jobstep

@job_name = N'CopyTlogsFromS3'

,@step_name = N'Copy tlogs from S3'

,@subsystem = N'CMDEXEC'

,@proxy_name = N'TlogCopyFromS3Credential'

,@command = N'C:\Windows\System32\WindowsPowerShell\v1.0\powershell.exe "C:\powershell\Setup.ps1"';

- Change references to <DomainName> to be your domain or local server name, and save the script

- Execute the job

- Open the job and navigate to the job step. In the Command window, change the name of the Powershell script from Setup.ps1 to CopyS3TRNToLocal.ps1

- Execute the job

- Verify the contents of the C:\Backups\LogShipping folder; you should now see the file(s) from your S3 bucket

Troubleshooting credentials

If you see errors for the job that resemble this:

InitializeDefaultsCmdletGet-S3Object : No credentials specified or obtained from persisted/shell defaults.

then recheck the AccessKey and SecretKey values that you ran in the Setup.ps1 script. If you find errors in either of those keys, you’ll need to rerun the Setup.ps1 file (change the name of the file to be executed in the SQL Agent job, and re-run the job). If you don’t find any errors in the AccessKey or SecretKey values, you might have luck with creating the AWS profile for the proxy account manually (my results with this approach have been mixed). Since profiles are specific to a Windows user, we can use runas /user:SQLAgentCmdProxy powershell_ise.exe to launch the Powershell ISE, and then execute the code from Setup.ps1.

You can verify that the Powershell environment uses the SQL proxy account by temporarily adding $env:USERNAME to the script.
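A small diagnostic along these lines is sketched below. It assumes the job step output ends up in the job history, and it uses `[Environment]::UserName` as an alternative to `$env:USERNAME`; the function name is hypothetical and the lines should be removed once the job runs under the expected account.

```powershell
# Hypothetical diagnostic helper: reports which account the script is running as
function Get-RunAsDescription {
    # [Environment]::UserName is the account that owns the current process
    $User  = [Environment]::UserName
    $Stamp = (Get-Date).ToString('yyyy-MM-dd HH:mm:ss')
    "[$Stamp] script running as '$User'"
}

Get-RunAsDescription
```

If the reported name is not SQLAgentCmdProxy, the job step is probably not using the proxy, and the AWS profile stored for that account will not be found.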

S3 Maintenance

When you set up log shipping on the Primary or Secondary, you can specify the retention period, but S3 file maintenance needs to be a bit more hands-on. The following script handles purging local and S3 files with the extension “trn” that are more than 30 days old, based on the UTC date/time in the file name.

Set-StrictMode -Version Latest

import-module "C:\Program Files (x86)\AWS Tools\PowerShell\AWSPowerShell\AWSPowerShell.psd1"

Initialize-AWSDefaults -ProfileName default -Region us-east-1

$S3BucketName = "test-bucket"

$S3Objects = Get-S3Object -bucketname $S3BucketName

$FilesOfInterest = $S3objects | Select Key, LastModified

$TRNFolder = "C:\Backups\LogShipping"

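# file names carry UTC date/time values, so compute the purge date in UTC as well

$Now = (Get-Date).ToUniversalTime()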

$TRNPurgeDate = $Now.AddDays(-30)

# We must jump through some hoops because the timestamp in S3 will not match UTC date/time value embedded in the trn file.

# The date/time portion of the file name must be converted to a datetime value, so that we can compare it to $TRNPurgeDate

foreach ($S3File in $FilesOfInterest ) {

if ($S3File.Key -match "trn") {

$Trn = $S3File.Key

$Underscore = $Trn.LastIndexOf("_") + 1

$Period = $Trn.LastIndexOf(".")

$fmt = 'yyyyMMddHHmmss'

$DateTimeString = $Trn.Substring($Underscore, $Period - $Underscore)

$DateTimeStringDateTime = [DateTime]::ParseExact($DateTimeString,$Fmt, [Globalization.CultureInfo]::InvariantCulture)

if ($DateTimeStringDateTime -lt $TRNPurgeDate) {

$S3File.Key

Remove-S3Object -BucketName $S3BucketName -Key $S3File.Key -Force #-WhatIf

}

}

}

Set-Location $TRNFolder

Get-ChildItem | foreach {

if ($_.Extension -match "trn" -and $_.LastWriteTime -lt $TRNPurgeDate) {

Write-Host "Deleting tlog $_"

Remove-Item $_.FullName #-WhatIf

}

}

Save the script, and create a SQL Agent job to execute it. You’ll also have to reference the proxy account as in the prior SQL Agent job.
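To try out the retention rule without touching a bucket, the date test can be isolated in a small function. The sketch below is illustrative only: `Select-ExpiredKeys` is a hypothetical name, the keys are made up, and it assumes key names end in `_yyyyMMddHHmmss.trn`.

```powershell
Set-StrictMode -Version Latest

# Hypothetical helper: returns the keys whose embedded timestamp
# (<database>_yyyyMMddHHmmss.trn) is older than the cutoff.
function Select-ExpiredKeys {
    param(
        [string[]] $Keys,
        [DateTime] $Cutoff
    )

    foreach ($Key in $Keys) {
        # Capture the 14 digits that sit between the last underscore and .trn
        if ($Key -match '_(\d{14})\.trn$') {
            $Stamp = [DateTime]::ParseExact(
                $Matches[1], 'yyyyMMddHHmmss',
                [Globalization.CultureInfo]::InvariantCulture)
            if ($Stamp -lt $Cutoff) { $Key }
        }
    }
}

# Made-up keys and a fixed cutoff so the result is repeatable
$Cutoff = New-Object DateTime 2015, 3, 1
Select-ExpiredKeys -Keys 'Sales_20150101120000.trn', 'Sales_20150315120000.trn' -Cutoff $Cutoff
# → Sales_20150101120000.trn
```

Keys that do not match the pattern are ignored rather than deleted, which is the safer default for a purge job. Note that `ParseExact` still throws if the 14 digits do not form a valid date.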

Don’t forget

If you use log shipping between AWS VMs as outlined in this post, you will need to disable/delete the SQL Agent copy jobs on the Primary and Secondary servers.

Disaster Recovery

All log shipping described here occurs within the AWS cloud. An alternative would be to ship transaction logs to a separate storage service (that does not use S3), or a completely separate cloud. At the time of this writing, this blog post by David Bermingham clearly describes many of the issues and resources associated with HA/DR in AWS.

“Hope is not a strategy”

HA/DR strategies require careful planning and thorough testing. In order to save money, some AWS users may be tempted to create a Secondary instance with small memory and CPU requirements, hoping to be able to resize the Secondary when failover is required. For patching, the “resize it when we need it” approach might work, but for Disaster Recovery it can be fatal. Be forewarned that Amazon does not guarantee the ability to start an instance of a specific size, in a specific availability zone/region, unless the instance is reserved. If the us-east region has just gone down, everyone with Disaster Recovery instances in other AWS regions will attempt to launch them. As a result, it is likely that some of those who are desperately trying to resize and then launch their unreserved Disaster Recovery instances in the new region will receive the dreaded “InsufficientInstanceCapacity” error message from AWS. Even in my limited testing for this blog post, I encountered this error after resizing a t1.micro instance to r2.xlarge and attempting to start the instance (the error persisted for at least 30 minutes, but the web is full of stories of people waiting multiple hours). You could try to launch a different size EC2 instance, but there is no guarantee you will have success (more details on InstanceCapacity can be found here).

The bottom line is that if you run a DR instance that is not reserved, at the precise moment you require more capacity it may be unavailable. That’s not the type of hassle you want when you’re in the middle of recovering from a disaster.

I am indebted to Mike Fal (b) for reviewing this post.